We upload our pictures to share with the world, edit documents in the cloud, and back up important files that we can access from multiple devices. Our increasing need for cloud storage is driving cloud providers to constantly seek better technology. This post is about “smart cloud” technology, which can reduce costs and enhance the efficiency of the cloud platform while offering powerful tools to system administrators.

First, what do we mean by “smart”? Our understanding of “smart cloud” is two-fold.

- Smart infrastructure: Smart infrastructure not only provides a comprehensive monitoring tool for the hardware, but also provides advanced algorithms that identify anomalies, filter noise, predict events and recommend actions that help cloud providers prevent loss and increase performance.

- Smart tools: The following quote from Rutherford D. Rogers summarizes the challenge we face in the big data era: “We are drowning in information and starving for knowledge.” Many customers hold huge volumes of data yet lack deep insight into it. To build a smart cloud, it is essential to build an army of smart tools, each specialized for a particular type of data and customer goal. These tools should help customers grasp the real knowledge buried beneath their mountains of information.
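As a rough illustration of the anomaly detection mentioned under smart infrastructure, here is a minimal sketch that flags outliers in a stream of hardware readings using a running z-score. It is one simple possibility and not a description of any provider's actual algorithm. The names `RunningStats` and `flag_anomalies`, the threshold and warm-up values, and the temperature readings are all assumptions made for the example.

```python
import math


class RunningStats:
    """Welford's online algorithm for mean and variance over a stream."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the running mean

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std(self):
        # Sample standard deviation; 0.0 until at least two readings are seen.
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


def flag_anomalies(readings, threshold=3.0, warmup=5):
    """Return indices of readings more than `threshold` std devs from the running mean."""
    stats = RunningStats()
    flagged = []
    for i, x in enumerate(readings):
        std = stats.std()
        if stats.n >= warmup and std > 0 and abs(x - stats.mean) > threshold * std:
            flagged.append(i)  # keep the outlier out of the baseline
        else:
            stats.update(x)
    return flagged


# Hypothetical drive temperatures in degrees Celsius; the spike at index 8 is invented.
temps = [40.1, 39.8, 40.3, 40.0, 39.9, 40.2, 40.1, 39.7, 55.0, 40.0]
print(flag_anomalies(temps))
```

Because the statistics are updated one reading at a time, the sketch never needs to hold the full history in memory. On the invented readings it flags only the spike at index 8.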

Many things go into building a smart cloud, but good learning algorithms are at its core. For the past several decades, scientists from different backgrounds have pioneered research on learning from data, resulting in a rich library of algorithms. Computer scientists have developed artificial intelligence tools to build robots and self-driving cars. Statisticians have invented statistical learning algorithms to study diseases and economics. Physicists apply learning algorithms to classify galaxies and study particles generated by the Large Hadron Collider.

With ever more powerful computing, we now have access to analytical methods that work in a largely automated fashion, can handle massive data, and can receive and process this data in real-time, streaming form. With such powerful tools at our disposal, it can be tempting to let methods drive analyses and give little regard to the nature of the specific variables being analyzed. For that reason, it is now more essential than ever for the data scientist to understand where statistical and machine learning methods apply and to make educated choices about which algorithms suit the problem at hand.

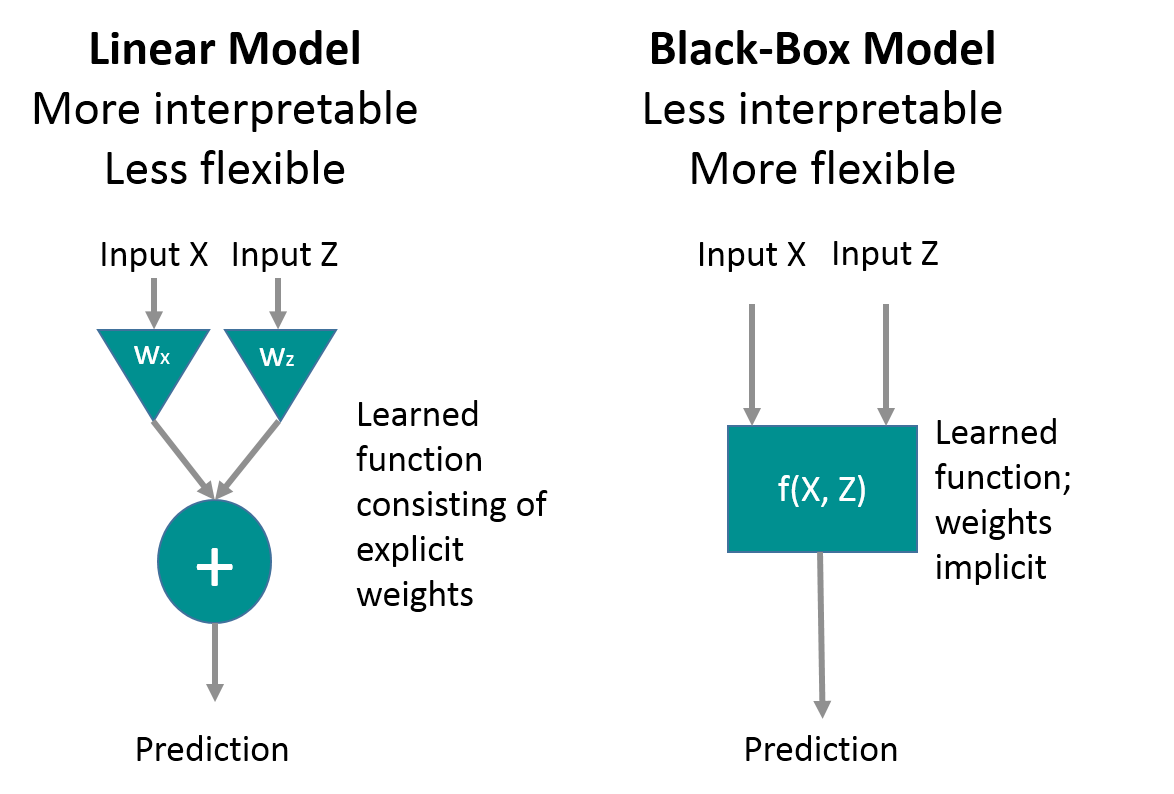

One might frame these choices in terms of the tradeoff between flexibility and interpretability.1 Statistical learning methods vary in the degree to which they facilitate either of these characteristics; a choice of method, then, should be informed by whether the particular context at hand is best served by one or the other. Consider the schematic below that compares a highly interpretable (but less flexible) method, the linear model, to a more flexible (but less interpretable) “black box” approach (such as support vector machines or boosted regression trees):

In a factory context, we may be interested simply in predicting outcomes of the manufacturing process; e.g., whether the next drive off the line will fail a given test. In that case our primary concern is outcome prediction, and we would lean toward a more flexible method such as support vector machines or boosted regression trees. On the other hand, we might be interested in modeling the individual contributions of various inputs to the likelihood of drive failure; in that case we’d favor interpretability over flexibility and might choose linear models (e.g., least squares with subset selection) or simple decision trees.
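To make the tradeoff concrete, the following minimal sketch fits two models to the same data: a one-input least-squares line, whose slope and intercept can be read directly, and a k-nearest-neighbors regressor, which is flexible but exposes no coefficients. k-nearest-neighbors is a stand-in chosen because it needs no external library; it is not one of the methods named above. The data are synthetic, and the function names are assumptions for illustration only.

```python
import math


def fit_line(xs, ys):
    """Ordinary least squares for one input: returns (intercept, slope)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope


def knn_predict(xs, ys, x_new, k=3):
    """Average the responses of the k training points nearest to x_new."""
    nearest = sorted(range(len(xs)), key=lambda i: abs(xs[i] - x_new))[:k]
    return sum(ys[i] for i in nearest) / k


def mse(preds, targets):
    """Mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)


# Synthetic, noise-free data with a nonlinear relationship (an assumption for illustration).
train_x = [0.1 * i for i in range(63)]
train_y = [math.sin(x) for x in train_x]
# Held-out points halfway between neighboring training inputs.
test_x = [x + 0.05 for x in train_x[:-1]]
test_y = [math.sin(x) for x in test_x]

intercept, slope = fit_line(train_x, train_y)
linear_mse = mse([intercept + slope * x for x in test_x], test_y)
knn_mse = mse([knn_predict(train_x, train_y, x) for x in test_x], test_y)

print(f"linear: y = {intercept:.2f} + {slope:.2f} * x, test MSE = {linear_mse:.3f}")
print(f"3-NN:   no coefficients to read, test MSE = {knn_mse:.3f}")
```

On this deliberately nonlinear data the flexible model predicts the held-out points far better, while the line offers a single slope that is easy to explain. On data that really is close to linear, the gap in accuracy would shrink and the interpretable model would likely be the better choice.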

Finally, even as tools get smarter, we must remain measured in our expectations of the wonderful things they might help us learn. In essence, we would be wise to heed the caution expressed by John Tukey,[2] the father of modern exploratory data analysis:

> The combination of some data and an aching desire for an answer does not ensure that a reasonable answer can be extracted from a given body of data.

[1] See, for example, James, G., Witten, D., Hastie, T., & Tibshirani, R. (2013). An Introduction to Statistical Learning with Applications in R, pp. 24-26. New York: Springer.

[2] Tukey, J. (1986). Sunset salvo. The American Statistician, 40(1). Retrieved April 15, 2014, from http://www.jstor.org/pss/2683137.

Authors: Kuo Liu, Ed Wiley, and Dan Lingenfelter