Cloud computing platforms are often composed of highly fault-tolerant systems. Examples include systems that employ N-fold data replication, K out of N erasure coding and declustered-parity RAID. The data durability of such systems is quantified in terms of the Mean Time to Data Loss (MTTDL) or the probability of losing data (e.g., a single object) within one year. Measuring these durability values through real-world testing is hard, since it requires a large amount of testing time and data to analyze. Mathematical models come to the rescue here and allow durability values to be computed for these systems. It is extremely important that durability is computed accurately and accounted for, since data loss is usually quite costly: overestimating durability exposes the system to the risk of data loss (a strict no-no), while underestimating it increases cost. The exercise hence boils down to an appropriate choice of model for estimating data durability, which is the central theme of this blog.
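To connect the two durability metrics above, the sketch below converts between MTTDL and the probability of losing data within one year. It assumes, as simple Markov models do, that the time to data loss is exponentially distributed with rate 1/MTTDL, and it uses a 365-day (8,760-hour) year; the function names are illustrative only.

```python
import math

HOURS_PER_YEAR = 8760.0  # assumption: 365-day year


def annual_loss_probability(mttdl_hours: float) -> float:
    """Probability of at least one data-loss event within one year.

    Assumes the time to data loss is exponentially distributed
    (constant loss rate 1/MTTDL), so P(loss within t) = 1 - exp(-t / MTTDL).
    """
    # expm1 keeps precision when MTTDL is much larger than one year
    return -math.expm1(-HOURS_PER_YEAR / mttdl_hours)


def mttdl_from_annual_probability(p: float) -> float:
    """Inverse of annual_loss_probability under the same exponential assumption."""
    return -HOURS_PER_YEAR / math.log1p(-p)


if __name__ == "__main__":
    for mttdl in (1e6, 1e9, 1e12):
        p = annual_loss_probability(mttdl)
        print(f"MTTDL = {mttdl:.0e} h -> P(loss in 1 year) = {p:.3e}")
```

For MTTDL values far above one year, the annual probability is approximately 8,760/MTTDL.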

Two main quantities of interest in modeling the data durability of storage systems are the Mean Time to Failure (MTTF) and the Mean Time to Repair (MTTR) of individual hard drives. Decreasing MTTF or increasing MTTR (i.e., decreasing the MTTF/MTTR ratio) generally hurts data durability. Traditional RAID systems (like RAID6) are bottlenecked during rebuilds by the speed of individual hard drives, so MTTR increases with hard drive capacity. Correlated hard drive failures, on the other hand, are tantamount to decreasing the effective MTTF. Traditional durability models (as in Ref [1]) use a two-state Markov chain model (healthy and failed), with the failed state reached when the number of hard drive failures exceeds the fault tolerance of the system (two drive failures in the case of RAID6). The analysis is carried out on a single RAID group, and the resulting MTTDL is divided by the number of RAID groups to obtain the system's MTTDL.
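For reference, the kind of closed form that sequential-rebuild Markov models reduce to can be sketched as follows. This is the standard leading-order approximation for a group of n drives that tolerates m failures and repairs one drive at a time, valid when MTTR is much smaller than MTTF. It is offered as an illustration rather than as the exact expression printed in Ref [1], and the function names and example configuration are hypothetical.

```python
from math import prod


def group_mttdl_approx(n: int, m: int, mttf: float, mttr: float) -> float:
    """Leading-order MTTDL (hours) of one group of n drives tolerating m failures.

    Standard approximation for a Markov chain with one repair at a time,
    valid when MTTR << MTTF:
        MTTDL ~ MTTF^(m+1) / (n (n-1) ... (n-m) * MTTR^m)
    """
    failure_paths = prod(n - i for i in range(m + 1))  # n (n-1) ... (n-m)
    return mttf ** (m + 1) / (failure_paths * mttr ** m)


def system_mttdl_approx(groups: int, n: int, m: int, mttf: float, mttr: float) -> float:
    """System MTTDL: the per-group value divided by the number of groups."""
    return group_mttdl_approx(n, m, mttf, mttr) / groups


if __name__ == "__main__":
    # Hypothetical example: 100 RAID6 groups (m = 2) of 10 drives each
    print(f"{system_mttdl_approx(100, 10, 2, 1_400_000, 24):.3e} hours")
```

Because MTTR enters as MTTR^m, a rebuild that slows down as drive capacity grows penalizes durability more heavily the higher the fault tolerance: doubling MTTR divides this MTTDL by 2^m.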

Two aspects are missing from the simple model in Ref [1]. First, the model assumes that drives are rebuilt sequentially, one at a time, whereas current systems rebuild the lost data in parallel across all remaining hard drives, which improves rebuild performance and durability. Second, the model ignores combinatorics: fragments of the data are distributed randomly across all hard drives in the system, so losing more drives than the fault tolerance level does not necessarily mean losing data. The first aspect, parallel rebuild, has been incorporated previously in an easy-to-use improved model presented in Ref [2]. As for correlated drive failures, the model in Ref [1] has been improved upon by making an explicit assumption about their effects.
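The combinatorics argument can be made concrete with a hypergeometric calculation. The sketch below assumes that each stripe places its fragments on distinct drives chosen uniformly at random and that stripes are placed independently of one another; both are simplifying assumptions, and the function and parameter names are hypothetical.

```python
import math


def p_stripe_loss(drives: int, width: int, tolerance: int, failed: int) -> float:
    """Probability that one stripe loses data while `failed` drives are down.

    Assumes the stripe's `width` fragments sit on `width` distinct drives chosen
    uniformly at random out of `drives`; the stripe is lost only if more than
    `tolerance` of its fragments land on failed drives (hypergeometric tail).
    """
    total = math.comb(drives, width)
    lost = sum(
        math.comb(failed, k) * math.comb(drives - failed, width - k)
        for k in range(tolerance + 1, min(failed, width) + 1)
    )
    return lost / total


def p_any_stripe_loss(p_stripe: float, stripes: int) -> float:
    """Probability that at least one of `stripes` independently placed stripes is lost."""
    if p_stripe >= 1.0:
        return 1.0
    # 1 - (1 - p)^S, computed stably for tiny p and large S
    return -math.expm1(stripes * math.log1p(-p_stripe))
```

For example, with a hypothetical pool of 1,000 drives, a 14 out of 16 layout and three concurrent drive failures, `p_stripe_loss(1000, 16, 2, 3)` equals 16·15·14/(1000·999·998), about 3.4e-6 per stripe. Exceeding the fault tolerance is therefore far from a guaranteed loss for any given stripe; whether some stripe is lost depends on how many stripes the system holds.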

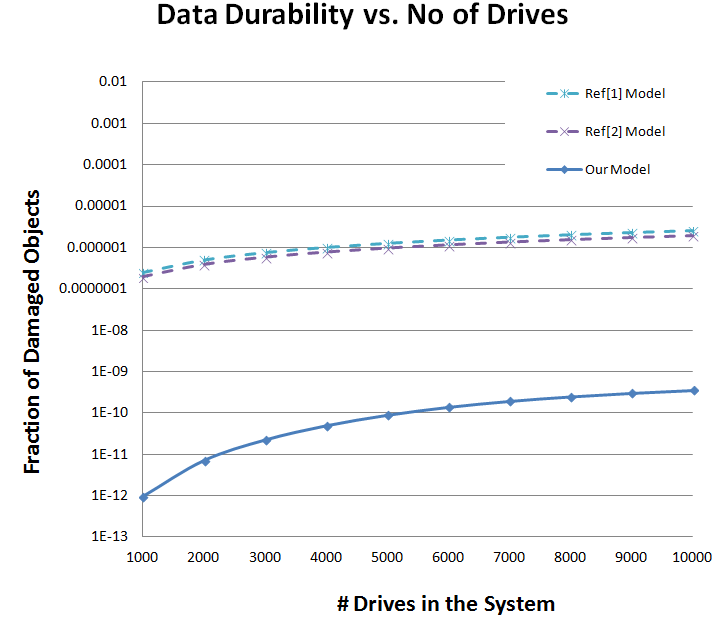

It is hence desirable that durability models incorporate all of the above aspects, namely parallel rebuild, correlated failures and the effects of fragment placement strategies. A continuous-time Markov chain (CTMC) is a good modeling framework for incorporating these aspects while still computing the durability of the system at hand quickly. The following figure compares one such model, developed by the Cloud Modeling and Data Analytics (CMDA) group, with the simple and improved equations presented in Ref [1] and Ref [2] for a 14 out of 16 erasure coding scheme. The drive MTTF is set to 1,400,000 hours, the MTTR to 24 hours and the block size to 128 MB. Data loss due to bit rot (or UREs) and correlated failures are excluded here and will be incorporated into future models. The plot shows that the simple RAID-based models underpredict durability in this setting, and highlights the need for improved models that correctly capture the durability of highly fault-tolerant systems instead of generalized simple models.
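To illustrate how a CTMC yields an MTTDL, the sketch below computes the exact mean time to absorption of a simple birth-death chain for one group, using the 14 out of 16 parameters above. It is not the CMDA model: it ignores placement combinatorics, correlated failures and UREs, and the per-state repair rate is supplied by the caller as an assumption. The concurrent-repair rate i/MTTR in the example is a hypothetical stand-in for parallel rebuild.

```python
from typing import Callable


def ctmc_mttdl(n: int, m: int, mttf: float, repair_rate: Callable[[int], float]) -> float:
    """Mean time to data loss of a birth-death CTMC, starting with no failures.

    State i (0 <= i <= m) is the number of failed drives in a group of n drives;
    state m + 1 is absorbing (data loss). From state i, drives fail at rate
    (n - i) / mttf and, for i >= 1, a repair completes at rate repair_rate(i).

    Uses D_i = T_i - T_{i+1}, which satisfies lambda_i * D_i = 1 + mu_i * D_{i-1};
    the MTTDL is T_0 = D_0 + D_1 + ... + D_m because T_{m+1} = 0.
    """
    total = 0.0
    d_prev = 0.0
    for i in range(m + 1):
        lam = (n - i) / mttf                   # failure rate out of state i
        mu = repair_rate(i) if i > 0 else 0.0  # repair rate out of state i
        d_prev = (1.0 + mu * d_prev) / lam     # D_i from D_{i-1}
        total += d_prev
    return total


if __name__ == "__main__":
    MTTF, MTTR = 1_400_000.0, 24.0  # values from the text
    N, M = 16, 2                    # 14 out of 16 erasure coding
    sequential = ctmc_mttdl(N, M, MTTF, lambda i: 1.0 / MTTR)
    # Hypothetical: all i failed drives rebuilt concurrently, each taking MTTR on average
    concurrent = ctmc_mttdl(N, M, MTTF, lambda i: i / MTTR)
    print(f"sequential repair: {sequential:.3e} h per group")
    print(f"concurrent repair: {concurrent:.3e} h per group")
```

The recursion avoids a general linear solve because each state only connects to its neighbors; richer CTMCs that track placement or correlation generally need a sparse linear solver instead.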

References

- [1] Chen P M, Lee E K, Gibson G A, Katz R H and Patterson D A; “RAID: High-Performance, Reliable Secondary Storage”; ACM Computing Surveys, Vol. 26, No. 2; June 1994

- [2] Resch J and Volvovski I; “Reliability models for highly fault-tolerant storage systems”; Cleversafe, Inc.; October 2013

Author: Ajaykumar Rajasekharan