Today, Google receives 2.3 million search queries per second. What will that number be in 2025, assuming exponential growth in search continues apace? 20 million searches per second? 50 million? A billion? Any number would only be a guess.

Current forecasts project that by 2025 the global datasphere will exceed 160 zettabytes, a 10-fold increase over today. But volume isn’t the only defining characteristic of big data. There are two more ‘Vs’ to consider:

- Velocity: especially the avalanche of real-time data from the Internet of Things (IoT) and the need to stay competitive in an on-demand world.

- Variety: especially unstructured data (social media feeds), machine-generated data (IoT devices), and life-critical data feeds (medical monitoring, autonomous vehicles, smart grids).

Data, Data Everywhere

Of course, this explosion of data creates two obvious challenges: analysis and management. The more data you have, the harder it is to analyze, and the harder it is to manage where it sits and how it’s accessed.

Organizations use analytics to mine data for meaningful patterns, to understand operational dynamics, to improve performance, and to try to predict the future. Think of it as interpreting big data to answer big questions that can lead to big results. Typical questions include: What’s really happening in my market? Why is it happening? What will happen next?

Simply put, analytics’ goal is to turn data into information and information into action.

Traditionally a highly technical function, analytics has now moved beyond the IT department. In its Magic Quadrant for BI and analytics platforms, Gartner describes modern analytics platforms as “defined by a self-contained architecture that enables nontechnical users to autonomously execute full-spectrum analytic workflows from data access, ingestion and preparation to interactive analysis, and the collaborative sharing of insights.” Phew.

I think what they mean is that everybody in the organization can now perform their own analytics. Everybody can now access live, interactive data, regardless of the original source or repository. And everybody can now collaborate with their peers in real time, sharing insights that lead to better business decisions. All in the cloud.

Of course, the democratization of analytics only adds to big data’s overhead. Not only do we have to manage the sheer volume, velocity, and variety of data, but now everybody expects access to anything, anywhere, any time. So, among other things, we need to talk about how we manage, store, and access data at this scale.

How Data Is Sorted and Delivered

When it comes to big data management, there are many options.

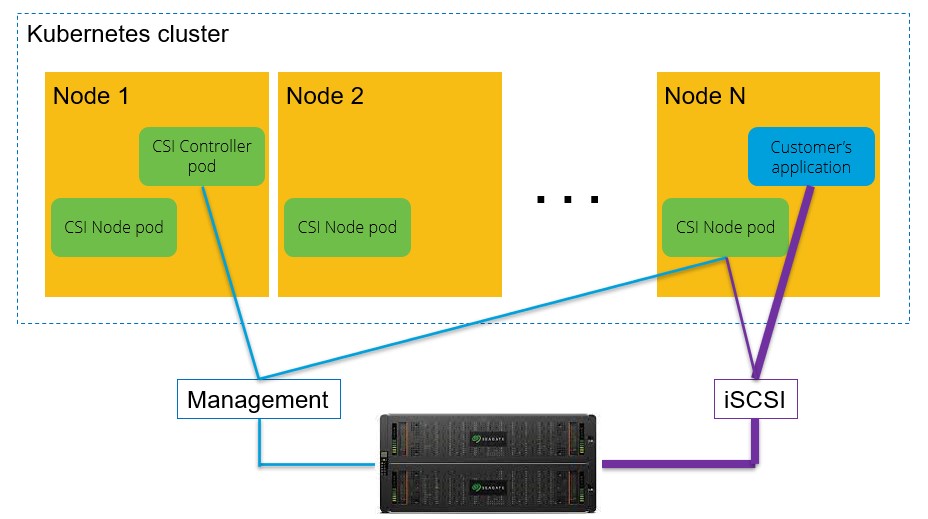

Organizations can choose on-premises (on-prem) storage, or they can outsource storage to a cloud services provider. Hybrid solutions combine on-prem and cloud storage. These days, even companies that choose on-prem for their primary data storage are likely to keep backup copies in the cloud, if only for disaster recovery.

Another important decision is the storage device technology that underpins how storage management systems sort and deliver data. Nowadays, most organizations need a blend of hard disk drives (HDDs), solid state drives (SSDs), and hybrid arrays that combine both, so they can match each technology’s specific strengths to specific use cases and data requirements.

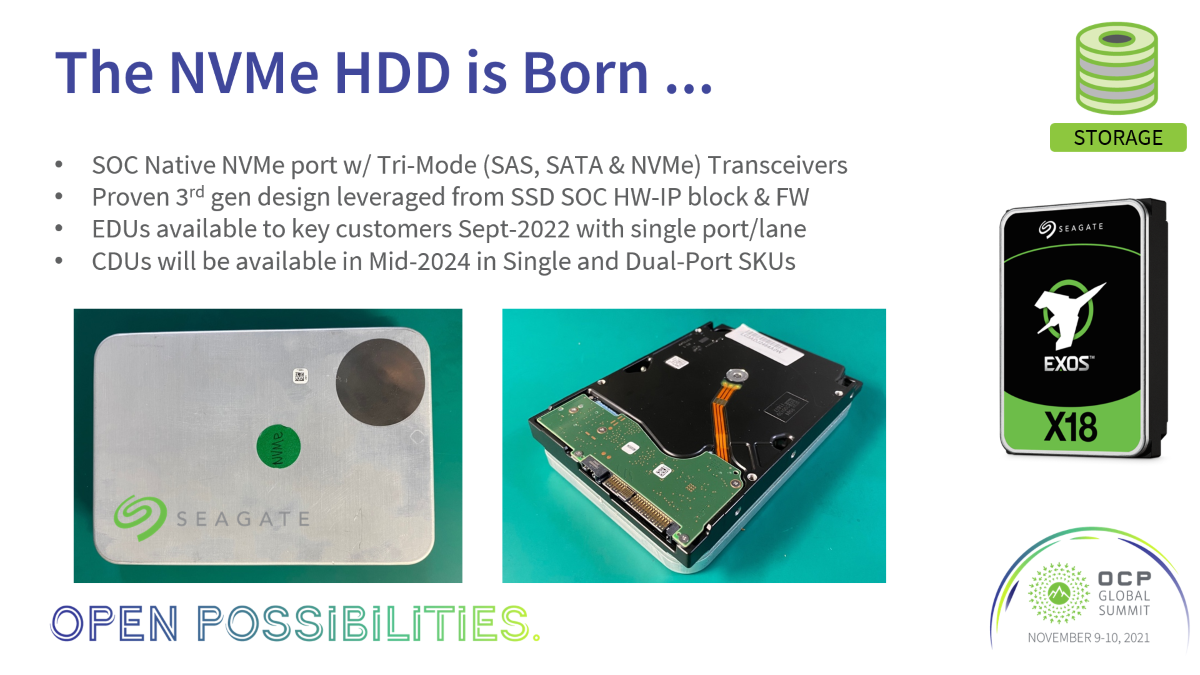

Hard disk drive storage technology is the workhorse for so-called asynchronous analytics, where data is first captured and stored, then analyzed later. Hard drives offer higher maximum capacities, higher common capacities, and a much lower cost per unit of capacity. Manufacturers’ research and development engineers are constantly innovating to get more performance and capacity out of the drives, with the next major technological leap coming in 2018 with Seagate’s new HAMR (heat-assisted magnetic recording) technology.

SSD storage (also called flash storage) is faster and is therefore preferred for synchronous analytics: real-time (or near real-time) analytics on “hot,” or frequently accessed, data. SSDs also run cooler and quieter than HDDs and tend to consume less power.

Because the two technologies differ so much in functional benefits and cost profiles, IT architects have to understand their storage needs thoroughly to find the optimal balance of price, performance, and reliability.

If a company is specifying a data management solution for its big data analytics, the two main factors to consider are frequency and speed. How frequently does it need analytics? How quickly does it need the results?
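To make those two questions concrete, here is a minimal, hypothetical sketch of how an architect might turn them into a first-pass tier suggestion. The `Workload` fields, the threshold values, and the tier labels are all illustrative assumptions, not Seagate guidance or an industry rule; real thresholds depend on the workload, the drives, and the budget.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    accesses_per_day: float  # frequency: how often the data is queried
    max_latency_ms: float    # speed: how quickly each result is needed


# Hypothetical thresholds, chosen only for illustration.
REALTIME_LATENCY_MS = 1.0
HOT_ACCESSES_PER_DAY = 1_000
WARM_ACCESSES_PER_DAY = 10


def suggest_tier(w: Workload) -> str:
    """Suggest a storage tier from access frequency and required speed."""
    if w.max_latency_ms <= REALTIME_LATENCY_MS or w.accesses_per_day >= HOT_ACCESSES_PER_DAY:
        return "ssd"     # synchronous analytics on hot data
    if w.accesses_per_day >= WARM_ACCESSES_PER_DAY:
        return "hybrid"  # warm data: SSD cache in front of HDD capacity
    return "hdd"         # asynchronous analytics: capture now, analyze later


if __name__ == "__main__":
    # Hypothetical workloads, not taken from any real deployment.
    workloads = [
        Workload("fraud-scoring", accesses_per_day=50_000, max_latency_ms=0.5),
        Workload("weekly-sales-report", accesses_per_day=20, max_latency_ms=5_000),
        Workload("archive-audit", accesses_per_day=0.1, max_latency_ms=60_000),
    ]
    for w in workloads:
        print(w.name, suggest_tier(w))
```

Keeping the rule as a small pure function makes it easy to revisit the thresholds as drive prices and performance change, which is the balancing act the paragraph above describes.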

Analytics tends to deal with very large volumes of very small pieces of data. Depending on how synchronous (real-time) the analytics must be, a well-oiled analytics machine may have very little tolerance for latency. Latency, the time it takes the system to complete a single storage transaction or data request, is an underappreciated metric in the storage world. Folks tend to focus on IOPS (input/output operations per second) and throughput, or bandwidth. For analytics, however, latency is often the better measure of performance. Plus, it’s a component of both IOPS and throughput anyway.
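The claim that latency is a component of both IOPS and throughput can be illustrated with Little’s law, a general queueing result (not something stated in this article): the average number of requests in flight equals the request rate multiplied by the average time each request takes. The sketch below applies it under simplifying assumptions, namely a steady-state workload with a fixed queue depth and a uniform request size; the two example systems and the 4 KiB request size are hypothetical, not measurements of any drive.

```python
def iops(queue_depth: int, latency_s: float) -> float:
    """Little's law rearranged: request rate = requests in flight / average latency."""
    return queue_depth / latency_s


def throughput_bytes_per_s(iops_value: float, io_size_bytes: int) -> float:
    """Throughput is the request rate multiplied by the size of each request."""
    return iops_value * io_size_bytes


if __name__ == "__main__":
    io_size = 4096  # hypothetical 4 KiB requests standing in for "very small pieces of data"
    # Two hypothetical systems that report the same IOPS:
    shallow = iops(queue_depth=1, latency_s=0.0001)  # 1 request in flight, 0.1 ms each
    deep = iops(queue_depth=32, latency_s=0.0032)    # 32 requests in flight, 3.2 ms each
    for label, rate, latency_ms in [("shallow", shallow, 0.1), ("deep", deep, 3.2)]:
        mb_s = throughput_bytes_per_s(rate, io_size) / 1_000_000
        print(f"{label}: {rate:,.0f} IOPS, {mb_s:.1f} MB/s, {latency_ms} ms per request")
```

Both systems report the same IOPS and throughput, yet each individual request on the second one waits 32 times longer. That per-request delay is exactly what an IOPS-only comparison hides, and it is what real-time analytics feels.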

Case Study: Big Data, Analytics, and Storage

An interview with Eric Hanson, Chief Information Officer, TrustCommerce

Q: Who is TrustCommerce?

Hanson: TrustCommerce provides a comprehensive suite of payment solutions with a focus on security, data protection, and risk mitigation, featuring PCI-validated Point-to-Point Encryption (P2PE), tokenization, hosted payment pages, and EMV (chip-and-PIN or chip-and-signature cards based on the Europay, MasterCard, and Visa standard). TrustCommerce solutions help partners and clients reduce the cost and complexity of PCI DSS compliance. We offer secure credit card payment processing to organizations in numerous consumer verticals: retail, insurance, parking, education, healthcare, and others.

Q: What makes TrustCommerce unique?

Hanson: We offer multiple payment modalities: card-present devices, websites, mobile solutions, and traditional batch processing. Plus, we work with many of the credit card device manufacturers, third-party POS (point-of-sale) vendors, and back-end processors to glue the whole network together. So, if you’re a merchant, we can offer a wide variety of solutions to meet your precise needs.

Q: What’s the role of analytics at TrustCommerce?

Hanson: We’re a transactional company, so we mainly work with very large volumes of highly normalized data: well over 100 million transactions a year. The analytics we care most about are volume, throughput, and lag. However, aside from the sheer volume and the fact that it’s sensitive consumer and merchant financial data, we also have to deal with a slew of regulations and compliance requirements for all the different industry verticals, all in real time.

We also run sophisticated analytics on our own data—our systems and processes—to optimize our performance. That’s how we stay competitive and continue to deliver service to our clients.

Of course, we also run analytics for our clients—the merchants. We can go back and look at transactions by brand, by cardholder, by time—and like everything else, we’re constantly looking at the balance of cost versus performance.

Q: Do you have your own data centers?

Hanson: We have our own hardware in (mainly) co-located data center facilities. Active and archived data is continually updated and stored in geographically separated facilities.

Q: When you specify storage solutions, what do you look for?

Hanson: We manage a tremendous amount of sensitive data—and we have to ensure its availability and integrity. We strive for sub-second turnaround on transactions, all the way through to the back-end bank networks. But it all comes back to the data—which means bulletproof storage is critical.

With storage, we require speed, reliability, and redundancy. Any interruption to the process is basically lost revenue for our clients. Service disruption means they can’t take payments—it’s that simple.

You usually want a mix of hard drives and SSD—maybe some hybrids. Solid state disks are expensive, but the performance is outstanding. Anywhere we want to minimize potential interruptions to throughput, we look at SSD.

Of course, there’s more to storage than the disks. I can have the fastest disk in the world, but it won’t actually perform that fast if the backplane can’t keep up. And we also use simple but effective data management techniques like partitioning to optimize the overall process. In addition, TrustCommerce solutions are hosted in geographically separated, independent, active-active redundant facilities. Client- and server-side availability switching (failover and load-balancing) is implemented to ensure maximum product and service availability.

Q: How important is data management to your operation?

Hanson: The right storage decisions are key. That’s why I appreciate Seagate’s wide range of technology and platform choices. It allows me to pick the best storage option for a particular set of requirements, giving our customers exactly what they need.

In balancing those needs, we consider basic things like the amount of data we expect to handle and how to grow intelligently with an eye on our legacy storage. Today, I always want a mix of storage solutions (hard drives and SSD), whatever is the right drive for the right application. Like many organizations, we run a multi-tiered infrastructure designed to meet the varying needs applications have for performance, availability, and reliability. We also look at capacity, density, and of course, cost.

—

Big data will only continue to grow, driven by the enormous demands of advanced analytics, the Internet of Things, social media, cognitive computing, and a dozen other datasphere phenomena. But as TrustCommerce’s Eric Hanson notes, the foundation for that growth must be solid, and that always brings us back to making thoughtful, well-researched decisions about how to deploy storage. Where are you going to store the data? How are you going to store the data? How will you balance storage performance, reliability, and cost?

Ask and expect your storage provider to help you answer those questions.
