Most consumers are skeptical when they see a manufacturer whipping out grandiose performance claims. And for good reason. The manufacturer could be stretching the truth, twisting the results, or just being downright misleading. From this distrust grew demand for third-party writers to review products, test claims, and provide an unbiased analysis of the device’s performance and other capabilities, as consumers would experience them.

Who can really claim to be an SSD benchmarking expert?

Solid state drive (SSD) technology is still relatively new in the computer industry, and in many ways SSDs are profoundly different from hard disk drives — perhaps most notably, in the way they record data, to a NAND cell rather than on spinning media. Because of differences in their operation, SSDs have to be tested in ways that are not necessarily obvious.

Can anyone who simply runs a benchmark application claim to be an expert? I would say not. Just as anyone sitting behind the wheel of a car is not necessarily an expert driver. The problem is that it is hard to determine the thoroughness and expertise of an SSD reviewer. Does the author really understand the details behind the technology to run adequate tests and analyze the results?

Can experts present bad data?

Maybe it is obvious, but of course experts can be wrong, especially when they are self-proclaimed mavens without deep experience in the technology they cover. At a minimum, you can generally count on them to act in good faith (that is, to not be intentionally misleading), but they can easily be misinformed (for instance, by manufacturers) and perpetuate the misinformation. What’s more, some reviewers are pressured to do a cursory analysis of an SSD as they crank through countless product evaluations under unremitting deadlines, a crush that can cause them to overlook telling aspects of a drive’s performance. In any case, it is not good to rely on bad data no matter the intention.

What makes for a thorough SSD review?

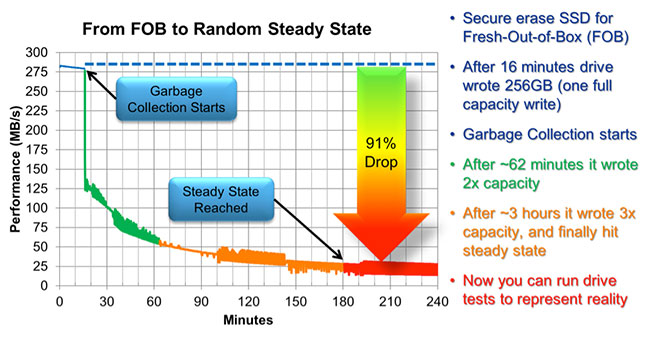

Some reviewers have gone to great lengths to ensure their SSD analysis is extremely detailed and represents real-world environments and performance. These reviewers will generally talk about how their analysis simulates a true user or server environment. The trouble can begin if a reviewer doesn’t recognize normal operation of an SSD in its own environment. With SSDs, normal is when garbage collection is operating, which greatly impacts overall performance. It’s important for reviewers to recognize that, with a new SSD, garbage collection is inactive until at least one full physical capacity of data has been sequentially written to the device. For example, with a 256GB SSD, 256GB of data must be written to trigger garbage collection. At that point, garbage collection is ongoing, the drive has reached its steady-state performance, and the device is ready for evaluation. Random writes are another story, requiring up to three passes (full-capacity writes) randomly written to the SSD before the steady-state performance level shown in the graph is reached.

You can see that running only a few minutes of random write tests on this SSD logs performance of over 275 MB/s. However, once garbage collection starts, performance plunges and then takes up to 3 hours before the true performance of 25 MB/s (a 90% drop) is finally evident — a phenomenon that often is not communicated clearly in reviews nor widely understood.
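To make the size of that drop concrete, here is a minimal arithmetic sketch using only the two figures quoted above (275 MB/s early on, 25 MB/s at steady state). The function name `percent_drop` is purely illustrative.

```python
def percent_drop(before_mb_s: float, after_mb_s: float) -> float:
    """Return the fall from `before_mb_s` to `after_mb_s` as a percentage."""
    return (before_mb_s - after_mb_s) / before_mb_s * 100.0


fresh = 275.0   # MB/s, early random-write figure quoted in the text
steady = 25.0   # MB/s, steady-state figure quoted in the text

print(f"{percent_drop(fresh, steady):.1f}% drop")  # prints "90.9% drop"
```

In other words, a review that stops after the first few minutes would overstate this drive's sustained random-write speed by a factor of 11.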

Good benchmarkers will discuss how their review factors in both garbage collection preparation and steady-state performance testing. Test results that purportedly achieve steady state in less time than in the example above are unlikely to reflect real-world performance. This is all part of what is called SSD preconditioning, but keep in mind that different tests require different steps for preconditioning.
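As a rough sketch of what preconditioning means in terms of data volume, the rule of thumb described above (one full-capacity pass for sequential writes, up to three for random writes) can be written down as follows. The function name and the pass counts in the table are illustrative assumptions taken from this article, not a formal test specification, and the three-pass figure is an upper bound.

```python
# Full-capacity passes suggested in the text before steady state is reached.
# "random" uses the upper bound of three passes mentioned above.
PASSES_BEFORE_STEADY_STATE = {"sequential": 1, "random": 3}


def preconditioning_write_gb(capacity_gb: float, workload: str) -> float:
    """Estimate how much data to write before measuring steady-state speed."""
    if workload not in PASSES_BEFORE_STEADY_STATE:
        raise ValueError(f"unknown workload: {workload!r}")
    return capacity_gb * PASSES_BEFORE_STEADY_STATE[workload]


for workload in ("sequential", "random"):
    gb = preconditioning_write_gb(256, workload)
    print(f"256GB SSD, {workload} writes: write about {gb:.0f}GB first")
```

For the 256GB example drive, that works out to 256GB of sequential writes or up to 768GB of random writes before the numbers are worth recording.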

For additional information on this topic, you can review my presentation from Flash Memory Summit 2013, “Don’t let your favorite benchmarks lie to you.”

Kent – This article leaves me with no understanding of how TRIM fits in? I thought that was ‘periodic garbage collection’?

Your post here leaves me with the impression that drives will ‘reach saturation’ and stay there forever?

I thought that after a full TRIM, the SSD was effectively ‘FOB’ (‘Fresh Out of Box’), and that ‘garbage collection’ was an ongoing background process?

Your post here leaves me to conclude ‘SSD performance is a complete joke’? I’m sure that’s not what you intended.

Thanks for your great questions. I cover TRIM in great detail in my two other articles, “Did you know HDDs do not have a Delete command?” and “Can data reduction technology substitute for TRIM in an SSD and drain the invalid data away?”

In summary, TRIM is a tool the operating system uses to tell the SSD certain information is no longer needed. TRIM spares the SSD from having to save deleted data during garbage collection. The testing method I describe focuses on filling an SSD to capacity so you can see how all SSDs operate in that state. You can also set up tests that use an SSD at less than 100% capacity. The saturation is relative to the over provisioning. TRIM allows the SSD to free up space held by invalid data. If you save only 80GB of data on a 120GB SSD that supports TRIM, then you will have 40GB of dynamic over provisioning to be combined automatically with the manufacturer’s original over provisioning of about 16GB. That extra over provisioning greatly improves performance, which I discuss in my blog “Gassing up your SSD.”
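The over provisioning arithmetic in that example can be sketched in a few lines. This is only an illustration: the function name is hypothetical, and it assumes the drive supports TRIM and that all unused user space has actually been trimmed.

```python
def total_over_provisioning_gb(user_capacity_gb: float,
                               stored_gb: float,
                               factory_op_gb: float) -> float:
    """Dynamic over provisioning (trimmed free space) plus the factory-set amount."""
    if stored_gb > user_capacity_gb:
        raise ValueError("cannot store more than the user capacity")
    dynamic_op_gb = user_capacity_gb - stored_gb  # free space reclaimed via TRIM
    return dynamic_op_gb + factory_op_gb


# Figures from the example above: 120GB SSD, 80GB stored, about 16GB factory OP.
print(total_over_provisioning_gb(120, 80, 16))   # 56
# The same drive kept completely full has only the factory amount left.
print(total_over_provisioning_gb(120, 120, 16))  # 16
```

The second call is the saturated case the testing method focuses on: with no free space, only the factory over provisioning remains.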

Yes, when the operating system completely TRIMs the SSD, you effectively get an FOB state. Of course, testing the drive immediately after TRIM is complete only simulates performance since no user data is stored on the SSD. Is that ever a real situation? Not with any computer I have seen.

All SSDs have garbage collection, and the biggest factor affecting speed is how much over provisioning space is available, both factory-set and dynamic from TRIM.

I will post a blog in a few weeks on foreground and background garbage collection to provide more insights into that aspect of the process.

As to your comment on SSD speed, my point is you have to pay attention to the test details to know if the results are really indicative of real-world performance. The biggest pitfall is failing to set up the garbage collection process properly before testing.

* Gassing up your SSD

* Data reduction technology and TRIM

* SSDs need TRIM

I look forward to the upcoming posts. TIA.

Certainly your underlying point that ‘benchmarks in general can be deceiving’ is true, whether for disks or other components. Personally, I take them as ‘comparative.’ Test A against devices 1,2 & 3 can be a basis for evaluation.

“It’s important for reviewers to recognize that, with a new SSD, garbage collection is inactive until at least one full physical capacity of data has been sequentially written to the device,” as opposed to TRIM, which is fired by the OS, right?

IOW, I envision TRIM as continuously (OK, intermittently, using spare system time) working to keep the SSD somewhere between pure 100% FOB and 100% saturation. This would be a ‘normal operating state.’ Saturation, as in your graph in red, would not be. So what is the point of testing in that condition (for the typical user, not the lab rat)?

Does your post above imply ‘a steady, never-ending stream of writes until 100% of the SSD is filled, OP is all used up, and write amplification sets in, etc.’? That’s not going to happen in the real world.

By “normal operation of an SSD in its own environment,” aren’t you describing a status that does not occur in real life? An SSD exists in a world with an OS, not by itself. That OS is reading, writing, and TRIM’ing it intermittently.

“With SSDs, ‘normal’ is when garbage collection is operating” and ‘when TRIM is active, and there is sufficient OP that garbage collection can wait.’ No?

I guess my confusion is the difference between ‘garbage collection’ and TRIM. Which is probably in your next article 🙂

BTW, I’m not trying to be dense. I just have a gift for it.

I think your questions would be addressed by my other TRIM blogs mentioned above. TRIM is sent by the operating system, but only after the OS or user deletes data. TRIM is not sent when the OS or user directly overwrites or updates previous data.
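To illustrate the difference between deleting and overwriting, here is a deliberately simplified toy model. The classes `ToySSD` and `ToyOS` are hypothetical and exist only for this sketch; they track nothing but which logical block addresses (LBAs) the drive still believes hold valid data, and they ignore pages, blocks, and garbage collection itself.

```python
class ToySSD:
    """Hypothetical drive model: remembers which LBAs it must preserve."""

    def __init__(self):
        self.valid_lbas = set()

    def write(self, lba):
        # A write or overwrite leaves exactly one valid copy of this LBA.
        self.valid_lbas.add(lba)

    def trim(self, lba):
        # TRIM tells the drive this LBA no longer holds needed data.
        self.valid_lbas.discard(lba)


class ToyOS:
    """Hypothetical OS model: only a delete can produce a TRIM."""

    def __init__(self, ssd, trim_enabled):
        self.ssd = ssd
        self.trim_enabled = trim_enabled
        self.files = {}

    def save(self, name, lbas):
        self.files[name] = list(lbas)
        for lba in lbas:
            self.ssd.write(lba)

    def delete(self, name):
        lbas = self.files.pop(name)
        if self.trim_enabled:
            for lba in lbas:
                self.ssd.trim(lba)


def valid_lbas_after_delete(trim_enabled):
    ssd = ToySSD()
    toy_os = ToyOS(ssd, trim_enabled)
    toy_os.save("report.doc", [0, 1, 2, 3])
    toy_os.save("report.doc", [0, 1, 2, 3])  # overwrite in place: no TRIM sent
    toy_os.delete("report.doc")
    return len(ssd.valid_lbas)


print(valid_lbas_after_delete(trim_enabled=False))  # 4
print(valid_lbas_after_delete(trim_enabled=True))   # 0
```

Without TRIM, the toy drive still treats all four deleted LBAs as valid and would keep preserving them during garbage collection; with TRIM, it knows they can be discarded.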

My presentation from the Flash Memory Summit linked above goes into detail about how differently the performance can be before and after garbage collection starts. You are correct that performance is higher if you have an SSD with only 10% capacity filled. However, at the cost of flash memory today, SSDs are typically pretty fully loaded, which is why the benchmarks need to show the performance when it is full.

I guess I would argue the relevance of this statement:

“However, once garbage collection starts, performance plunges and then takes up to 3 hours before the true performance of 25 MB/s (a 90% drop) is finally evident.”

I think I understand the scenario: doing something like a secure erase, which is a continuous stream of 0’s or 1’s from bit 0 to bit (last) of the disk, will show the performance drop you indicate in red after a period of time (in your graph, degradation starts at ~17 minutes and the worst-case red level starts at 3 hours). No TRIM, garbage collection competing with new writes.

However, that is a condition that will never exist for a user, and I don’t see the relevance of testing it for a consumer review.

I think there is confusion over how secure erase is working here. Let me clarify that. Secure erase on an SSD will simply erase the flash map lookup information (Flash Translation Layer, or FTL). It is like a Super TRIM where all data is no longer valid and every block has nothing useful to garbage collect (move to new blocks). You described a more advanced form sometimes called secure erase with overwrite. It’s used in high-security and military environments that need to ensure data is unrecoverable after being erased.

My blog and discussion above reference the standard secure erase. The graph in my blog shows performance of random data writes from a user, not the secure erase, which was completed before the graph starts. You can see that write performance drops significantly once garbage collection kicks in, after one full capacity write (blue line). After the second full capacity write (green line), performance is much lower. Then after the third full capacity write (orange line), the drive finally hits steady state (red line).

The red line reflects the lifetime performance level of that SSD for random writes if you keep it at full capacity with user data. TRIM has no impact on an SSD that stores full capacity.

Only when you store less than full capacity does the steady state for random writes perform higher than the level shown in red. The reason is directly related to the over provisioning. When the user stores less than 100% capacity, the SSD automatically converts the free space to dynamic over provisioning. That is covered in the “Gassing up your SSD” blog linked above and the Flash Memory Summit presentation in that blog.

I showed only random writes above. Sequential writes have a higher steady state performance level than random writes. I have images of that in the original Flash Memory Summit presentation linked at the end of this blog.

I hope I have clarified the relevance and importance of this information to consumer SSD reviews. I do recommend reviewing all my prior blogs and the many linked Flash Memory Summit presentations in those blogs. They provide significant background and shed light on many SSD mysteries.

Thanks again. Your statement “The red line reflects the lifetime performance level of that SSD for random writes if you keep it at full capacity with user data” clarifies it for me.

OK, understood. I can see that as relevant to a consumer who indeed keeps the drive ‘slam full,’ with insufficient free space and OP. Alongside other tests, as is common now, showing ‘disk less than full’ scenarios. Such as in the first 16 minutes of your graph above.

“TRIM has no impact on an SSD that stores full capacity.” Understood. It can’t. No empty blocks to inform the drive about.

Thanks again, Kent. It’s pretty remarkable for someone like me to have access to a conversation with someone like you.

Bottom line for me can be reduced to ‘I’ll be freaking amazed with my new SSD.’ Especially since ‘Brand X’ comes in a 1TB configuration, with included software to use 1GB of system DRAM as a write cache RAM disk (insane benchmarks).

I look forward to understanding garbage collection better in your future posts, especially as re: when it runs in ‘normal use patterns’, etc. And the relation to TRIM, especially as re: run time. As I understand it today, TRIM basically ‘updates the map’ the GC will be guided by, as far as ‘LBA’s that can be ignored in GC’? And the relation of all that to wear leveling.

I’m just trying to understand the details. I do stupid crazy things with my machine. Like carrying 4 or 5 versions of Visual Studio and SQL Server and Office. As a contract developer, if a client says ‘I want this project done in VS 2005 with SQL 2012, and Office 2010, because that’s what we have here,’ I have to be able to do it.

I keep my ‘four most commonly used’ VM’s on an SSD now, including a 2008R2 server VM online, and maybe 30 VM’s on a spinner for storage. Like Windows ME, and Windows 7 built in Portuguese. I had to build those for testing certain client issues. Plus Linux flavors, Apache servers, etc. I find the SSD just about compensates for ‘Virtual machine overhead penalty,’ and makes them on SSD about as fast as my base machine on spinner. The new 1TB SSD will be my new base C drive, and also hold the ‘main 4 VM’s.’ Then my 128GB SSD can find new uses elsewhere. So I want to make sure I understand how to set things up best. Space will not be an issue with 1TB nominal.

So am I right that you will get better performance from an SSD if you buy one that has significantly higher capacity than you actually need rather than one which you almost fill?

Bingo! The downside is you pay for the flash that you don’t use, so it is important you balance the cost of the extra flash with your improved performance.