Artificial intelligence (AI) has rapidly evolved to the point where it plays an integral role in numerous sectors, from healthcare to finance and beyond. At the heart of AI’s success is the ability to process massive datasets in ways that produce reliable results.

It’s a given that winning companies want to use AI or already use it. But implementing AI is not enough: they need AI models, processes, and results that they can trust.

One critical process that enables the development of AI models is checkpointing. This primer explains what checkpointing is, how it fits into AI workloads, and why it’s essential for building trustworthy AI—that is, AI data workflows that use dependable inputs and generate reliable insights.

What is checkpointing?

Checkpointing is the process of saving the state of an AI model at specific, short intervals during its training. AI models are trained on large datasets through iterative processes that can take anywhere from minutes to months. The duration of a model’s training depends on the complexity of the model, the size of the dataset, and the computational power available. During this time, models are fed data, parameters are adjusted, and the system learns how to predict outcomes based on the information it processes.

Checkpoints act like snapshots of the model’s current state (its data, parameters, and settings) at many points during training. Saved to storage devices every minute or every few minutes, these snapshots give developers a record of the model's progression and keep valuable work from being lost to unexpected interruptions.
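To make the idea concrete, here is a minimal Python sketch. It assumes the training state fits in a plain dictionary (a step counter, parameters, and settings) and uses the standard library's `pickle` for serialization; real training frameworks ship their own save and load utilities, and `save_checkpoint` and `load_checkpoint` are hypothetical helper names. The new checkpoint is written to a temporary file and then renamed over the old one, so an interruption during the write leaves the previous checkpoint intact.

```python
import os
import pickle
import tempfile


def save_checkpoint(state: dict, path: str) -> None:
    """Write a snapshot of training state to `path` atomically (hypothetical helper)."""
    directory = os.path.dirname(os.path.abspath(path))
    # Write to a temporary file in the same directory first...
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "wb") as f:
            pickle.dump(state, f)
            f.flush()
            os.fsync(f.fileno())  # push the bytes to the storage device
        # ...then swap it into place, so a crash never leaves a half-written checkpoint.
        os.replace(tmp_path, path)
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def load_checkpoint(path: str) -> dict:
    """Read a snapshot written by save_checkpoint (hypothetical helper)."""
    with open(path, "rb") as f:
        return pickle.load(f)


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "checkpoint.pkl")
    state = {
        "step": 1200,
        "params": {"w": [0.12, -0.4], "b": 0.03},
        "settings": {"learning_rate": 1e-3, "batch_size": 32},
    }
    save_checkpoint(state, path)
    print(load_checkpoint(path) == state)  # True
```

Because `pickle` can execute code while loading, this pattern is only appropriate for checkpoints from trusted sources.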

Key benefits of checkpointing.

- Power protection. One of the most immediate and practical benefits of checkpointing is safeguarding training jobs from system failures, power outages, or crashes. If an AI model has been running for days and the system experiences a failure, starting from scratch would be an enormous waste of time and resources. Checkpoints ensure that the model can resume from the last saved state, eliminating the need to repeat training from the beginning. This is especially valuable for AI models that may take weeks or even months to complete their training.

- Model improvement and optimization. Checkpointing doesn’t just protect against failures; it also enables fine-tuning and optimization. AI developers often experiment with various parameters, datasets, and configurations to improve a model's accuracy and efficiency. By saving checkpoints throughout training, developers can analyze past states, track the model’s progression, and adjust parameters to take training in a different direction. They may tweak graphics processing unit (GPU) settings, alter data inputs, or change the model architecture. Checkpoints provide a way to compare different runs and identify where changes improve or degrade performance. As a result, developers can optimize AI training and create more robust models.

- Legal compliance and intellectual property protection. As AI regulations evolve globally, organizations are increasingly required to maintain records of how AI models are trained to comply with legal frameworks and ensure the protection of intellectual property (IP). Checkpointing allows companies to demonstrate compliance by providing a transparent record of the data and methodologies used to train their models. This helps safeguard against legal challenges and ensures that the training process can be audited, should the need arise. In addition, saving checkpoint data protects the IP involved in model training, such as proprietary datasets or algorithms.

- Building trust and ensuring transparency. The importance of transparency in AI systems cannot be overstated, especially as AI continues to be integrated into decision-making processes in industries such as healthcare, finance, and autonomous vehicles. One of the keys to building trustworthy AI is ensuring that the model's decisions can be explained and traced back to specific data inputs and processing steps. Checkpointing contributes to this transparency by providing a record of the model’s state at each stage of training. These saved states allow developers and stakeholders to trace the progression of the model, verify that its outputs are consistent with the data it was trained on, and ensure that there is accountability in how decisions are made.
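The resume behavior described under power protection can be sketched with a toy training loop. Everything here is hypothetical: `train`, `SimulatedCrash`, the ten-step checkpoint interval, and the single "weight" value standing in for real model parameters. When a checkpoint exists, the loop reloads it instead of starting at step zero, so a failure costs at most the work done since the last save.

```python
import os
import pickle
import tempfile
from typing import Optional, Tuple

CHECKPOINT_EVERY = 10  # hypothetical interval, in training steps


class SimulatedCrash(Exception):
    """Stands in for a power outage, crash, or node failure."""


def train(total_steps: int, ckpt_path: str,
          crash_at: Optional[int] = None) -> Tuple[dict, int]:
    """Run a toy training loop, resuming from ckpt_path if a checkpoint exists.

    Returns the final state and the number of steps executed in this call.
    """
    if os.path.exists(ckpt_path):
        with open(ckpt_path, "rb") as f:
            state = pickle.load(f)           # resume from the last saved state
    else:
        state = {"step": 0, "weight": 0.0}   # no checkpoint yet: start from scratch

    executed = 0
    while state["step"] < total_steps:
        if crash_at is not None and state["step"] == crash_at:
            raise SimulatedCrash(f"failure at step {state['step']}")
        state["weight"] = 0.9 * state["weight"] + 1.0  # placeholder for a real update
        state["step"] += 1
        executed += 1
        if state["step"] % CHECKPOINT_EVERY == 0:
            # Plain overwrite for brevity; writing to a temp file and renaming is safer.
            with open(ckpt_path, "wb") as f:
                pickle.dump(state, f)
    return state, executed


if __name__ == "__main__":
    ckpt = os.path.join(tempfile.mkdtemp(), "run.ckpt")
    try:
        train(100, ckpt, crash_at=37)  # fails at step 37; last checkpoint is step 30
    except SimulatedCrash as err:
        print("interrupted:", err)
    state, executed = train(100, ckpt)  # resumes from step 30
    print(state["step"], executed)      # 100 70
```

After the simulated failure, the second call redoes only the seven steps since the step-30 checkpoint and ends in exactly the same state as an uninterrupted run.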

As AI applications expand beyond traditional data centers, they increasingly call for storage that delivers both massive capacity and high performance. Whether in the cloud or on premises, AI workflows depend on both qualities, and both are critical to supporting checkpointing.

In AI data centers, processors such as GPUs, central processing units (CPUs), and tensor processing units (TPUs) are tightly coupled with high-performance memory and solid-state drives (SSDs) to form powerful compute engines. These configurations handle the heavy data loads involved in training, and they provide the fast access needed to save checkpoints in real time as models progress.

As data flows through these systems, checkpoints and other critical information are retained in networked storage clusters or object stores. Built predominantly on mass-capacity hard drives, these clusters ensure that checkpoints can be preserved over long periods, supporting the needs for both scalability and compliance. This layered storage infrastructure enables checkpointing to work efficiently, balancing quick access with long-term data retention.

How checkpointing works in practice.

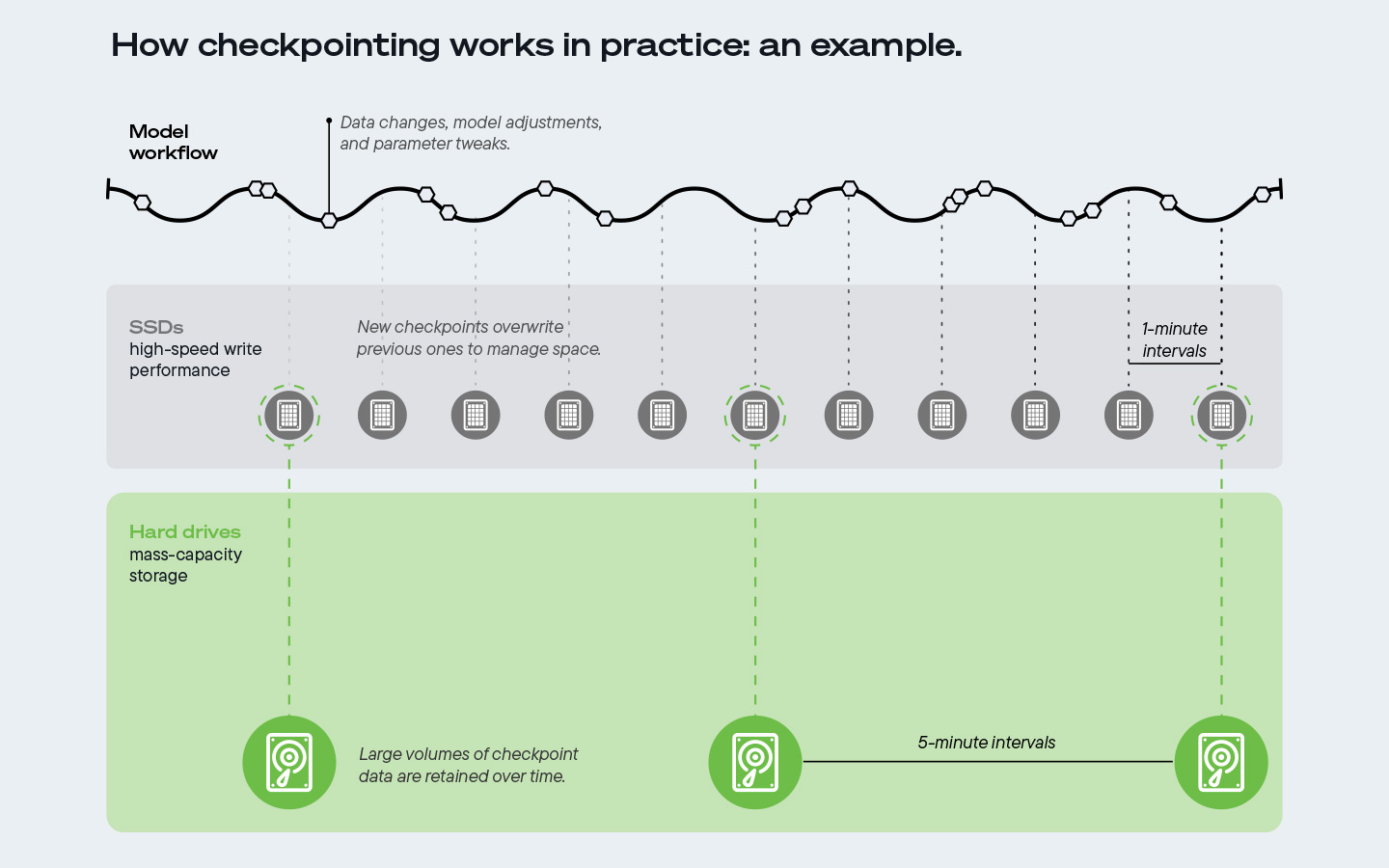

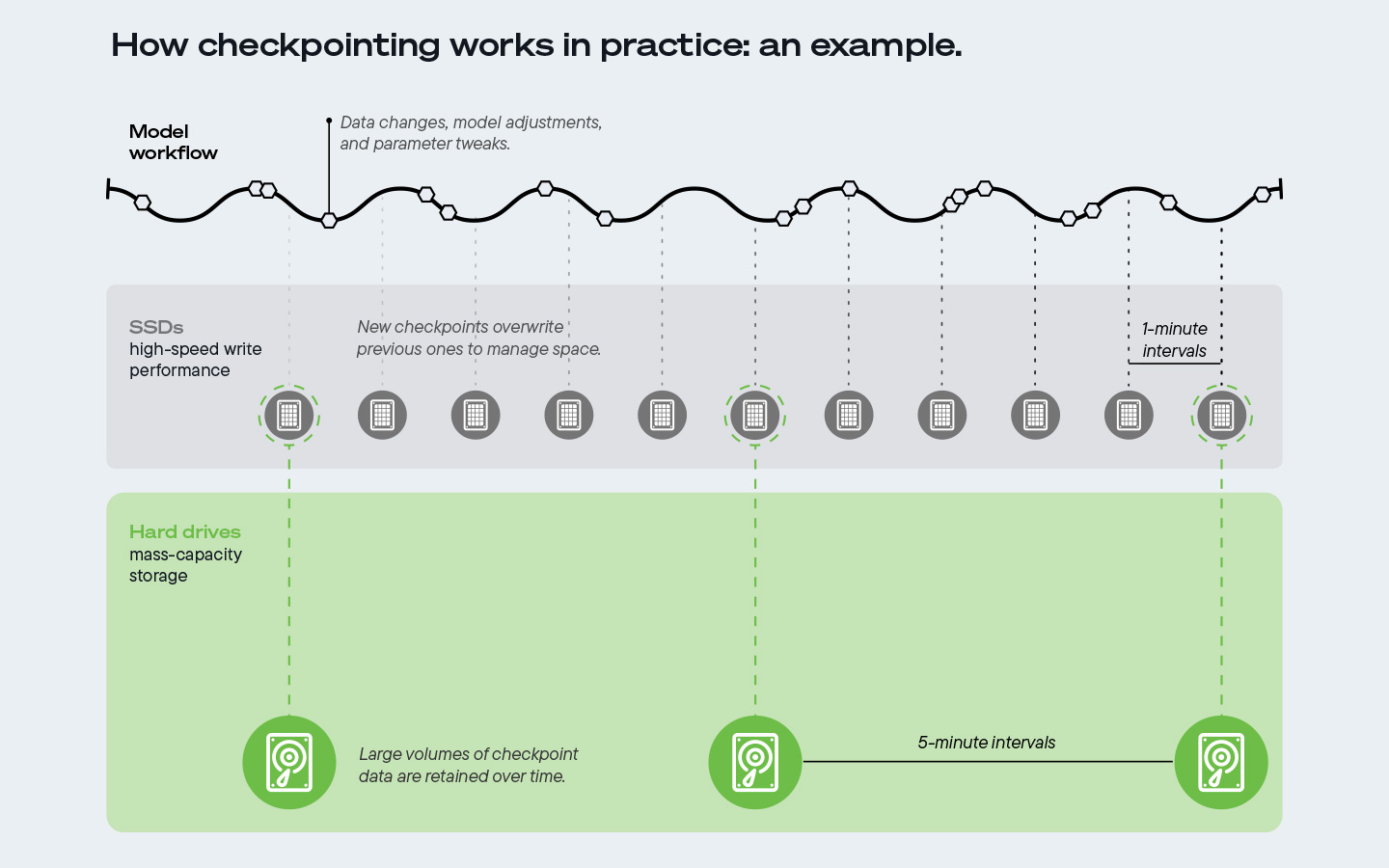

Checkpointing typically happens at regular intervals, ranging from every minute to every few minutes, depending on the complexity and needs of the training job.

A common practice is to write checkpoints to SSDs every minute or so, because SSDs offer the high write performance needed to save state quickly during active training. SSDs aren’t cost-effective for long-term, mass-capacity storage, however, so each new checkpoint overwrites the previous one to conserve space.

Since AI training jobs often generate massive amounts of data over extended periods, mass-capacity storage is essential. For example, AI developers may save a checkpoint to hard drives every five minutes or so, and these drives play a critical role in retaining large volumes of checkpoint data over time. Because SSDs cost more than six times as much per terabyte as hard drives on average, hard drives provide the most scalable, economical solution and are the only practical option for the large-scale data retention required to ensure AI is trustworthy.
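The two-tier pattern can be sketched as follows, assuming two local directories stand in for SSD-backed scratch space and hard-drive-backed storage (in practice the capacity tier is often a networked storage cluster or object store, which this sketch does not model). `write_checkpoint` and `HDD_EVERY` are hypothetical names; the interval of five follows the example in the text. The copy is synchronous here for simplicity; a production system might offload it in the background so training is not blocked.

```python
import os
import shutil
import tempfile

HDD_EVERY = 5  # keep roughly every fifth one-minute checkpoint, per the example above


def write_checkpoint(ckpt_index: int, payload: bytes, ssd_dir: str, hdd_dir: str) -> None:
    """Write one checkpoint to the fast tier and, periodically, to the capacity tier."""
    # Fast tier: a single slot that each new checkpoint overwrites to save space.
    ssd_path = os.path.join(ssd_dir, "latest.ckpt")
    tmp_path = ssd_path + ".tmp"
    with open(tmp_path, "wb") as f:
        f.write(payload)
    os.replace(tmp_path, ssd_path)  # atomic swap: the old slot survives a failed write

    # Capacity tier: every HDD_EVERY-th checkpoint is copied under a unique name and kept.
    if ckpt_index % HDD_EVERY == 0:
        shutil.copyfile(ssd_path, os.path.join(hdd_dir, f"step-{ckpt_index:08d}.ckpt"))


if __name__ == "__main__":
    ssd, hdd = tempfile.mkdtemp(), tempfile.mkdtemp()
    for minute in range(1, 13):  # twelve simulated one-minute checkpoints
        write_checkpoint(minute, f"state@{minute}".encode(), ssd, hdd)
    print(os.listdir(ssd))          # ['latest.ckpt']
    print(sorted(os.listdir(hdd)))  # ['step-00000005.ckpt', 'step-00000010.ckpt']
```

After twelve simulated minutes, the fast tier holds only the latest checkpoint, while the capacity tier has accumulated the copies from minutes five and ten.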

Additionally, unlike SSDs, whose flash memory cells wear out with repeated write cycles, hard drives store data magnetically and can sustain continuous rewriting without that form of wear. This durability helps hard drives maintain data reliability over the long term, allowing organizations to retain checkpoints for as long as they need and to revisit and analyze past training runs long after a model has been deployed, supporting both robust AI development and compliance.

The infinite AI data loop and its role in AI workflows.

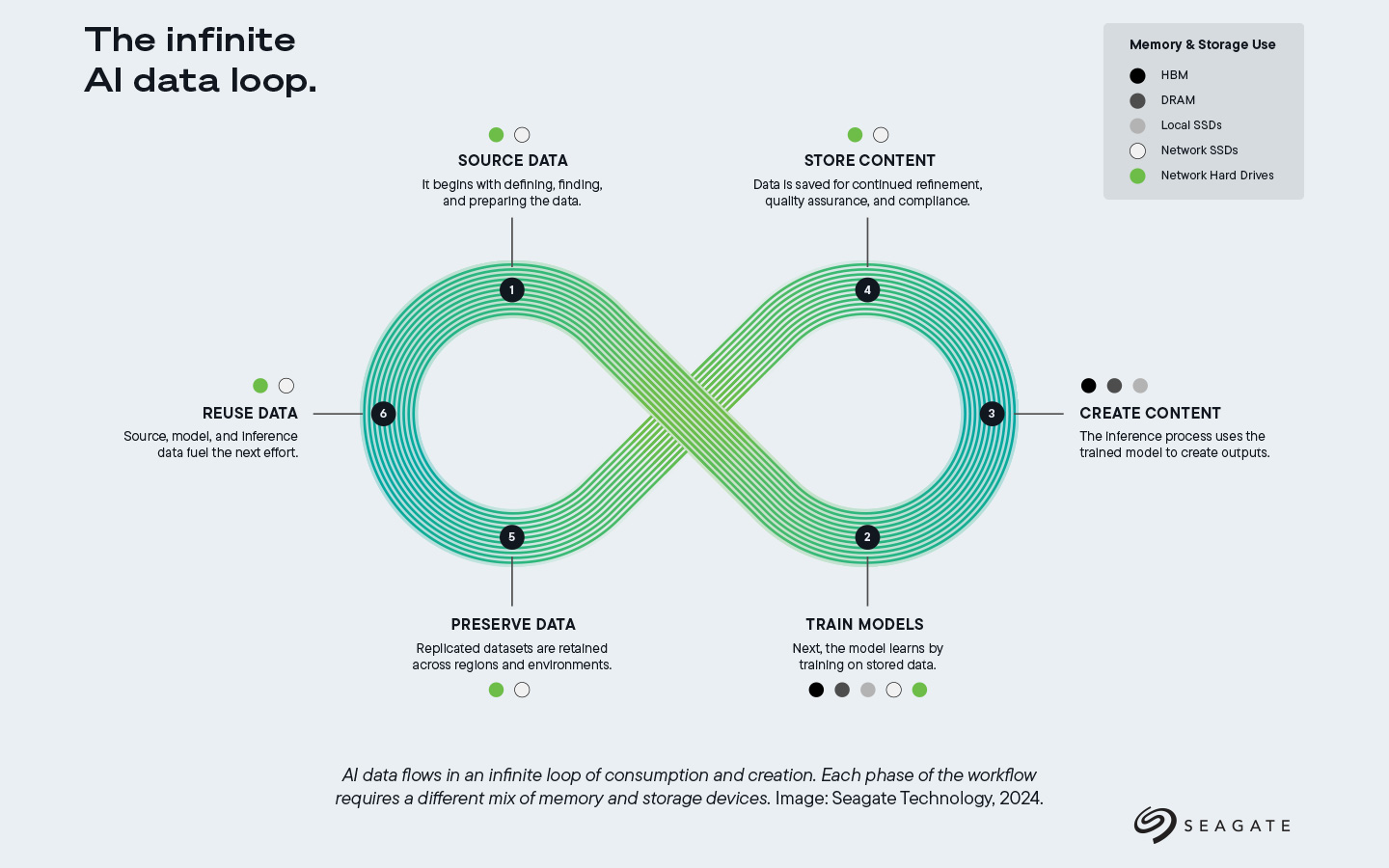

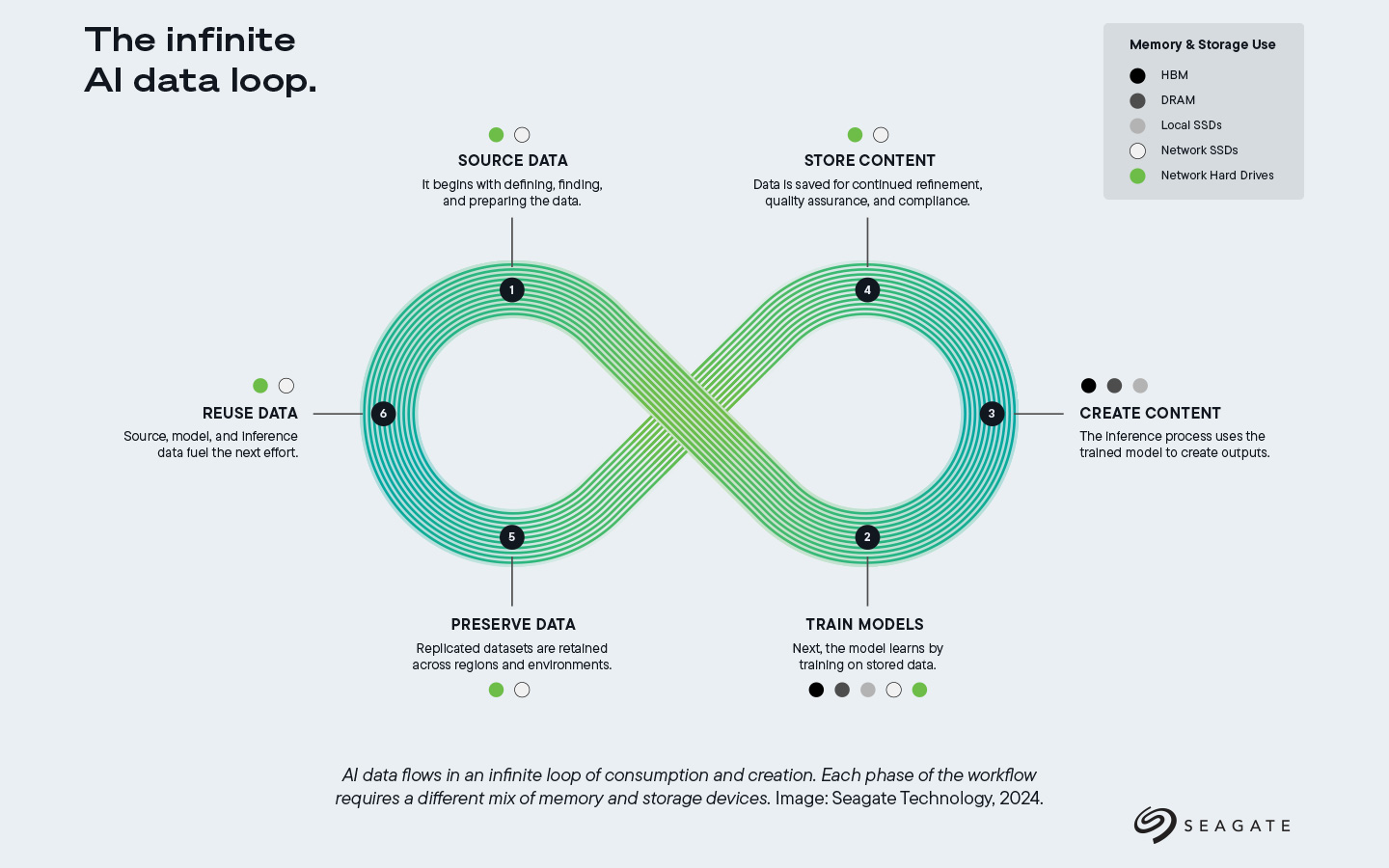

AI development can be understood as a cyclical process, often referred to as the AI infinite loop, that emphasizes the continuous interaction among data sourcing, model training, content creation, content storage, data preservation, and reuse. In this loop, data fuels AI models, and the outputs of one stage become inputs for later stages, so the models are refined iteratively over time.

The process begins with source data, where raw datasets are gathered and prepared for training. Once sourced, the data is used to train models, which is where checkpointing comes into play. As described earlier, checkpointing serves as a safeguard during model training, ensuring that AI developers can save progress, avoid losing work due to interruptions, and optimize model development. After the models are trained, they are used to create content through inference tasks such as generating images or analyzing text. These outputs are then stored for future use, compliance, and quality assurance, before the data is eventually preserved and reused to fuel the next iteration of the AI model.

In this infinite loop, checkpointing is an essential element, specifically within the model training phase. By storing model states and preserving data throughout the loop, AI systems can become more reliable, transparent, and trustworthy with each cycle.

Why hard drives are essential for AI checkpointing.

The storage demands of AI systems are immense, and as models become larger and more complex, the need for scalable, cost-efficient storage grows. Hard drives, particularly in data center architectures, serve as the backbone of AI checkpoint storage for several reasons:

- Scalability. AI models can generate petabytes of data, and thanks to groundbreaking areal density advances, hard drives offer the necessary capacity to store checkpoints from these large-scale training jobs over the long term.

- Cost efficiency. Hard drives cost far less per terabyte than SSDs, which average more than six times as much, making hard drives a more viable way to store massive datasets and checkpoints without incurring prohibitive costs.

- Power efficiency and sustainability. Hard drives consume one-quarter the operating power per terabyte of SSDs, resulting in significant energy savings. They also carry one-tenth the embodied carbon per terabyte, making them a more sustainable choice for large-scale AI checkpoint storage in data centers.

- Longevity. Hard drives are designed for long-term data retention, ensuring that checkpoint data remains accessible for as long as needed. This is critical for ensuring that AI models can be revisited, verified, and improved over time.
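The per-terabyte ratios above can be turned into a quick comparison for an archive of a given size. This sketch uses normalized units, where 1.0 is the SSD figure for one terabyte, rather than real prices, watts, or carbon values, none of which the text provides. The `Footprint` type and function names are hypothetical, and the cost ratio is set to 6.0 as a conservative floor, since the text says "over 6:1".

```python
from dataclasses import dataclass

# Ratios quoted in the text, expressed as (SSD figure) / (hard drive figure) per terabyte.
COST_RATIO = 6.0              # "over 6:1 on average", so 6.0 is a conservative floor
POWER_RATIO = 4.0             # hard drives: one-quarter the operating power per TB
EMBODIED_CARBON_RATIO = 10.0  # hard drives: one-tenth the embodied carbon per TB


@dataclass(frozen=True)
class Footprint:
    cost: float
    operating_power: float
    embodied_carbon: float


def ssd_footprint(capacity_tb: float, ssd_per_tb: Footprint) -> Footprint:
    """Total footprint of storing capacity_tb on SSDs, given per-TB SSD figures."""
    return Footprint(
        cost=capacity_tb * ssd_per_tb.cost,
        operating_power=capacity_tb * ssd_per_tb.operating_power,
        embodied_carbon=capacity_tb * ssd_per_tb.embodied_carbon,
    )


def hdd_footprint(capacity_tb: float, ssd_per_tb: Footprint) -> Footprint:
    """Same capacity on hard drives, derived by dividing SSD figures by the quoted ratios."""
    ssd = ssd_footprint(capacity_tb, ssd_per_tb)
    return Footprint(
        cost=ssd.cost / COST_RATIO,
        operating_power=ssd.operating_power / POWER_RATIO,
        embodied_carbon=ssd.embodied_carbon / EMBODIED_CARBON_RATIO,
    )


if __name__ == "__main__":
    # Normalized units: 1.0 = the SSD figure for one terabyte (not real prices or watts).
    per_tb = Footprint(cost=1.0, operating_power=1.0, embodied_carbon=1.0)
    print(ssd_footprint(600, per_tb))
    print(hdd_footprint(600, per_tb))
```

For a 600 TB checkpoint archive, the SSD tier comes to 600 normalized units on each axis, while the hard drive tier comes to 100 for cost, 150 for operating power, and 60 for embodied carbon.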

As we noted earlier, in some AI workloads, checkpoints are written every minute to SSDs, but only every fifth checkpoint is pushed to hard drives for long-term retention. This hybrid approach optimizes both speed and storage efficiency. SSDs handle immediate performance needs, while hard drives retain the data needed for compliance, transparency, and long-term analysis.
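The schedule also determines how much capacity each tier needs. The sketch below assumes a hypothetical 14-day run and a hypothetical 0.5 TB checkpoint; neither figure comes from the text, and `retained_checkpoint_storage` is an illustrative name. Because the SSD slot is overwritten, its footprint stays at one checkpoint, while the hard drive tier grows with the length of the run.

```python
def retained_checkpoint_storage(run_minutes: int, ckpt_size_tb: float,
                                ssd_interval_min: int = 1, hdd_every: int = 5) -> dict:
    """Estimate checkpoint writes and retained capacity for the SSD-plus-HDD schedule."""
    ssd_writes = run_minutes // ssd_interval_min  # one checkpoint per SSD interval
    hdd_copies = ssd_writes // hdd_every          # every hdd_every-th checkpoint is kept
    return {
        "ssd_writes": ssd_writes,
        # One overwritten slot; more headroom is needed if writes are staged atomically.
        "ssd_capacity_tb": ckpt_size_tb,
        "hdd_copies": hdd_copies,
        "hdd_capacity_tb": hdd_copies * ckpt_size_tb,
    }


if __name__ == "__main__":
    # Hypothetical: a 14-day run with 0.5 TB checkpoints.
    print(retained_checkpoint_storage(14 * 24 * 60, 0.5))
```

Under those assumptions, the run writes 20,160 checkpoints to SSD and retains 4,032 of them on hard drives, about 2,016 TB (roughly 2 PB). Doubling either the run length or the checkpoint size doubles the hard drive figure.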

The role of checkpoints in trustworthy AI.

In the broader context of AI development, the role of checkpoints is pivotal in ensuring that AI outputs are legitimate. “Trustworthy AI” refers to the ability to build systems that are not only accurate and efficient but also transparent, accountable, and explainable. AI models must be reliable and able to justify their outputs.

Ultimately, checkpoints provide the mechanism through which AI developers can “show their work.” By saving the model’s state at multiple points throughout training, checkpoints let developers track how the model's decisions took shape, verify the integrity of its data and parameters, and identify any issues or inefficiencies that need correction.

Furthermore, checkpoints help build trust by making AI systems auditable. Current and emerging regulatory frameworks increasingly require that AI systems be explainable and that their decision-making processes be traceable. Checkpoints help organizations meet these requirements by preserving detailed records of the model’s training process, data sources, and development paths.
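Checkpoints can only support an audit if they have not changed since they were written. One common way to check this, offered here as an assumption rather than a method the text describes, is to record a SHA-256 digest of each checkpoint file in a manifest and recompute the digests later. The helper names and the `.ckpt` suffix are hypothetical. A matching digest shows that a file is unchanged; it does not by itself explain a model's decisions or document its data sources.

```python
import hashlib
import json
import os


def sha256_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large checkpoints need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_manifest(ckpt_dir: str, manifest_path: str) -> dict:
    """Record a digest for every .ckpt file in ckpt_dir and save it as JSON."""
    manifest = {
        name: sha256_file(os.path.join(ckpt_dir, name))
        for name in sorted(os.listdir(ckpt_dir))
        if name.endswith(".ckpt")
    }
    with open(manifest_path, "w", encoding="utf-8") as f:
        json.dump(manifest, f, indent=2, sort_keys=True)
    return manifest


def verify_manifest(ckpt_dir: str, manifest_path: str) -> list:
    """Return names of checkpoints that are missing or no longer match their digest."""
    with open(manifest_path, encoding="utf-8") as f:
        manifest = json.load(f)
    problems = []
    for name, expected in manifest.items():
        path = os.path.join(ckpt_dir, name)
        if not os.path.exists(path) or sha256_file(path) != expected:
            problems.append(name)
    return sorted(problems)
```

An empty list from `verify_manifest` means every recorded checkpoint is still present and byte-for-byte identical to what was written.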

Checkpointing is an essential tool in AI workloads, playing a critical role in protecting training jobs, optimizing models, and ensuring transparency and trustworthiness. As AI continues to advance and influence decision-making across industries, the need for scalable and cost-effective storage solutions has never been greater. Hard drives are central to supporting checkpointing processes, enabling organizations to store, access, and analyze the vast amounts of data generated during AI model training.

By leveraging checkpointing, AI developers can build models that are not only efficient but also trustworthy.