Seagate’s new smart manufacturing reference architecture spots anomalies in the supply chain, improving product quality

Seagate Technology, the world’s largest manufacturer of hard drives, is resolute when it comes to ensuring reliability.

Recently, Seagate’s engineers, working with collaborators from NVIDIA and Hewlett Packard Enterprise, implemented an artificial intelligence (AI) platform on the factory floor that reduces inefficiencies and catches anomalies before they can make their way into finished products.

The smart manufacturing application improves quality control by analyzing the wafer images captured during disk drive production. As the technology is rolled out, Seagate expects gains in both efficiency and quality. In one project, at a factory in Thailand, engineers estimate a 20% reduction in cleanroom investments, a 10% reduction in manufacturing throughput time, and a return on investment of up to 300%.

Minding the Factory

What made smart manufacturing possible in the first place is the same thing that makes all kinds of smart ventures possible: the human mind, and the way it sees and makes sense of the world.

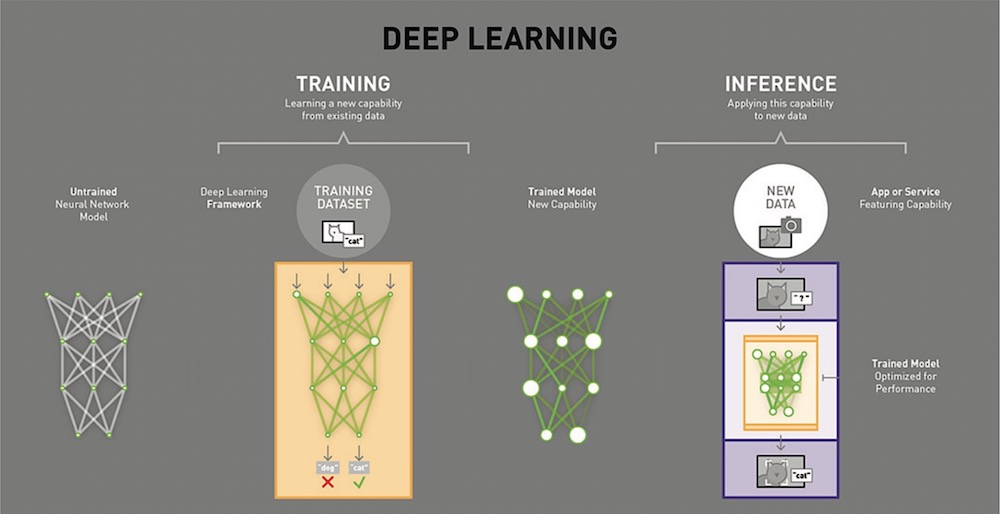

Human brains innately teach themselves to detect patterns and to take preemptive action based on what they observe. Think of it as a kind of self-programming. The brain’s networks of neurons, with their built-in knack for recognizing patterns and learning from them to avoid risk, harm, and anomalies, have long inspired programmers, engineers, and data scientists. For decades, they have worked to build algorithms modeled on those networks, known as artificial neural networks.

In 1959, Stanford University electrical engineers Bernard Widrow and Marcian Hoff created the first successful neural network and applied it to build a filter that eliminated echoes on phone lines, a system that is still in commercial use today. The field has stumbled at times since then, as discoveries failed to live up to expectations. But over time, advances in mathematics and computing enabled progress.
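The learning rule behind that echo filter, the Widrow-Hoff rule (today usually called least mean squares, or LMS), is simple enough to sketch. The following is a minimal illustration, not a production echo canceller: it assumes a synthetic, noise-free phone line whose echo is modeled by the hypothetical `ECHO_PATH` taps, and a hand-picked step size `mu`. The filter repeatedly compares its echo estimate with what the line actually returned and nudges its weights in proportion to the error.

```python
import random

def lms_echo_canceller(far_end, mic, num_taps=4, mu=0.05):
    """Adapt FIR weights with the Widrow-Hoff (LMS) rule so the filtered
    far-end signal predicts the echo in `mic`. Returns weights and residuals."""
    weights = [0.0] * num_taps
    history = [0.0] * num_taps          # recent far-end samples, newest first
    residuals = []
    for x, d in zip(far_end, mic):
        history = [x] + history[:-1]
        estimate = sum(w * h for w, h in zip(weights, history))
        error = d - estimate            # echo left over after subtracting the estimate
        # LMS update: move each weight along (error * its input sample)
        weights = [w + mu * error * h for w, h in zip(weights, history)]
        residuals.append(error)
    return weights, residuals

# Hypothetical echo path: delayed, attenuated copies of the far-end signal.
ECHO_PATH = [0.6, 0.3, 0.0, 0.1]

random.seed(0)
far_end = [random.uniform(-1, 1) for _ in range(5000)]
mic = [sum(ECHO_PATH[k] * far_end[n - k] for k in range(len(ECHO_PATH)) if n >= k)
       for n in range(len(far_end))]

weights, residuals = lms_echo_canceller(far_end, mic)
print([round(w, 2) for w in weights])  # the learned weights should end up close to ECHO_PATH
```

Because nobody tells the filter what the echo path is, it has to learn it from the signal itself, which is the same idea, in miniature, that later neural networks scaled up.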


The next great breakthrough came in 2012, when University of Toronto computer scientists Geoffrey Hinton, Ilya Sutskever, and Alex Krizhevsky won the ImageNet Large Scale Visual Recognition Challenge. Their entry, a deep convolutional neural network architecture called AlexNet that is still in use today, beat the runner-up by a margin of 10.8 percentage points. The team used deep learning and graphics processing units to train its image-classification software. ImageNet contains millions of images spanning thousands of categories, such as dogs, cats, and cars.
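The “convolutional” in AlexNet’s description refers to sliding small filters across an image and recording how strongly each patch matches. Here is a minimal sketch of that single operation in plain Python. It assumes a tiny hand-made image and a hand-picked edge-detecting kernel standing in for the weights a real network would learn; like most deep learning frameworks, it technically computes cross-correlation (the kernel is not flipped).

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the core op of a conv layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out

def relu(feature_map):
    """Keep positive responses and zero out the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]

# Tiny 5x6 image with a dark-to-bright vertical edge between columns 2 and 3.
image = [[0, 0, 0, 1, 1, 1]] * 5
# Hand-picked vertical-edge kernel; a trained network learns its own kernels.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

print(relu(conv2d(image, kernel)))
# [[0.0, 3.0, 3.0, 0.0], [0.0, 3.0, 3.0, 0.0], [0.0, 3.0, 3.0, 0.0]]
```

The strong responses appear only where the edge is. A network like AlexNet stacks many such layers, each with many learned kernels, so later layers respond to increasingly complex shapes.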

“It was a watershed moment,” recalls Bruce King, a data science technologist who heads up the smart manufacturing platform project at Seagate. “People dreamed of a breakthrough like this for decades. That breakthrough is why today Seagate’s data science, IT, and factory engineering teams are able to deploy AI on our factory floors.”

The Toronto team’s success with neural networks relied on deep learning, the branch of artificial intelligence that “attempts to mimic the activity in layers of neurons in the neocortex, the wrinkly 80% of the brain where thinking occurs,” as Robert D. Hof wrote in MIT Technology Review.
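What those “layers” amount to in software can be sketched in a few lines: each artificial neuron takes a weighted sum of its inputs, adds a bias, and passes the result through a simple nonlinearity, and each layer feeds the next. The toy network below is purely illustrative. Its weights are set by hand rather than learned, and it computes XOR, a function that a single linear layer cannot represent but two stacked layers can.

```python
def dense(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(neuron_w, inputs)) + b
            for neuron_w, b in zip(weights, biases)]

def relu(values):
    """Nonlinearity: pass positive values, zero out negatives."""
    return [max(0.0, v) for v in values]

def forward(x):
    # Hidden layer: two neurons with hand-set (not learned) weights.
    hidden = relu(dense(x, [[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0]))
    # Output layer: one neuron combining the hidden activations.
    return dense(hidden, [[1.0, -2.0]], [0.0])[0]

for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, forward(x))
# [0, 0] 0.0
# [0, 1] 1.0
# [1, 0] 1.0
# [1, 1] 0.0
```

In deep learning, the weights are not written by hand; they are adjusted automatically from training data, and real networks use many more layers and neurons.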

Deep learning, King says, was at the core of Seagate’s Project Athena, which began as an effort to improve manufacturing quality and gave rise to the Seagate Edge RX reference architecture: a real-time edge compute cluster that lets Seagate deploy machine learning capabilities on its factory floors.

Rule-Based Methods vs Deep Learning

Precision manufacturing is intricate and painstakingly iterative. On an average production day, Seagate’s factories together generate about 10 million images. Disk drive heads are manufactured using a process somewhat similar to semiconductor wafer fabrication: the wafers are cut into tiny read-write recording heads, which are then assembled into drives.
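To get a feel for why the analysis has to happen in real time, here is the back-of-the-envelope arithmetic behind that 10 million figure. It assumes, unrealistically, that images arrive evenly around the clock; actual production is burstier, so peak rates would be higher.

```python
IMAGES_PER_DAY = 10_000_000        # combined figure quoted for Seagate's factories
SECONDS_PER_DAY = 24 * 60 * 60     # 86,400

# Average arrival rate, assuming a perfectly even flow of images.
images_per_second = IMAGES_PER_DAY / SECONDS_PER_DAY
# Time budget per image if a single pipeline had to keep up on its own.
ms_per_image = 1000 / images_per_second

print(f"{images_per_second:.1f} images per second")  # 115.7 images per second
print(f"{ms_per_image:.2f} ms per image")             # 8.64 ms per image
```

At well over a hundred images a second, every second of the day, manual review of every image is out of the question, which is why the inspection has to be automated.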


According to King, producing the wafers takes months, and the process comprises about a thousand individual steps. Many of these steps are quality measurements, including various forms of image capture. When the images show a change in quality, the engineers must catch it.

State-of-the-art deep learning software can detect issues in these images more accurately than conventional rule-based tools.

What sets the new tools apart? “The algorithms themselves learn from the data what the rules are and when they’re being violated,” King says. “The engineers don’t have to program them in.” Does this make the engineers superfluous? Not at all. The new deep learning tools do not replace them; rather, they free them up to work on higher-level solutions.
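The contrast King draws, between rules an engineer writes and rules learned from data, can be illustrated with something much simpler than deep learning. In the sketch below, a deliberately simple statistical stand-in and not Seagate’s method, a hard-coded limit is compared with a “normal range” learned from known-good measurements. The measurement (a line width), the spec limit, and the sample data are all hypothetical.

```python
import statistics

# Rule-based: an engineer hard-codes the acceptable limit.
HAND_CODED_MAX_LINE_WIDTH = 5.0    # hypothetical spec limit, arbitrary units

def rule_based_flag(measurement):
    return measurement > HAND_CODED_MAX_LINE_WIDTH

# Data-driven: the rule (a normal range) is learned from known-good history.
def learn_normal_range(good_measurements, k=3.0):
    mean = statistics.fmean(good_measurements)
    std = statistics.stdev(good_measurements)
    return mean - k * std, mean + k * std

def learned_flag(measurement, normal_range):
    low, high = normal_range
    return not (low <= measurement <= high)

history = [4.0, 4.1, 3.9, 4.05, 3.95, 4.02, 3.98, 4.0]   # hypothetical good parts
normal = learn_normal_range(history)

for m in (4.03, 4.6, 5.2):
    print(m, rule_based_flag(m), learned_flag(m, normal))
# 4.03 False False   (normal part)
# 4.6 False True     (drift the hand-written rule misses)
# 5.2 True True      (gross defect both catch)
```

A deep learning model goes much further, learning what “normal” looks like directly from raw images rather than from a single number, but the principle is the same: the boundary comes from the data, not from an engineer’s hand-tuned threshold.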

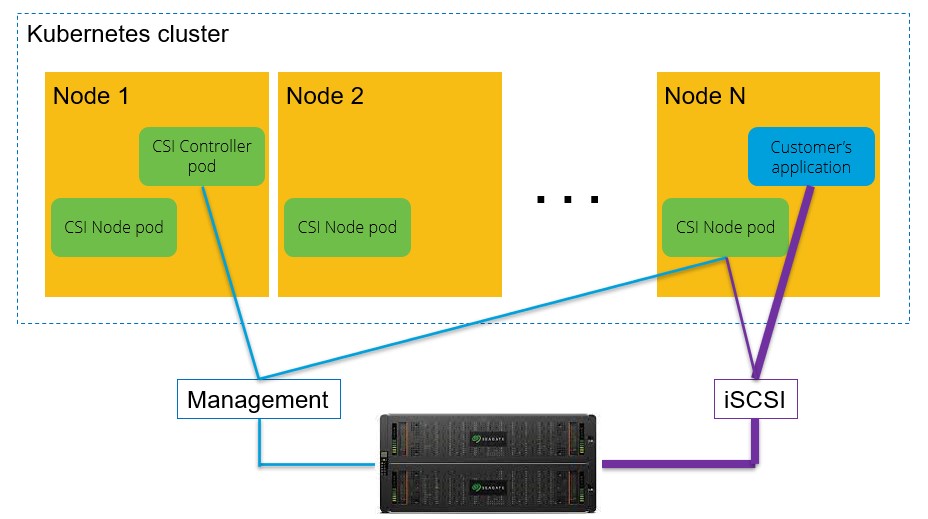

The Seagate Edge RX reference architecture draws on Seagate’s knowledge of IT infrastructure, its expertise in the storage business, its partnership with HPE, its ongoing deep learning research with NVIDIA, and the container and cluster management tools Docker and Kubernetes.

In addition to neural networks, another development that ushered in the era of smart manufacturing is the rise of graphics processing units (GPUs). GPUs such as those built by NVIDIA are well suited to deep learning because they can perform its math hundreds of times faster than central processing units. According to Nathan Brookwood, head of Insight64, GPUs are “optimized for taking huge batches of data and performing the same operation over and over very quickly, unlike PC microprocessors, which tend to skip all over the place.”
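Brookwood’s “same operation over and over” is easy to see in the core computation of a neural network layer: multiplying a batch of inputs by a weight matrix. The plain-Python sketch below runs serially on a CPU, but it shows why the work maps so well to a GPU. Every output cell is an independent dot product with identical structure, so thousands of GPU cores can each compute one at the same time. The batch and weight values are arbitrary examples.

```python
def batched_dense(batch, weights):
    """Multiply a batch of input rows by a weight matrix (no bias, no activation).

    Each out[i][j] is an independent dot product following the same
    multiply-add pattern on different data; here they are computed serially.
    """
    n_in = len(weights)
    n_out = len(weights[0])
    return [[sum(row[k] * weights[k][j] for k in range(n_in)) for j in range(n_out)]
            for row in batch]

batch = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]     # 3 inputs, 2 features each
weights = [[1.0, 0.0, -1.0], [0.5, 1.0, 2.0]]    # maps 2 features to 3 outputs

print(batched_dense(batch, weights))
# [[2.0, 2.0, 3.0], [5.0, 4.0, 5.0], [8.0, 6.0, 7.0]]
```

Because no output cell depends on any other, the computation has none of the branching and jumping around that Brookwood attributes to general-purpose processors.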


While Seagate’s implementation of deep learning is tailored to manufacturing in the data storage industry, the approach is broadly applicable to other processes, particularly those with the following characteristics:

- High-volume, high-precision, discrete manufacturing of products such as semiconductors, electronics, automotive parts, and machine parts

- High-value products manufactured with high-cost capital equipment

- Verticals generating large volumes of images that cannot be analyzed with traditional methods

- Anomaly detection in security, smart cities, and autonomous vehicles

- Highly complex manufacturing processes with many stages

- Automated manufacturing processes that can collect equipment, process, and inspection data

- Quality control and inspection-driven manufacturing processes

- Lengthy manufacturing processes

- Multisite global manufacturing

In fact, Seagate’s Lyve Data Labs is sharing the Seagate Edge RX reference architecture precisely so that others can adapt it as well.

“We at Seagate understand that as an industry we all stand to gain by sharing our learnings and letting the ecosystem improve upon it,” says Seagate’s senior director for growth verticals Rags Srinivasan. “This is why we are more than willing to share the architecture with others with similar needs.”

To learn more, check out the case study “Smart Manufacturing Moves From Autonomous to Intelligent” and the tech paper with a more in-depth look at the reference architecture “A Smart Manufacturing Reference Solution: How Deep Learning Can Boost Efficiency on Factory Floors.” Reach out with questions to lyvelabs@seagate.com.