The edge is near. Are you ready?

Every day, more devices are creating more data more often — and in more locations.

As a result, the world’s data is being managed in new places too. Data’s center of gravity is shifting from a few centralized cloud locations to a profusion of locations outside traditional datacenters.

Data will be managed closer to where the data is being created, and closer to where it provides value. Taken together, these new locations are called the edge. That’s the part of the network near the end users, where data is created by sensors, cameras, people with devices, and the entire Internet of Things (IoT).

When data is managed, analyzed and processed at the edge, its value can be understood and put to use more quickly.

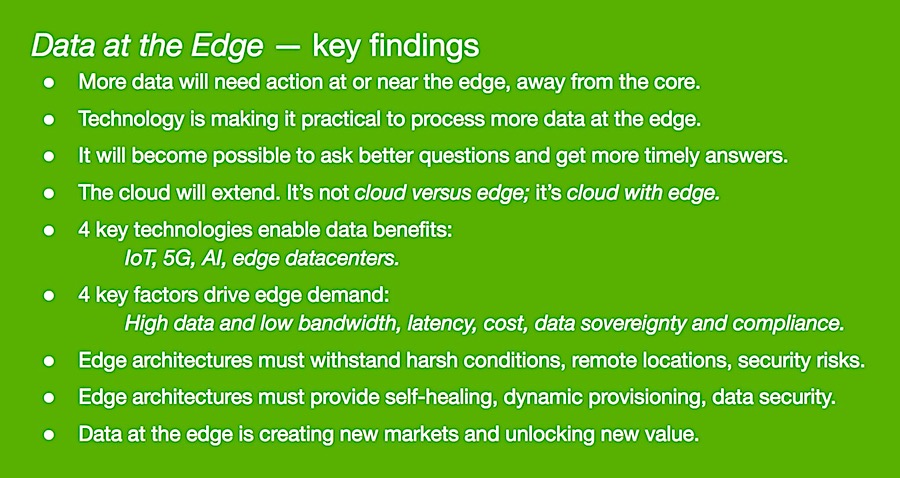

To help our partners and customers explore this shift, Seagate teamed up with a consortium of edge-computing companies to produce Data at the Edge, a new ebook about managing and activating information in a distributed world. The report also draws on research from the Seagate-sponsored Data Age 2025 study by IDC.

“The edge creates an emerging market, and everyone is trying to define what it means,” says Rags Srinivasan, senior director of growth verticals at Seagate. “We want to help readers better understand the edge, and to illustrate some of the data challenges businesses are facing today and what they should consider in managing their IT resources.”

More data needs action near the edge, away from the core

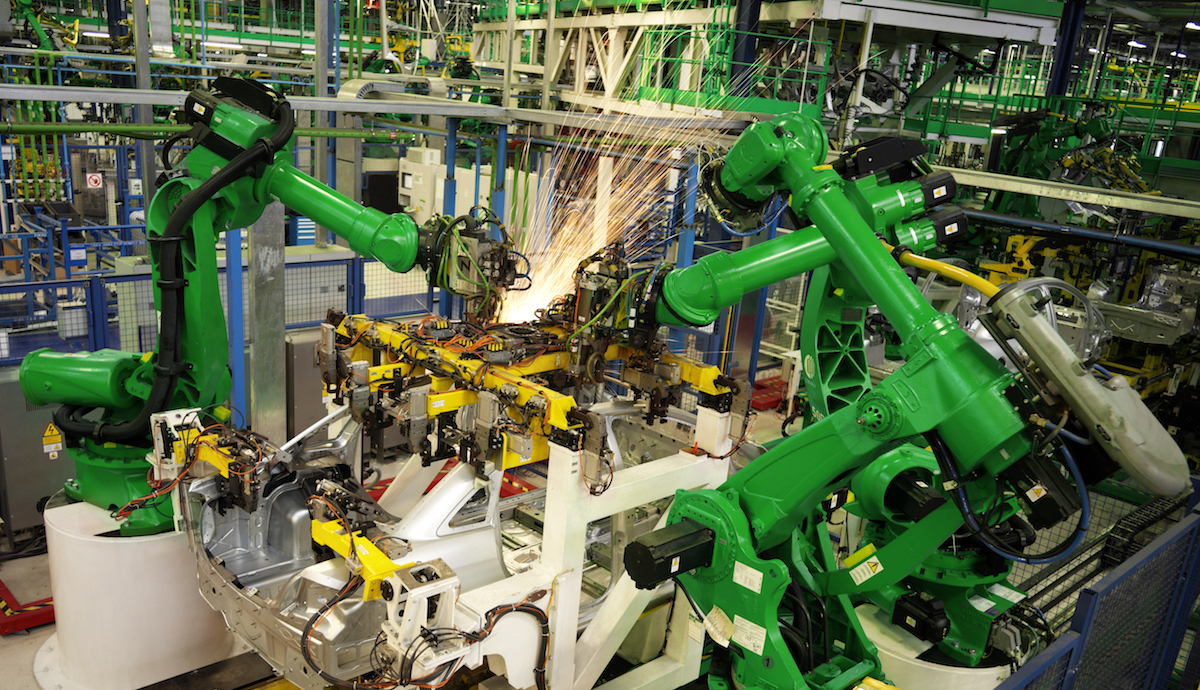

As billions of devices continue to come online, capturing and churning out zettabytes of data, today’s centralized cloud environments will need support from a new and robust IT architecture at the edge. Putting new compute, networking and storage resources in close proximity to the devices creating the data makes it possible to analyze data on the spot, delivering faster answers to important questions, such as how to improve factory robotics or whether an autonomous car should apply the brakes.

The edge can be found anywhere and everywhere, from factory floors to farms, on rooftops and on cell phone towers, and in all kinds of vehicles on land, sea and air. The edge is the outer boundary of the network — often hundreds of miles away from the nearest enterprise or cloud data center, and as close to the nearest data source as possible.

The rise of the edge will drive big demand for new and redesigned data management infrastructure. A typical smart factory, for example, will create about 5 petabytes of video data per day. A smart city with 1 million people could generate 200 petabytes of data per day, and an autonomous vehicle could produce 4 terabytes of data per day.

What will data at the edge mean for you?

What does the shift to data at the edge mean for you? How will this evolution impact the structure and function of existing core datacenters and hyperscale cloud datacenters?

With the huge buzz about new opportunities edge computing makes possible, some IT commentators have wondered if the prevailing cloud computing model will be entirely superseded by edge computing, because the edge is intrinsically more flexible and scalable across applications in every location where data is generated.

The Data at the Edge report explains that even as edge computing enables data to be leveraged more efficiently, the traditional datacenter infrastructure will remain essential. As massive amounts of data are created outside traditional data centers, the cloud will extend to the edge. It won’t be a “cloud versus edge” scenario; it will be “cloud with edge.”

“The future will be about Edge and cloud working together to help businesses make smarter decisions instantly and drive up productivity, efficiency and customer satisfaction,” says BS Teh, Seagate SVP of Global Sales and Sales Operations speaking recently with the Economic Times’ ETCIO.

The report includes an introduction from John Morris, VP and chief technology officer at Seagate. Morris notes that in the IT world, change is nothing new. In fact it’s essential. The key is to understand, embrace, and harness the new possibilities. “The way we use, analyze, and act upon data keeps changing,” says Morris. “It’s a reality for which every successful business must prepare.”

Every business is a data business

Through decades working at the frontlines of digital innovation, Morris has learned that every business is a data business. “Innovations in data management have paved the way for much more efficient ways of using information,” he says, “and data at the edge is no different.”

Data at the Edge illustrates how enterprises can begin now to devise ways to deliver untapped value from data in the new ecosystem. What does the edge mean for enterprises, cities, small businesses, and individual consumers? How does data at the edge enable us to work, play, live, commute, and leave the world a better place for future generations? What new opportunities for extracting value from data arise at the edge? These are the questions this report answers.

The report weaves in several examples of how the edge already is transforming global businesses and benefiting humanity. In Chile, an AI-powered, sensor-equipped irrigation system for cultivating blueberries is expected to reduce water usage by 70 percent compared to other irrigation methods. In Japan, robots are growing lettuce in factories with floor-to-ceiling stacks — an example of how edge-driven automation is helping to put food on the tables.

The edge is here now, and it’s in Seagate’s own factories

“In the report we wanted to share some of the challenges we’ve faced and solved in our own factory as well,” says Srinivasan. “Our factory floor is a good example of the edge, and we built out a solution to process data, apply machine learning and use edge analytics, and make quick decisions right there on the factory floor.”

Seagate produces millions of units per quarter, and billions of transducers per year. “This kind of production requires a heavily automated process, and the system’s being asked to make 20 to 30 decisions per second,” notes Srinivasan. “At that rate, you simply cannot wait for data that’s captured on the production line to be sent over to a centralized location, then processed, and then the decision sent back. We can’t stop the production line for it; we need to keep operating at very high throughput while also maintaining high quality. To do that, we need to keep decision making close to where the data is produced. That’s why we put our own image-analytics anomaly-detection solution on the factory floor. It cuts the latency from hundreds of milliseconds to less than 10 milliseconds.”

“We’ve included that and other examples in the report to illustrate that the edge is not far away,” he says. “It’s not even a year or two away — the edge is here.”

IT architects must learn the requirements of the edge

“We decided to put together this report to talk about what it means to process so much data at the edge,” says Srinivasan. “What do any one of the industries building out an edge-to-core architecture need to worry about? What’s the scale, what’s the complexity? When considering what solutions have worked in existing architectures like on-prem datacenters or cloud datacenters, what needs to be different? What do I need to know when I build for the edge? We want to help our ecosystem partners with some of the initial answers, but also to offer guidance on some of the key questions that they can start answering and we can answer jointly with them.”

Decision makers and IT architects working in many verticals will benefit from understanding the edge better — the people who are planning the future of content delivery networks, telecommunications, smart manufacturing, smart agriculture, autonomous vehicles and more.

“The people helping create these solutions go beyond traditional IT experts,” Srinivasan emphasizes. “They include the operational technology people who run the factories, the data scientists, the autonomous vehicles software teams, and the infrastructure managers working to develop private cloud and edge infrastructure for telecom.”

“Data at the edge of the network is creating new markets and unlocking new value,” says Srinivasan. “To keep growing, businesses need to take advantage of new edge-powered opportunities. Data at the edge can help solve some of the biggest challenges facing us today — one farm and one factory floor at a time.”

What’s driving demand for new edge computing architecture?

According to Srinivasan, through research and working with a variety of IT ecosystem leaders Seagate honed in on four main reasons edge is emerging as a major element in the IT 4.0 paradigm.

The report shows how these four key factors are driving demand for edge computing: latency; insufficient bandwidth for high data volumes; efficiency and cost; and data sovereignty and compliance regulations.

“Number one is latency,” says Srinivasan. “Given intrinsic physical constraints of IT and telecom infrastructure, it just takes so much time to move data from where it’s generated over to a centralized location; you can’t be the speed of light in this case. So latency is key in every decision about how to manage data, and some of the factors in making those decisions are measured in less than 10s of milliseconds — whereas the time it takes to send data to a centralized location and back could be on the order of 100 or 200 milliseconds.”

“Number two is the bandwidth issue,” he continues. “The volume of data in aggregate has shifted from exabyte to zettabyte scales. And it’s only continuing to increase now with the many new sensors we’re seeing — not just temperature, weather, vibration or other senses that collect small amounts of data, but cameras and radar and LIDAR and others that generate a large volume of data. And there will be many, many such sensors; 5G can locally support millions of sensors in a square kilometer — so there’s an enormous amount of data to be sent, but there’s not enough bandwidth to send all of it to centralized cloud datacenters.”

“Third is efficiency. Even if you did intend to send all possible data to a centralized location, the cost and complexity to build out a centralized architecture, to process so much data, in the Data Age era it’s simply not going to be manageable, compared to being able to distribute the time-intensive processing to the edge so it’s closer to the data source.”

“And the fourth reason,” Srinivasan concludes, “is the requirement that data is always treated in a way that complies with regulations and customer standards. When you’re dealing with data permissions and data security requirements, in many cases it simply may not be possible for you to send all data out of a particular region to be processed in a centralized location.”

What will IT architects do differently?

“We hope the report will help drive awareness that even though we all understand the existing IT infrastructure well, building and solving for the edge is different, and will need a different kind of thinking,” says Srinivasan.

“We hope the report will help drive awareness that even though we all understand the existing IT infrastructure well, building and solving for the edge is different, and will need a different kind of thinking,” says Srinivasan.

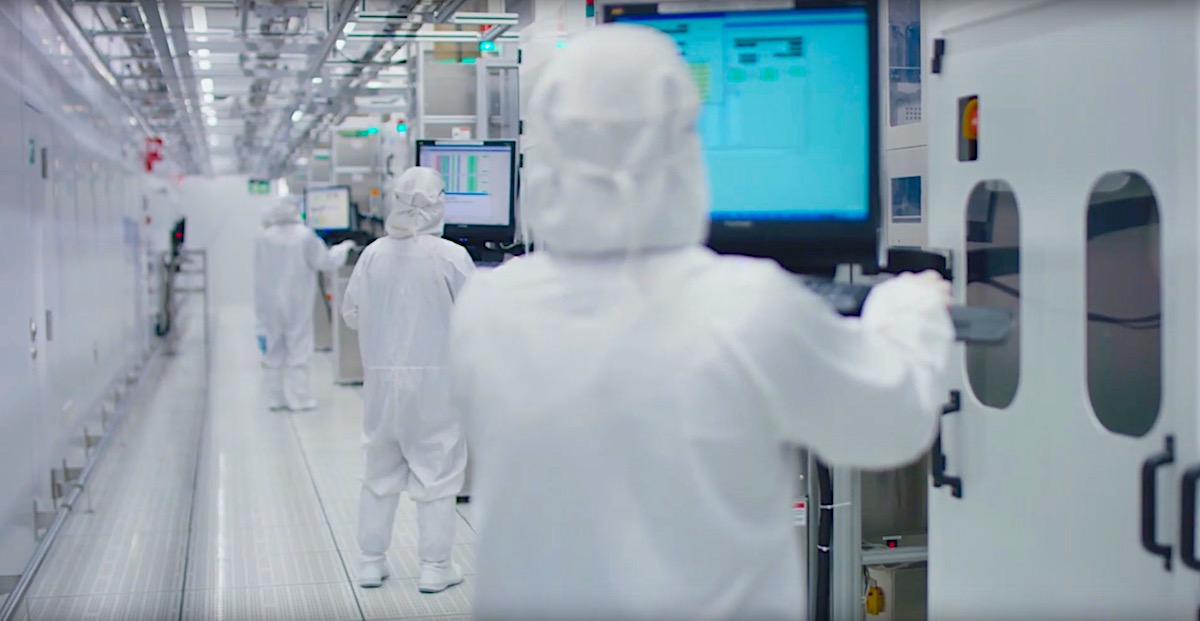

The mechanics of designing traditional datacenter infrastructure or a cloud datacenter are very different from architecture at the edge. Traditional datacenters have climate-controlled environments, continuous dual redundant power supplies, and often physical security systems including armed guards. They also have an army of IT personnel on site to manage them.

In contrast, says Srinivasan, “an edge datacenter could be on a telco tower, or in a barn. It may be exposed to the elements, and climate control may be a much bigger challenge.”

“It will have some level of physical security, but you can’t just rely on that,” he says. “You need to have data security built into the architecture so that data is secure even in cases of disaster or malfeasance. Even if a thief walks into an edge datacenter, opens a system and walks out with a storage module, that should be useless to them — they shouldn’t be able to get any data from it.”

And he notes that when edge systems are ubiquitous, there won’t be IT personnel who can quickly come in and fix issues whenever they arise. So edge systems need to be especially resilient; even if something happens, the datacenter should be able to recover and function without bringing down the whole operation.

In other words: “If we intend to process data at the edge, then we need to bring data center-like functionality to the edge — the power, the cooling aspects, the security,” Srinivasan says. “We’re essentially designing a localized datacenter for each application. A telecom datacenter within a cell phone tower, or a smart agriculture datacenter in a barn at a farm.”

Many data architects are working to solve these infrastructure issues. “The goal is to solve them in a way in which edge datacenters have simplicity and telemetry and everything needed built in, so you don’t need a person out there in front of every cell phone tower of barn to manage the datacenter.”

A strategy for end-to-end data orchestration

Srinivasan advises that everyone building out edge architecture will need to need to start with a certain mindset: focus on the orchestration of data.

“We know that data is now coming from various sources — the endpoints at the edge,” he says. “Think about what happens to that data next. Even data that’s quickly analyzed and acted upon at the edge is not going to stay at the edge; data needs to be sent over to a final location for further analytics or for longer term storage. The IT architect needs to have an end-to-end thought process that defines their data orchestration strategy.”

BS Teh concurs, emphasizing the challenges of managing and securing the burgeoning data. “As they increase their Edge investment, enterprises must have the right setup to withstand the huge increases in data volume and costs that are coming down the line,” Teh told ETCIO. “Organizations will need to build on their central cloud computing architecture and develop the ability to process — and, equally importantly, securely store — more data at the Edge.”

To learn more about building the new IT architecture, please visit and download the new report Data at the Edge.