The Sage2 Project has launched.

Sage2 is the new follow-on project to the three-year SAGE project, which was funded by the European Commission and coordinated by Seagate and ended very successfully in 2018. Ten partners across Europe came together to build a novel storage platform that could deal very effectively with immense volumes of data generated by scientific simulations as well as by large scale scientific experiments. SAGE demonstrated a storage platform based on Seagate’s proprietary Seagate Mero Object Storage software technology, where the object store worked with multiple tiers of storage based on novel Non-Volatile Memories, Flash and different disk drive technologies seamlessly as part of a single hierarchical storage system driven by Seagate’s object store.

The new Sage2 project will run for three years. Again led and coordinated by Seagate, Sage2 brings together a world-class European consortium of extreme scale applications and software tool developers helping to develop an ecosystem around Seagate’s object store.

While the focus of SAGE was on big data and extreme computing use cases, the focus of Sage2 is AI/Deep Learning. The I/O and storage needs of AI/Deep Learning workloads are not clearly understood.

Watch the video to hear several Sage2 consortium leads describe its goals and challenges:

The challenge presented by diverse data sets

“Science is generating masses and masses of data,” points out Shaun de Witt, Sage2 Application Owner at the UK’s Culham Centre for Fusion Energy. “It’s coming from new sensors that are becoming increasingly more accurate or generating more volumes of data. But data itself is pretty useless. It’s the information you can gather from that data that’s important.”

“Making use of these diverse data sets from all these different sources is a real challenging problem,” says de Witt. “You need to look at this data with different technology. So some of it is suitable for GPUs. Some of it is suitable for standard CPUs. Some of it you want to process very low to the actual data — so you don’t want to put it into memory, you actually want to analyze the data almost at the disk level.”

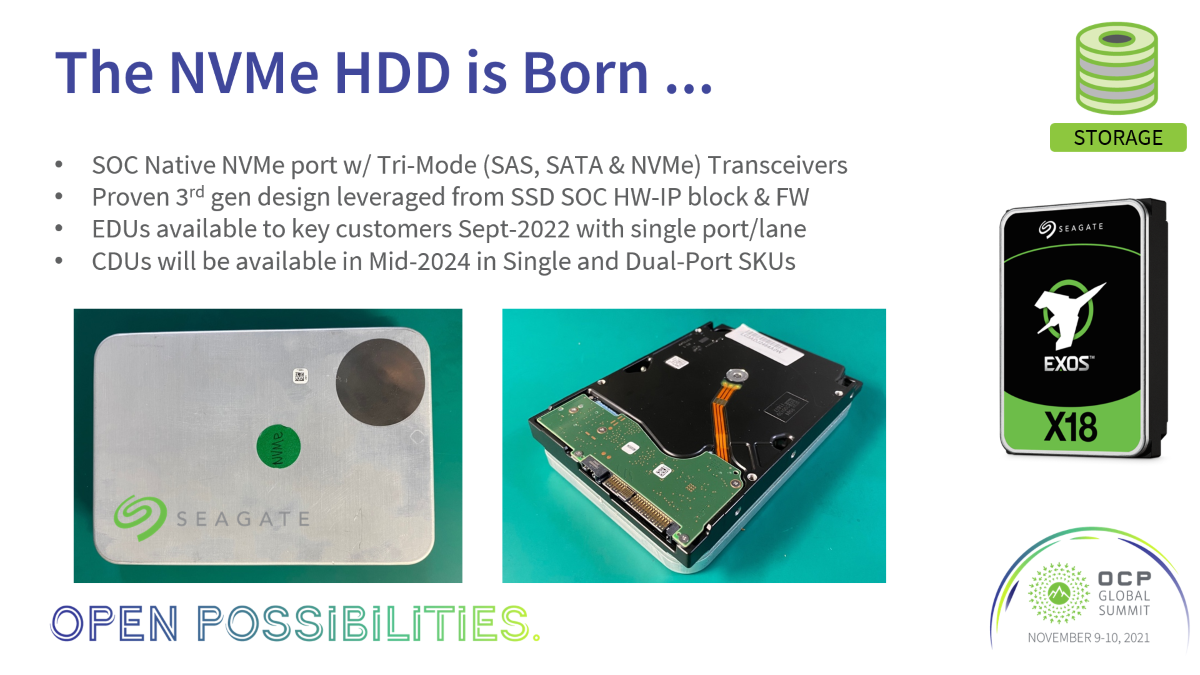

Sage2 will shed some light on that and demonstrate a prototype operating with these new classes of workloads. Sage2 will also focus on optimally using the latest generation of Non Volatile Memories using fundamentally new concepts such as Global Memory Addressing, and studying the use of new low-power processing environments (for example, ARM-based) for storage systems. Sage2 also provides an opportunity to study emerging classes of disk drive technologies, for example those employing HAMR and multi-actuator technologies, for the above workloads.

“The Sage2 architecture actually allows us this functionality,” de Witt explains. “It is the first place where all of these different components are integrated into a single layer, with a high speed network which lets us push data up and down and between the layers as fast as possible, allowing us to generate information from data at the fastest possible rate.”

Making new data use cases possible

According to Pawel Herman, Sage2 Applications Work Package Lead at the KTH Royal Institute of Technology, the use cases being explored for Sage2 really demonstrate the various capabilities of the new hardware to be developed. “There’s a quite broad scope of these application use cases,” Herman says. “They range from deep learning, or even artificial intelligence-based applications, for large scale problems where we have to deal with large amounts of data. But they’re also concerned with physics simulations, they’re concerned with brain imaging data analysis, and they’re also strongly related to fields like radio astronomy.”

The prototype at the Jülich Supercomputing Centre, Germany, used for SAGE and Sage2.

The Sage2 project will utilize the SAGE prototype, which has been co-developed by Seagate, Bull-ATOS and Jülich Supercomputing Centre (JSC) and is already installed at JSC in Germany. The SAGE prototype will run the object store software stack along with an application programming interface (API) further researched and developed by Seagate to accommodate the emerging class of AI/Deep Learning workloads and suitable for edge and cloud architectures.

The first year of the Sage2 project will focus on co-designing/architecting the system and defining the software ecosystem. The second year will focus on implementation. The final year will focus on demonstrating the value of the storage system for these real-world AI/DL applications.

Sage2 is extremely well aligned with extreme computing I/O requirements and the data storage requirements emerging as part of the new IT4.0 era supporting the data and computing requirements of AI/DL. We are creating the necessary pieces of the puzzle and paving the way for building storage system platforms for the exascale era and beyond.

“Sage2 will allow us to investigate completely new areas in completely new ways — ways that will revolutionize science,” says de Witt. “It’s not just about big data, it’s now integrating big data, high performance computing and all the technologies that are available to us now and in the future. It will really be a game changer in the way that we do a lot of our work.”

We will keep you posted on further updates. Stay tuned and follow the developments at sagestorage.eu and Twitter: @sagestorage.

—

Participants in the Sage2 consortium are: Seagate (UK), Bull-ATOS (France), UK Atomic Energy Agency (UK), KTH Royal Institute of Technology (Sweden), Kitware (France), University of Edinburgh (UK), Jülich Supercomputing Centre (Germany), French Alternative Energies and Atomic Energy Commission/CEA (France).