The UN Intergovernmental Panel on Climate Change (IPCC) has reported climate change and its effects are advancing even more quickly and with far greater impacts than models predicted only a few years ago. As public awareness grows and many millions of citizens participate in climate strikes this year, the need for action to try to limit the extent of climate catastrophe we’ll face is ever more evident.

The IPCC’s reports indicate that government action on a scale not seen since the United States mobilized for World War II will be necessary to redesign and rebuild the world’s energy infrastructure. Meanwhile, it’s also incumbent on organizations and companies that have committed to bold climate action, and to exceeding Paris Agreement goals, to exploit every means available to understand what actions will best address the climate crisis, in the near term and into the future.

To understand the complex interactions of many planetary factors — including the effects of self-reinforcing positive feedback loops that amplify impacts and trigger further abrupt changes — requires that we deploy enormous analytical power on huge and always-growing data sets. It’s an urgent and daunting task.

Imagine fitting the entire planet inside a computer. Using the world’s fastest supercomputers and big data models, climate scientists are creating computer models of the Earth’s air, water, and land systems at a global scale.

Recreating the climate inside a computer

Countries, companies, and communities are already benefiting from this scale model of the Earth. “It’s allowing us to do work that was science fiction a year ago,” said Dan Jacobson, chief scientist for Computational Systems Biology at Oak Ridge National Laboratory.

Jacobson is referring to Summit, the world’s fastest supercomputer. The machine operates at exascale levels, and its power is undeniable: Summit is capable of running close to three billion billion calculations per second. It recently performed a massive climate simulation in three hours; on a more typical system, this data crunching would have taken 133 years to complete, according to Oak Ridge National Laboratory. For climate scientists, this kind of computing power opens up new modeling possibilities.
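To put those two runtimes side by side, the short sketch below simply divides one by the other. It is arithmetic on the figures quoted above and nothing more; the 365.25-day year and the function name are assumptions made for illustration.

```python
# Rough speedup implied by the figures quoted above (3 hours vs. 133 years).
# Assumption: a year is taken as 365.25 days; all names are illustrative.
HOURS_PER_YEAR = 365.25 * 24


def implied_speedup(slow_years: float, fast_hours: float) -> float:
    """Return how many times shorter the fast run is than the slow one."""
    return (slow_years * HOURS_PER_YEAR) / fast_hours


speedup = implied_speedup(slow_years=133, fast_hours=3)
print(f"Implied speedup: roughly {speedup:,.0f}x")
```

Under those assumptions, the quoted figures imply a speedup on the order of several hundred thousand times.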

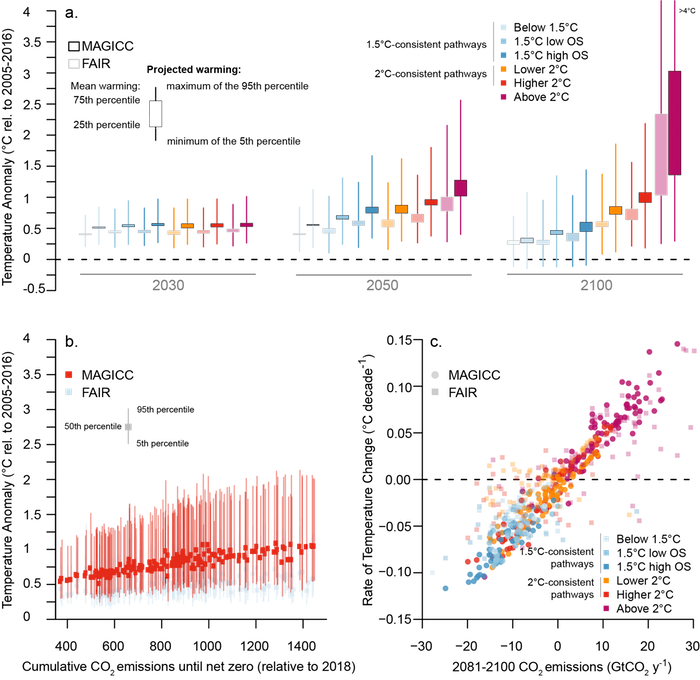

Data from the IPCC special report on impacts of global warming of 1.5 °C above pre-industrial levels, showing projected effects based on emissions.

The climate is a notoriously hard nut to crack, particularly when it comes to creating precise models. Global systems include the interplay of water, temperature, atmospheric gases, and sunlight, among thousands of dynamic and variable factors. That makes it extraordinarily difficult to forecast the weather a month out, let alone to predict precise changes over decades.

But exascale supercomputing has given researchers an edge. These machines, in effect, compute complex climate interactions through sheer brute force. Researchers input every factor of the planet’s climate — 25- to 100-kilometer sections of Earth’s ocean, atmosphere, and land — as single data points. Then, using sophisticated, high-resolution models, supercomputers can analyze billions upon billions of interactions between these data points simultaneously, across the entire planet.
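To get a feel for how many data points those 25- to 100-kilometer sections imply, here is a back-of-the-envelope sketch. It assumes a spherical Earth with a mean radius of 6,371 km, tiled by uniform square cells; real climate models use more elaborate grids plus many vertical layers, so treat the numbers as orders of magnitude only. The function name is hypothetical.

```python
import math

# Assumption: Earth is treated as a sphere with mean radius 6371 km and
# tiled by uniform square cells (real model grids are not this simple).
EARTH_RADIUS_KM = 6371.0


def surface_cells(cell_size_km: float) -> int:
    """Approximate number of square cells needed to cover Earth's surface."""
    surface_area_km2 = 4 * math.pi * EARTH_RADIUS_KM ** 2
    return round(surface_area_km2 / cell_size_km ** 2)


for size_km in (100, 25):
    print(f"{size_km} km cells: about {surface_cells(size_km):,} per surface layer")
```

Shrinking the cell edge by a factor of four multiplies the cell count by about sixteen, before any vertical layers or time steps are counted, which is one reason finer resolution demands so much more computing power.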

By precisely replicating global climate conditions, these models offer a better understanding of the mechanisms of climate change — and account for everything from changes in atmospheric pressure and ice melt, to regional fluctuations in precipitation patterns and water temperature. Most importantly, they can predict future changes with unprecedented speed, scale, and precision.

This new computing power offers the closest thing that scientists have to a full-scale model of Earth’s climate. Already, the research is providing invaluable answers to our most pressing climate questions, such as the future impact of climate change on our communities, infrastructure, and energy use.

Tangible benefits of climate supercomputing

As the value of next-generation supercomputing becomes clear, research institutions around the world are developing their own next-gen machines to tackle climate science. For instance, researchers at the National Center for Supercomputing Applications (NCSA), the National Oceanic and Atmospheric Administration (NOAA), the Barcelona Supercomputing Centre, and the Argonne National Laboratory are using supercomputers to model the effects of energy production on global temperature, the intensity of hurricanes and tropical cyclones, and the impact of extreme weather on our infrastructure.

U.S. weather forecasting centers are already benefiting from supercomputing science. NOAA, which provides forecasts for extreme weather, recently installed new supercomputers at its U.S. data centers. The processing boost, along with 60 percent more storage capacity, allows NOAA to run its next-generation forecast model, the Global Forecast System, farther into the future and at higher resolution: to 16 days out, from a previous 10 days, and at 9 km resolution, from 13 km. This improvement may sound marginal, but it gives weather forecasters and emergency response services a vastly improved ability to keep the public informed and to deliver critical aid effectively during a disaster.
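A quick calculation suggests why the change is less marginal than it sounds. The sketch below assumes that the number of horizontal grid cells scales with the inverse square of the grid spacing, and it ignores vertical levels and time-step changes entirely; the function names are illustrative and not part of any NOAA tooling.

```python
# Assumption: horizontal cell count scales with 1 / (grid spacing squared);
# vertical levels and time-step changes are ignored in this sketch.
def cell_count_ratio(old_km: float, new_km: float) -> float:
    """Factor by which the horizontal cell count grows at finer spacing."""
    return (old_km / new_km) ** 2


def forecast_length_ratio(old_days: float, new_days: float) -> float:
    """Factor by which the forecast window grows."""
    return new_days / old_days


cells = cell_count_ratio(old_km=13, new_km=9)
days = forecast_length_ratio(old_days=10, new_days=16)
print(f"Horizontal cells: about {cells:.2f}x")
print(f"Forecast length: {days:.1f}x")
print(f"Combined: about {cells * days:.1f}x")
```

Under these simplifications, the finer grid roughly doubles the number of horizontal cells, and the longer forecast window pushes the combined workload to more than three times the original.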

Meanwhile, communities and companies are also benefiting from the research. For instance, telecom giant AT&T, which has spent $843 million since 2016 on disaster recovery, recently partnered with the Argonne National Laboratory to predict the impact of extreme weather on its cellular equipment. While previous-generation computers could only capture results at a resolution of 100 km (62 miles), too broad for analyzing, say, the impact of flooding on a city, the Argonne supercomputer narrowed its predictions to a resolution of just 12 km (7.5 miles), and a mere 200 meters (656 feet) for flooding data. As regional climate patterns change, computations like these give service providers an unprecedented ability to protect vital telecom infrastructure, such as buried fiber optic cables.

In general, supercomputers can help us develop solutions that reduce the degree of climate change Earth faces and minimize the massive toll of extreme weather on our economy and infrastructure. In the U.S., fluctuations in weather account for an astounding variation of between 3 percent and 6 percent in our annual GDP (a $1.3 trillion swing), and in 2018, extreme weather resulted in roughly $80 billion in damages. With more accurate forecasts and better climate projections, government officials and corporations can help mitigate those costs and ready our cities for weather-related challenges.

Responding to the impacts that we fail to prevent

Supercomputing research also promises to inform how the U.S. and the international community respond to changes in climate in the future. While climate scientists understand the big-picture dynamics of climate change, supercomputers will help to refine their analyses. The result: more accurate climate predictions, along with deep insights into the complex interplay between humans, organisms, energy, and the environment.

Oak Ridge National Laboratory, for instance, is conducting research with Summit into how regional environmental shifts could impact the sustainability of our food supply — assessing how certain crops and plants react and adapt to changing conditions like temperature and water and nutrient availability. Such research could inform the practice of precision agriculture, where scientists bioengineer plants to thrive in changing environments. Just as importantly, the Oak Ridge research will also study how climate change relates to the possible spread of human and biological disease. Researchers believe that once-isolated illnesses are likely to thrive in changing regional environments.

Among the foremost research concerns is the possible future impact on energy production. Research from the Department of Energy’s (DOE’s) Energy Exascale Earth System Model (E3SM) will explore just that, including the two-way dynamic between natural and human activities. For instance, E3SM researchers at the Los Alamos National Laboratory will assess how fluctuations in regional temperature could strain local energy grids, as well as how changing water availability could impact the output of hydroelectric and nuclear power plants. Similarly, researchers will examine the impact that extreme water cycle events, like flooding, droughts, and sea level rise, could have on critical coastal infrastructure. The long-term goal of the DOE: to determine how, and where, U.S. energy policy will need to adapt to a changing climate.

What can I do about the climate crisis?

Outside of research institutions, most consumers, and even most business leaders, will never get their hands on a supercomputing climate simulation. But research supported by supercomputing is already reshaping the world around them.

Citizens can use the knowledge and predictions from the research to pressure their governments, large companies, and organizations to take direct, evidence-based action in the most effective and targeted ways, in the face of specific predicted catastrophic impacts on humanity. And the results of the research are sure to influence the global response to the impacts of climate change, and to inform worldwide strategies that policymakers can pursue to keep the planet habitable.

Climate change presents a significant and very concerning set of complex challenges for every citizen in the global community. As advances in supercomputing continue to expand, my hope is that key stakeholders in the climate fight — researchers, governments, corporate entities, and the public — will be better informed, and more prepared, to take stronger and smarter action and put effective solutions in place.