An intermix of requirements: capacity, performance, density, latency, power and TCO

Architecting optimal data management and storage solutions for cloud data centers is, to say the least, complex. It requires a broad understanding of an intermix of requirements that in some cases contradict each other and must be managed to complement each other: among others, competing needs for high capacity, high performance, density, latency, power consumption and Total Cost of Ownership (TCO).

Clearly, storage and data management for cloud environments will never be served by a single technology. The needs of each data tier dictate which types of purpose-built storage technology must be deployed: for example, nearline hard drive designs to ensure less-frequently accessed information is always available, high-performance HDDs for mission-critical data, and the SSD tier for instantaneous interaction with immediately actionable data.

Even within this SSD tier there are choices to be made. SSD technologies are expanding and refining their purpose-defined capabilities. This includes both client SSDs and robust enterprise SSDs. When we look at the various markets they fit into, it is important to consider the opportunities for SSDs in the individual markets and workloads in which they’re deployed. One key market is the Cloud.

Given the migration towards hyper-converged infrastructure and the vast computing and storage resources needed to churn through massive amounts of information emanating from the Cloud, SSDs have become a vital component of these deployments. This heavy growth market often consists of massive scaling of homogeneous server types utilizing open solutions, such as OCP or OpenStack, with a key objective of optimizing both OpEx and CapEx. In addition, it is important for Cloud environments to meet their end-users’ needs, including quick access to real-time data to extract the most value from it.

SSDs account for a growing share of the costs of large-scale application deployments, such as the Cloud. With this come some specific requirements of the NVMe logical device interface specification to get the most benefit from SSDs. NVMe SSDs offer particularly favorable technology benefits for Cloud providers. SSD vendors have also provided options, specific to NVMe, that enable the Cloud to get the most out of its SSD investment. Following are some considerations for both.

How cloud data centers get the most from their SSDs

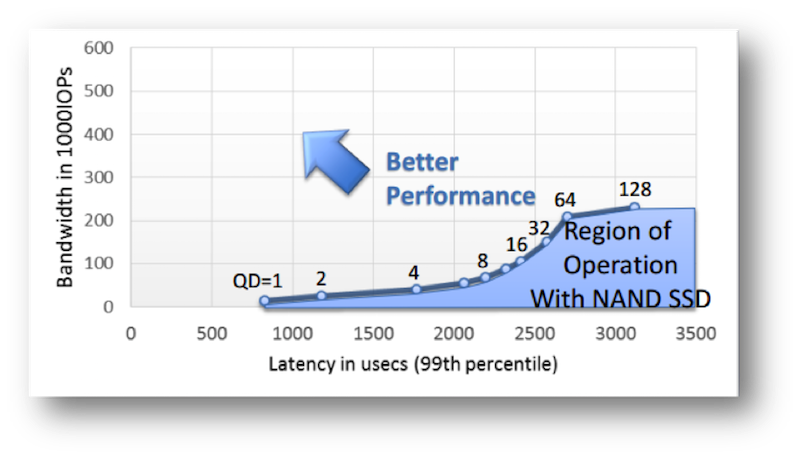

For Cloud environments to get the most out of their SSDs, there are a couple of items to remember. First is cost for performance, or $/IOPS, to achieve the best CapEx. While $/GB is also important, it can be addressed by including HDDs for less frequently accessed data: effective cost-to-performance ratios can be achieved by deploying SSDs alongside HDDs and dynamically moving information between storage media types to meet space, performance and cost requirements. Second, it is key for Cloud administrators to make workloads SSD-friendly, keeping the overprovisioning (OP) space to a minimum, both to achieve high performance and to address sub-optimal performance and endurance immediately. Monitoring and tuning the data center as these issues come up will ensure optimal performance and a long SSD lifespan.
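The trade-off between $/IOPS and $/GB can be made concrete with a small calculation. The following is a minimal sketch: the `Device` class, the `choose_tier` helper, its access threshold, and all prices and performance figures are hypothetical values chosen for illustration, not measurements of any real product.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    price_usd: float    # hypothetical purchase price
    capacity_gb: float  # usable capacity
    iops: float         # sustained random IOPS

    @property
    def usd_per_gb(self) -> float:
        return self.price_usd / self.capacity_gb

    @property
    def usd_per_iops(self) -> float:
        return self.price_usd / self.iops


def choose_tier(accesses_per_day: int, hot_threshold: int = 100) -> str:
    """Place frequently accessed data on SSD and the rest on HDD."""
    return "ssd" if accesses_per_day >= hot_threshold else "hdd"


# Illustrative numbers only (assumptions, not real product data).
ssd = Device("ssd", price_usd=400.0, capacity_gb=1000.0, iops=400_000.0)
hdd = Device("hdd", price_usd=200.0, capacity_gb=8000.0, iops=200.0)

for dev in (ssd, hdd):
    print(f"{dev.name}: {dev.usd_per_gb:.3f} $/GB, {dev.usd_per_iops:.4f} $/IOPS")
print(choose_tier(accesses_per_day=5000), choose_tier(accesses_per_day=3))
```

Under these assumed figures the SSD wins on $/IOPS and the HDD wins on $/GB, which is why a tiered deployment that moves data by access frequency can serve both goals.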

NVMe technology, or Non-Volatile Memory Express, evolved from the cooperation of at least 90 technology industry leaders, including Seagate, resulting in NVMe specifications that are publicly available. This interface specification seeks to enable SSDs to make the most effective use of the PCIe bus. Operating at the host controller, NVMe is a defined command set and feature set for these SSDs to achieve higher performance and efficiency across a wide range of enterprise environments. Instead of taking on the work of designing an in-house SSD solution that needs to be modified for each NAND generation, NVMe offers a way to leverage an open standard that gives applications and file systems more direct control over SSD behavior. Several NVMe features are particularly compelling for the Cloud. These include:

Attribute Pools and Banding

The NVMe standards include specs for implementing Quality of Service (QoS) by defining bands – also termed attribute pools. These pools have certain characteristics similar to SAN devices virtualizing a pool of disks to virtual FC or iSCSI targets. They are often associated with capacity, performance, endurance and longevity. Attribute pools are defined per namespace: a single attribute pool can span multiple physical NAND devices, and each namespace may have one or more attribute pools. To manage these pools, the host specifies the requested QoS by using the Management Attribute Pools feature. This feature enables the band to expose specific characteristics such as a latency range or a bandwidth and IOPS range. As a result, multiple workloads can be stored on the same NAND, each optimized for its specific workload characteristics, instead of being stored inefficiently (with additional and unnecessary costs) on multiple NAND devices.

Dataset Management and Hinting

NVMe Dataset Management (DSM) provides an extensive hinting framework, with a dedicated “Dataset Management Command” to specify hints for optimizing performance and reliability per IO. Hinting includes IO classification, policy assignment and policy enforcement. IOs are identified or grouped by classifiers. Storage policies are then assigned to classes of IOs and objects, and enforced in the storage system. Several of these policies optimize NAND access. One example is a priority queue, which orders IOs by urgency. Another is pre-fetch: the host passes a hint directly to the NVMe device as an out-of-band DSM command so that the device can attempt to satisfy upcoming read requests in advance. In addition, the host may indicate that retry effort on an IO error should be limited by setting the Limited Retry (LR) field. Without this, the controller would apply all available error recovery means to complete the IO operation, slowing IO completion; the feature therefore enables faster overall IO completion. Through DSM and hinting, mixed workloads can be tuned on a per-IO basis to improve performance.
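The classify, assign, enforce sequence described above can be illustrated with a small host-side model. This is a conceptual sketch only: it does not encode real NVMe commands, and the IO classes, the `POLICY_PRIORITY` table, and the `HintedQueue` class are hypothetical names introduced for illustration.

```python
import heapq
from itertools import count

# Hypothetical policy table: lower number means more urgent.
POLICY_PRIORITY = {"metadata": 0, "user_read": 1, "background": 2}


def classify(io: dict) -> str:
    """Classification step: map an IO to a class (assumed 'kind' field)."""
    return io["kind"] if io["kind"] in POLICY_PRIORITY else "background"


class HintedQueue:
    """Enforcement step: dispatch IOs in policy order, FIFO within a class."""

    def __init__(self) -> None:
        self._heap = []
        self._seq = count()  # tie-breaker that preserves submission order

    def submit(self, io: dict) -> None:
        priority = POLICY_PRIORITY[classify(io)]  # policy assignment step
        heapq.heappush(self._heap, (priority, next(self._seq), io))

    def next_io(self) -> dict:
        return heapq.heappop(self._heap)[2]


queue = HintedQueue()
queue.submit({"kind": "background", "lba": 10})
queue.submit({"kind": "user_read", "lba": 20})
queue.submit({"kind": "metadata", "lba": 30})
order = [queue.next_io()["kind"] for _ in range(3)]
print(order)
```

The sequence counter keeps ordering stable within a class and ensures the heap never has to compare the IO dictionaries themselves.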

Garbage Collection and Directives (aka Streaming)

Writing data to SSDs can be a multi-step process. As data cannot be directly overwritten on flash, old data must first be erased, after which new data may be written. The process by which the flash reclaims the physical areas that no longer hold valid data is called “garbage collection.” This process requires consolidating valid data from a flash block, writing it to a different block, and then erasing the original block, which removes the invalid data and frees up the space it was consuming. This can limit response time and transaction rates. With directives (multi-streaming) in NVMe, streams allow related data to be written together on the media so that it can be erased together, which minimizes garbage collection and results in reduced write amplification as well as more efficient flash utilization.

The streams represent groups of data that belong to the same physical location and hence can be allocated and freed together. The upcoming NVMe standards for streams specify directive control commands and stream identifiers for IO write commands, which in turn serve as hints to identify the related group of data.
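A toy model shows why grouping data by stream reduces write amplification. The sketch below is a simplification under stated assumptions: blocks hold a fixed number of pages, "hot" pages are all invalidated at once, and every block containing invalid pages is reclaimed. The function names and the page layout are hypothetical and do not reflect any real flash translation layer.

```python
def fill_blocks(pages, pages_per_block):
    """Pack pages into erase blocks in the order they were written."""
    return [pages[i:i + pages_per_block]
            for i in range(0, len(pages), pages_per_block)]


def gc_copies(blocks, invalid):
    """Valid pages that must be relocated before erasing blocks with invalid data."""
    copies = 0
    for block in blocks:
        if any(page in invalid for page in block):
            copies += sum(1 for page in block if page not in invalid)
    return copies


def write_amplification(host_writes, copies):
    """Total flash writes divided by host writes."""
    return (host_writes + copies) / host_writes


hot = [("hot", i) for i in range(8)]    # short-lived data
cold = [("cold", i) for i in range(8)]  # long-lived data
invalid = set(hot)                      # all hot pages are later invalidated

# Without streams: hot and cold pages interleave inside every block.
mixed = fill_blocks([p for pair in zip(hot, cold) for p in pair], 4)
# With streams: each stream fills its own blocks.
streamed = fill_blocks(hot, 4) + fill_blocks(cold, 4)

wa_mixed = write_amplification(16, gc_copies(mixed, invalid))
wa_streamed = write_amplification(16, gc_copies(streamed, invalid))
print(wa_mixed, wa_streamed)
```

In this model the interleaved layout forces the cold pages to be copied out of every block before it can be erased, while the streamed layout leaves whole blocks invalid, so nothing needs to be relocated.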

Coupled with this, the upcoming NVMe standards for directives also specify accelerated background control, which enables control over garbage collection and wear leveling and provides a mechanism for the host to schedule drive maintenance at a time that does not conflict with a critical time for host applications. If the host is able to predict idle time, when there are few read and write requests to the controller, it may send commands to the controller to start or stop host-initiated accelerated background operations. As a result, accelerated background operations overlap only minimally with read and write requests from the host.
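How a host might pick those idle periods can be sketched as follows. This assumes the host already has a per-interval forecast of IO counts; the function name, the threshold, and the sample forecast are hypothetical, and the actual start and stop commands sent to the controller are not modeled.

```python
def plan_background_windows(io_counts, idle_threshold):
    """Return (start, stop) interval indices where forecast IO is at or below the threshold.

    The host would start accelerated background operations at `start`
    and stop them at `stop` (exclusive).
    """
    windows = []
    start = None
    for i, n in enumerate(io_counts):
        if n <= idle_threshold and start is None:
            start = i                   # idle period begins
        elif n > idle_threshold and start is not None:
            windows.append((start, i))  # idle period ends
            start = None
    if start is not None:
        windows.append((start, len(io_counts)))
    return windows


# Hypothetical forecast of IO requests per interval.
forecast = [90, 5, 3, 80, 2]
windows = plan_background_windows(forecast, idle_threshold=10)
print(windows)
```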

IO Determinism

IO Determinism is a recent proposal in the NVMe standard that is mainly driven by Cloud providers. With IO Determinism, the main focus is on latency and on parallel execution of IOs without conflict or overhead. It enables well-behaved hosts to treat an SSD as many small sub-SSDs and to process IO in parallel in each one, so that host threads can work independently without being blocked by IOs from other threads. With a traditional SSD, if thread 1 issues a write to a region in a namespace, then thread 2 is unable to read data from the same region even if the logical blocks are not the same. In this new concept, threads 1 and 2 can use two different sub-SSDs, so they do not conflict and both can run fully independently of each other. These sub-SSDs are also called parallel units (PUs) and are created in different physical NVM. This concept is still evolving in the NVMe standard. Ultimately, it is intended to provide predictable latency at six 9s (99.9999%).
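The thread-to-sub-SSD idea can be illustrated with a simple mapping check. This is a conceptual sketch, not the NVMe mechanism itself: the function names are hypothetical, and "conflict" here just means two threads sharing the same unit.

```python
from collections import defaultdict


def assign_parallel_units(thread_ids, num_units):
    """Give each thread its own parallel unit (PU); fail if there are too few."""
    if len(thread_ids) > num_units:
        raise ValueError("not enough parallel units for independent threads")
    return {tid: unit for unit, tid in enumerate(thread_ids)}


def conflicting_pairs(thread_to_unit):
    """Pairs of threads that share a unit and may therefore block each other."""
    by_unit = defaultdict(list)
    for tid, unit in thread_to_unit.items():
        by_unit[unit].append(tid)
    return [(a, b)
            for tids in by_unit.values()
            for i, a in enumerate(tids)
            for b in tids[i + 1:]]


threads = ["t1", "t2", "t3"]
traditional = {tid: 0 for tid in threads}  # every thread shares one region
with_pus = assign_parallel_units(threads, num_units=4)

print(conflicting_pairs(traditional))
print(conflicting_pairs(with_pus))
```

When every thread maps to a distinct unit, the list of conflicting pairs is empty, which is the property that makes per-thread latency more predictable.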

Benefits with IO Determinism

More usable capacity, lower cost, efficiency and more predictable performance

Not all SSDs are created equal. It is clear the optional features available in the NVMe standard enable applications, especially the Cloud, to manage SSD behavior to their benefit. Due to this additional serviceability of these drives, Cloud customers can take advantage of more usable capacity, lower cost, efficiency and more predictable performance.