“Memories. You’re talking about memories.”

— Deckard, Blade Runner (1982)

From Blade Runner to Minority Report to The Man in the High Castle, author Philip K. Dick’s fingerprints are all over contemporary science fiction film and TV. Dick’s enduring inspiration is easy to understand. He tells compelling stories, full of complex characters and dizzying technological what-ifs. He paints plausible visions of the future, animated by big questions. For instance: “What does it mean to be human?”

In the seminal 1982 movie, Blade Runner, the intriguing answer to this question is memory. The androids (“replicants”) have human memories implanted so they can function and “feel” like real people. When someone suggests their memories are not real — or not really theirs — it doesn’t compute.

Humans have memory. Replicants have memories. So, replicants must be human, right?

This is really important.

Elie Wiesel famously said: “Without memory, there is no culture. Without memory there would be no civilization, no society, no future.”

As the global datasphere explodes — and the amount of data we generate threatens to overwhelm our capacity to “remember” it all — memory will be key.

What if we had to choose what to remember and what to forget? How would we decide what data (or memories) to keep and what to discard? Such questions give a sense of what’s at stake if data management and storage capabilities don’t keep up with the dataspheric storm.

We humans have an insatiable appetite for creativity and for generating data. So, the systems that enable us to capture, store, secure, manage, analyze, rapidly access, and share data must be equally creative and inventive.

Dataspheric storm-watch

In late 2016, IBM calculated that 90 percent of all data in the world had been created in just the previous two years. A few months later, in April 2017, IDC was predicting a 10-fold increase by 2025 in the amount of data created annually — from 16 zettabytes to over 160 zettabytes.
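To put the IDC forecast in perspective, here is a rough back-of-the-envelope sketch of the compound annual growth rate it implies. It assumes the 16-zettabyte figure refers to 2016 (an assumption, since the paragraph gives only the end year) and uses the round numbers quoted above; the helper names `cagr` and `doubling_time` are ours, for illustration only.

```python
import math


def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


def doubling_time(rate: float) -> float:
    """Years needed to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + rate)


# Assumption: 16 ZB created in 2016, growing to 160 ZB created in 2025.
START_ZB, END_ZB = 16, 160
YEARS = 2025 - 2016

rate = cagr(START_ZB, END_ZB, YEARS)
print(f"Implied growth: {rate:.1%} per year")
print(f"Annual data creation doubles roughly every {doubling_time(rate):.1f} years")
```

Under those assumptions, a tenfold increase over nine years works out to roughly 29% growth per year, with the amount of data created annually doubling about every 2.7 years.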

As we’ve noted before in this blog, it’s not just the unfathomable volume of data that we have to manage in the datasphere. It’s the other three Vs, too — variety, velocity, and veracity.

Put another way, it’s the cumulative effect of billions of additional endpoints (devices, sensors) generating a tsunami of increasingly unstructured data, all in real time, with an overriding need to balance service level agreements and life-critical decision-making with data security and end-user privacy.

Phew!

Although this is truly a paradigm shift in the making, we’re not completely at the mercy of the dataspheric storm. We’re already working on solutions to expand capacity, performance, and security at zero or nominal additional cost.

If hyperscale data centers proliferate, how will storage keep up?

Hyperscale and hyper-converged data centers are proliferating and absorbing an ever-increasing share of the world’s data. So, data storage systems must scale in concert.

Hyperscale storage has two essential capabilities — capacity and agility. It must be able to store vast quantities of information, and be able to ramp up rapidly, efficiently, and indefinitely — according to user demand.

Hyperscale storage is typically deployed in large data environments: hyperscale data centers, but also cloud computing environments, large Internet and web services infrastructures, social media platforms, large government agencies, and academic or scientific research organizations (genomics research, for example).

Storage suppliers are innovating to keep pace. In Blade Runner 2049 — the 2017 sequel — old data is encoded and stored in DNA, but it will likely be a while before we see such storage innovation bear fruit outside Hollywood.

Of course, even the general public has become almost too familiar with the concept of “cloud” storage in recent years. But in the words of Michael Manos, Microsoft’s former general manager of data center services, “the cloud is just giant buildings full of computers and diesel generators.”

Several innovative, leading-edge technologies help enable continued rapid growth in hyperscale storage. For example, there’s a cluster of software-based innovations, chiefly virtualization and software-defined storage. There’s also a ton of innovation in solid state drives (SSDs) and hybrid arrays (which combine SSDs with hard drives). And there are “lower-tech” innovations, such as new data center rack designs that fit more individual drives into the same physical space.

But these strategies alone won’t build the hyperscale storage arrays of the future. Remember, hyperscale data centers have to balance a complex set of requirements: performance (often expressed as IOPS per TB), capacity, reliability, and cost. Moreover, “hyperscalers” already depend on a huge installed base of traditional hard drives in standard formats and footprints. Realistically, they need scalable solutions that can expand that existing infrastructure today.
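To see why capacity alone isn’t enough, consider a simplified sizing model. It assumes, hypothetically, that a drive’s random IOPS stay roughly constant as its capacity grows, so IOPS per TB falls with every capacity jump, and that a fleet must be sized for whichever requirement, capacity or performance, is larger. All figures and function names here are illustrative, not vendor specifications.

```python
import math


def iops_per_tb(drive_iops: float, drive_tb: float) -> float:
    """Performance density: random IOPS available per terabyte stored."""
    return drive_iops / drive_tb


def drives_needed(total_tb: float, total_iops: float,
                  drive_tb: float, drive_iops: float) -> int:
    """Drives required to satisfy BOTH a capacity and a performance target."""
    for_capacity = math.ceil(total_tb / drive_tb)
    for_performance = math.ceil(total_iops / drive_iops)
    return max(for_capacity, for_performance)


# Hypothetical drive whose random IOPS stay flat as capacity grows.
DRIVE_IOPS = 200
for capacity_tb in (4, 8, 16, 32):
    density = iops_per_tb(DRIVE_IOPS, capacity_tb)
    print(f"{capacity_tb:>2} TB drive: {density:.2f} IOPS/TB")

# Hypothetical workload: 10,000 TB (10 PB) that must serve 1,000,000 IOPS.
print(drives_needed(10_000, 1_000_000, drive_tb=32, drive_iops=DRIVE_IOPS))
```

With these made-up numbers, the 10 PB workload needs only 313 of the 32 TB drives for capacity but 5,000 of them for performance. That kind of imbalance is why performance has to scale alongside capacity.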

Consequently, innovations in hard drive technology will continue to be a central enabler to meet the growing demand for hyperscale storage.

If hard drives are central, both capacity and performance must scale

Seagate’s approach to hard drive innovation plays out on two fronts — capacity and performance.

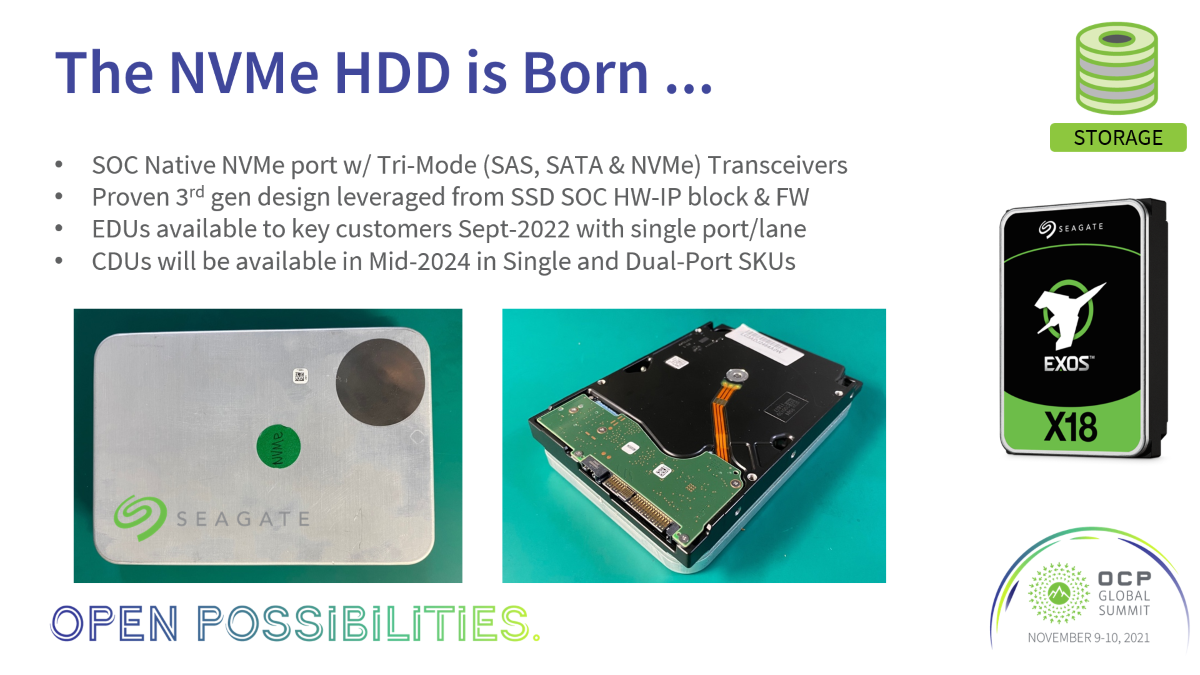

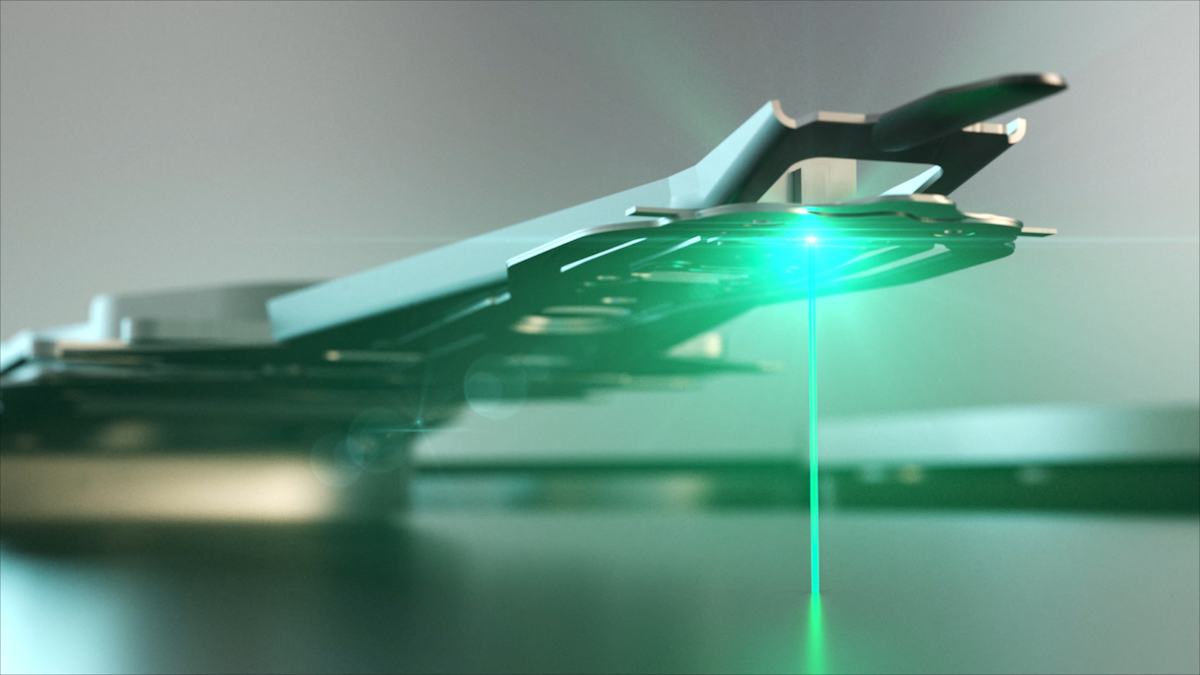

On the capacity front, heat-assisted magnetic recording (HAMR) uses a new kind of magnetic disk coating and tiny lasers, attached to the recording heads, that briefly heat a spot on the disk as data is written. This lets the drive pack “grains” of data more densely on the disk, so we can store much more data on an otherwise standard hard drive.

On the performance front, MACH.2 multi-actuator technology uses two independent actuators per drive to roughly double data throughput on an otherwise standard hard drive.

Together, these innovations will help hyperscale providers weather the coming dataspheric storm.

What’s behind the datasphere?

When we survey the datasphere — looking for examples of exploding data volume, variety, velocity, and veracity — a handful of applications are clearly at the vanguard.

Reinventing social media

When Mark Zuckerberg says, “I actually am not sure we shouldn’t be regulated,” he does have a point. If there is a dataspheric storm on the horizon, social media platforms are probably in its eye.

Social media is arguably the largest repository of unstructured “big data” on the planet. The volume, variety, and velocity of social media data are head-spinning.

And the data management demands can be breathtaking. Simply loading a user’s home page can involve hundreds of servers and tens of thousands of data points, all within a page load time of under one second.

Beyond that, industry leaders likely understand that the biggest challenge for social media in the coming months will be the fourth V: veracity. Meeting it will require big changes in how these platforms handle, process, and store data. Facebook’s CEO has outlined a solid multi-pronged plan to address the key issues that emerged recently. The company was already undergoing a massive organic evolution in its business model, with large, complex changes to its data storage strategy driven by, for instance, the exponential growth of video, virtual reality (VR), and augmented reality (AR) on social sharing platforms. Heightened scrutiny of its privacy strategy will continue to up the ante on that evolution, more or less in real time.

Data, not doping

In 2014, Team Germany crushed Brazil 7-1 in the first semi-final of the FIFA World Cup. They famously used an Adidas big data technology called miCoach to monitor player performance in training sessions and optimize training routines and on-field performance.

This is not an isolated case. Professional and amateur athletes and trainers are using all manner of datasphere technology. Dotsie Bausch and the 2012 US women’s cycling team used mountains of biometric data to prepare and train for their long-shot attempt at Olympic success. Using “Data, not Doping” as their mantra, against the backdrop of the Lance Armstrong scandal, Bausch and her teammates won silver as complete underdogs, all by leveraging multi-parameter performance tracking, big data, and robust analytics.

As the “datafication” of sports grows from widespread to ubiquitous to universal — all sports at all levels all over the world — demands on capturing, managing, and storing all that data will only increase.

Remember the “information superhighway”?

Every autonomous car is expected to generate about 40 TB of data for every 8 hours of operation, roughly as much data as 3,000 people. Moreover, most of that data will have to be shared, in real time, with every other autonomous car in the vicinity.
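The 40 TB figure is easier to grasp as a sustained data rate. The sketch below simply divides the quoted volume by the quoted operating time, using decimal terabytes; the 1,000-car fleet running 8 hours a day, every day, is a purely hypothetical scenario added for scale.

```python
TB = 10**12  # decimal terabyte (10^12 bytes)


def sustained_rate(data_bytes: float, hours: float) -> float:
    """Average bytes per second needed to produce `data_bytes` in `hours`."""
    return data_bytes / (hours * 3600)


# Figure from the text: about 40 TB per 8 hours of operation.
bytes_per_s = sustained_rate(40 * TB, 8)
print(f"{bytes_per_s / 1e9:.2f} GB/s, or about {bytes_per_s * 8 / 1e9:.1f} Gbit/s")

# Hypothetical fleet: 1,000 cars, each operating 8 hours a day for a year.
fleet_bytes = 40 * TB * 1_000 * 365
print(f"{fleet_bytes / 10**18:.1f} EB per year")
```

That works out to roughly 1.4 GB of data every second per car, and the hypothetical fleet alone would produce about 14.6 exabytes a year.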

Most of the data will also have to be stored — to resolve accidents for insurance claims, to feed metrics back to car manufacturers, to enable municipalities to optimize traffic patterns, and so on. That’s a humongous new payload that will be added to the datasphere.

It will grow even more humongous once Internet-enabled autonomous cars also pay for fuel, tolls, and parking. Not to mention narrating a tour of the city for their occupants while communicating with other “smart devices” they encounter along the way.

Autonomous cars will be key drivers of edge computing, in which more and more computing, analysis, and storage happens at the endpoints rather than at the core of the network. Whole new data storage protocols and processes will have to be invented for this brave new world.

Real-time revolution… in business

There was a time when all enterprise data was structured — more or less stable, easily queried — and it would take several days to run the right report. And everybody was OK with that.

That’s now a fading memory.

The avalanche of unstructured streaming data that inundates most businesses today is both a blessing and a curse. Today, harnessing it can be an enormous competitive advantage. Tomorrow, it may be table stakes for surviving (let alone thriving) in the datasphere.

The real-time revolution affects every aspect of business — from marketing and sales to finance, supply chain, and even facilities management.

Hence the advent of real-time analytics, which provides visibility into the full spectrum of business processes and events while they’re actually happening, mining structured and unstructured data simultaneously to enable real-time decision-making.

There are obvious benefits to real-time analytics. A business could detect an opportunity and react before its competitors do. Or it could see a threat unfolding and intervene to mitigate the impact. This era is already here: today a credit card company can monitor purchases, detect unusual patterns, and intervene, perhaps by requiring a merchant or cardholder to call for authorization, thereby preventing fraud.
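The credit card example can be illustrated with a deliberately simple sketch: keep a running mean and standard deviation of each card’s purchase amounts (Welford’s online algorithm, so nothing but three numbers per card is stored), and flag any purchase that lands far above that card’s norm. Real fraud-detection systems use far richer models; the class names, the three-standard-deviation threshold, and the five-purchase warm-up here are hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass, field


@dataclass
class RunningStats:
    """Welford's online mean/variance: one pass, constant memory per card."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std(self) -> float:
        # Sample standard deviation; treated as 0 with fewer than two values.
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


@dataclass
class SpendMonitor:
    """Hypothetical per-card monitor that flags unusually large purchases."""
    threshold: float = 3.0  # standard deviations above the card's mean
    min_history: int = 5    # purchases to observe before flagging anything
    stats: dict = field(default_factory=dict)

    def check(self, card_id: str, amount: float) -> bool:
        s = self.stats.setdefault(card_id, RunningStats())
        suspicious = False
        if s.n >= self.min_history:
            sd = s.std()
            suspicious = sd > 0 and (amount - s.mean) / sd > self.threshold
        if not suspicious:
            # Learn only from accepted purchases, so a flagged outlier
            # does not inflate the card's baseline.
            s.update(amount)
        return suspicious


monitor = SpendMonitor()
for amount in [20, 25, 22, 30, 18, 24]:
    monitor.check("card-123", amount)
print(monitor.check("card-123", 900))  # far above this card's usual spending
print(monitor.check("card-123", 26))   # within the usual range
```

Because each check touches only a few numbers per card, this style of scoring can run on every transaction as it streams in, which is the essence of acting in real time rather than after a nightly report.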

While some consumers might view this level of real-time surveillance as creepy, others will embrace it and reward businesses that deploy the technology. Either way, this raises the bar for businesses of all shapes and sizes to invest in data capture, management, and storage capabilities.

The takeaway from all this is clear.

Memory is the foundation of our society, our civilization, our future. As the datasphere unfolds, the integrity of our collective memory will depend on the ability of our data storage systems to keep up with the volume, velocity, variety, and veracity of our data.

We got this!