Deepfakes — the manipulation of digital data to create convincing phony images and video — have emerged as a significant threat to national security.

That’s the view of program managers at the Defense Advanced Research Projects Agency (DARPA), the blue-sky research agency of the U.S. Department of Defense. And just as DARPA has responded to threats from other channels — from missiles to computer hacking — it’s now at work on countermeasures for deepfakes.

The progress of DARPA-funded researchers around the world reveals the breadth of the deepfake threat, along with the technological solutions that are evolving just as quickly to combat it. It also offers a glimpse of the future of automatic deepfake detection capable of spotting fakes as soon as their creators upload them to media platforms.

The deepfake threat

Media manipulation is as old as photography itself. As early as the Civil War, commercial photographers combined images to create more lucrative composites. Later, movie studios created increasingly sophisticated special effects using manipulated film and then video.

Now, thanks to the rise of affordable computing power and open source software, just about anyone with a modicum of skill and patience can get in on the action. That includes bad actors seeking to influence elections, manipulate stock prices, thwart justice, or harm women.

“A high-end gaming computer will do,” DARPA program manager Matt Turek told attendees of DARPA’s AI Colloquium in Washington in March, regarding what’s needed to create deepfakes. “It’ll likely take hours to days’ worth of training time, depending on how much training data you have and the capabilities of your GPU,” he said.

Turek heads DARPA’s Media Forensics (MediFor) program, which funds researchers to develop software and AI that can spot deepfakes automatically.

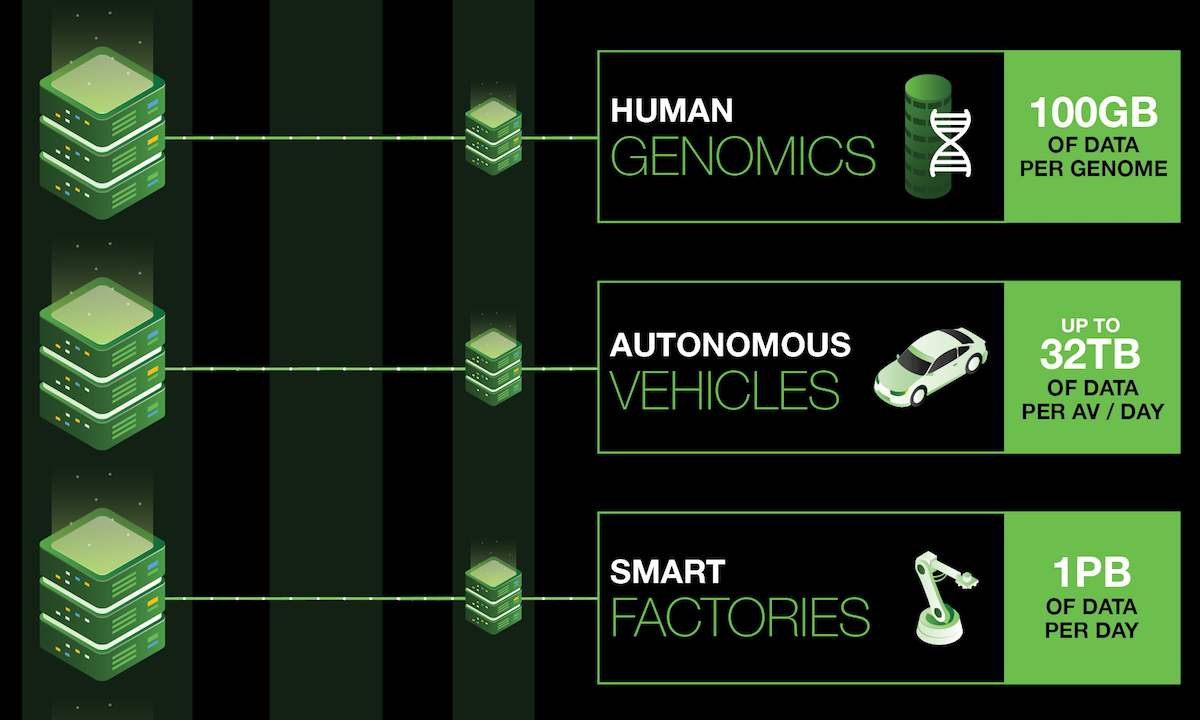

Given the volume of video uploaded to the internet every day — 500 hours a minute to YouTube alone — it’s not feasible to rely on human brainpower to spot deepfakes, even with the help of trained experts. Instead, countermeasures will have to rely on software and AI to detect them.

3 ways AI can spot deepfakes

Automatically spotting deepfakes requires training software to process massive amounts of data in order to detect manipulation in three main areas, explained Turek at the DARPA AI Colloquium:

- Digital integrity: Every pixel in an unaltered image or video matches the digital “fingerprint” of the type of camera that captured it. Breaks in that pattern, such as those left behind by editing software, give the manipulation away.

- Physical integrity: The laws of physics may appear to be violated in a manipulated image. For example, adjacent objects might seem to cast shadows from different sources of light.

- Semantic integrity: The circumstances of a real video or image will match known facts about those circumstances. For example, a video purporting to represent a given place and time should match the actual lighting conditions and weather — if outdoors — of that place and time.

Researchers at Adobe and the University of California at Berkeley are among those tackling the first challenge, that of spotting compromised digital integrity. In June, Adobe announced that an Adobe-UC Berkeley team funded by DARPA had created a system for automatically detecting facial expressions that had been manipulated using the company’s popular Photoshop product.

Meanwhile, at the University of Naples Federico II in Italy, DARPA-funded researchers have trained software to recognize digital “noise,” the characteristic pixel patterns that digital cameras produce. They run suspect images through the software to spot clusters of pixels whose noise pattern differs from the rest of the image, a sign that digital integrity may have been compromised.
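
To make the noise idea concrete, here is a minimal sketch in Python. It is not the Naples team's method, which the article does not describe in detail. It assumes a much cruder stand-in: subtract a blurred copy of a grayscale image to isolate the noise, measure the noise strength in each 16-by-16 block, and flag blocks that stray far from the image-wide median. The function names, block size, and threshold are all illustrative choices.

```python
import numpy as np

def noise_residual(gray):
    """Isolate noise as the image minus a 3x3 box-blurred copy of itself."""
    img = np.asarray(gray, dtype=np.float64)
    h, w = img.shape
    padded = np.pad(img, 1, mode="reflect")
    blurred = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            blurred += padded[dy:dy + h, dx:dx + w]
    return img - blurred / 9.0

def block_noise_levels(gray, block=16):
    """Standard deviation of the noise residual inside each block."""
    res = noise_residual(gray)
    rows, cols = res.shape[0] // block, res.shape[1] // block
    trimmed = res[:rows * block, :cols * block]
    return trimmed.reshape(rows, block, cols, block).std(axis=(1, 3))

def flag_inconsistent_blocks(gray, block=16, threshold=3.5):
    """Flag blocks whose noise level is a robust outlier (median/MAD score)."""
    levels = block_noise_levels(gray, block)
    median = np.median(levels)
    mad = np.median(np.abs(levels - median))
    scale = 1.4826 * mad + 1e-12  # MAD rescaled to approximate a std. dev.
    return np.abs(levels - median) / scale > threshold

# Synthetic demo (assumed data): uniform "camera" noise with a noisier patch
# pasted in, standing in for content that came from a different source.
rng = np.random.default_rng(0)
image = 128.0 + rng.normal(0.0, 5.0, size=(128, 128))
image[32:64, 32:64] = 128.0 + rng.normal(0.0, 20.0, size=(32, 32))
flags = flag_inconsistent_blocks(image)
print(np.argwhere(flags))  # (row, col) indices of suspicious blocks
```

In this toy case the four blocks covering the pasted patch stand out clearly. Real camera noise is far subtler and depends on scene content, which is why researchers train models on large datasets instead of relying on a fixed threshold.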

Back in the U.S., scientists at the University of Maryland are working with colleagues at UC Berkeley, again with DARPA funding, to tackle the second challenge: verifying the physical integrity of images and videos. Their Shape-from-Shading Network, or SfSNet, determines whether the lighting on faces in a given scene is consistent. Any face that doesn’t match the lighting of others in the scene likely came from elsewhere.
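
The consistency check at the end of that pipeline can be sketched in a few lines of Python. This is not SfSNet itself. It assumes some upstream model has already reduced each face to a single estimated light-direction vector (a hypothetical simplification), and it flags any face whose direction sits more than a chosen angle away from the scene's typical direction. The function name and the 30-degree cutoff are illustrative.

```python
import numpy as np

def flag_lighting_outliers(light_dirs, max_angle_deg=30.0):
    """Flag faces whose estimated light direction disagrees with the scene.

    light_dirs: (n_faces, 3) array of nonzero direction vectors, assumed to
    come from an upstream lighting estimator (not implemented here).
    """
    dirs = np.asarray(light_dirs, dtype=np.float64)
    dirs = dirs / np.linalg.norm(dirs, axis=1, keepdims=True)
    # The component-wise median is a robust stand-in for the dominant light.
    reference = np.median(dirs, axis=0)
    reference = reference / np.linalg.norm(reference)
    cosines = np.clip(dirs @ reference, -1.0, 1.0)
    angles = np.degrees(np.arccos(cosines))
    return angles > max_angle_deg, angles

# Assumed example: three faces lit from roughly the same direction,
# and a fourth lit from the opposite side.
faces = [
    [1.0, 1.0, 1.0],
    [0.9, 1.0, 1.1],
    [1.1, 0.9, 1.0],
    [-1.0, 1.0, 1.0],
]
flags, angles = flag_lighting_outliers(faces)
print(flags)
print(np.round(angles, 1))
```

Here only the fourth face is flagged. Using the median as the reference keeps a single pasted-in face from dragging the scene's "typical" lighting toward itself, though the approach would break down if most faces in a frame were fake.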

The future of deepfake defense

While optimistic about the future of deepfake detection, Turek acknowledged that it is a moving target. “We have a bit of a cat and mouse game going on here,” he said at the AI Colloquium. “We build better detectors, they build better manipulators, we build better detectors.”

One saving grace: bringing lots of computing power and expertise to bear on spotting deepfakes makes eluding detection significantly harder. “Perhaps if we raise the bar high enough,” said Turek, “we’ll take this out of the hands of an individual manipulator and put it again as a capability for higher resource manipulators.”

Formerly exotic technologies developed by DARPA have a way of reaching the mainstream: the internet itself, for example, and autonomous vehicles.

Given that history, the detection algorithms created through the MediFor program could soon benefit everyone. “Such capabilities could enable filtering and flagging on internet platforms,” said Turek, “automatically and at scale.”