A couple of years ago I got a DSLR (digital single-lens reflex) camera. After using a compact digital camera, the DSLR opened a new world of photography for me. It was great to have the option to shoot six frames per second, use different lenses and fine-tune shutter speed, exposure and other parameters.

Learning to take my photography to a higher level, from auto to manual settings, was quite an experience. Through research and talking to friends and photographers, I discovered that I needed to learn these fundamentals:

- Shutter Speed (time the sensor is exposed to light)

- Aperture (how much light the lens will allow in)

- ISO (sensor sensitivity to light)

Experimenting with each of these variables was a frustrating test of Murphy’s Law. Just when I thought I had found the right setting for, say, shutter speed, the other two would be thrown out of whack. You start to get used to shooting in some conditions, like low light, but it’s always a balancing act to understand the relationship among the three settings and how they influence each other. Should I go auto, do what the camera dictates, and settle for unsatisfying results, or go manual and struggle to get the results I want?

Fundamentals of database performance

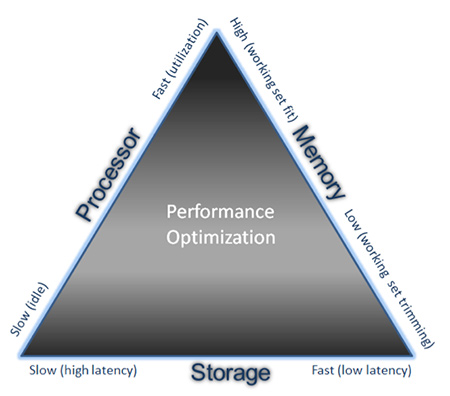

At Seagate, I do a lot of database performance testing of systems, and it reminds me of photography. Many factors can affect system performance, but these are the fundamental ones:

- Processor (instruction execution)

- Memory (volatile data storage)

- Storage (non-volatile data storage)

Consider the many systems that sport fast multi-core processors but use slow non-volatile data storage that bottlenecks performance. As for memory, this IT maxim comes to mind: “Memory is like time. You can never have enough.” It would be great to always have enough memory for your working set, but this is seldom the case for large, high-transaction systems. When a database's working set doesn't fit in memory, the system must either retrieve data from slower non-volatile storage or, depending on the operating system resources available, shrink the portion of the working set that resides in memory.
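To see why a working set that spills out of memory hurts so much, here is a minimal sketch of the standard effective-access-time calculation: each access is served either from memory or from non-volatile storage, and the average cost is the weighted mix of the two. The latency constants are illustrative assumptions, not Seagate measurements; substitute figures from your own hardware.

```python
# Illustrative latency figures only (assumptions, not measurements):
# roughly 100 ns for a DRAM access and 100 microseconds for a flash read.
MEMORY_LATENCY_NS = 100
STORAGE_LATENCY_NS = 100_000


def effective_access_ns(hit_ratio: float,
                        memory_ns: float = MEMORY_LATENCY_NS,
                        storage_ns: float = STORAGE_LATENCY_NS) -> float:
    """Average cost of one data access when a fraction `hit_ratio` of
    accesses is served from memory and the rest from storage."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * memory_ns + (1.0 - hit_ratio) * storage_ns


if __name__ == "__main__":
    for ratio in (1.0, 0.99, 0.95, 0.90):
        print(f"hit ratio {ratio:.2f}: {effective_access_ns(ratio):,.0f} ns")
```

Under these assumed latencies, a 99% memory hit ratio already makes the average access about 11 times slower than the all-in-memory case, because the 1% of storage reads dominates the total.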

Striking the right balance among processor, memory and storage performance for a given workload is key to optimizing database system performance.
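One way to make "balance" concrete is the utilization law from queueing-based performance analysis: if each transaction keeps a resource busy for D milliseconds (its service demand), that resource's utilization is throughput × D, which can't exceed 100%. The resource with the largest demand therefore caps throughput. The sketch below assumes one server per resource (divide a multi-core processor's demand by its core count first), and the demand numbers are hypothetical.

```python
from typing import Dict, Tuple


def bottleneck(demands_ms: Dict[str, float]) -> Tuple[str, float]:
    """Return the bottleneck resource and the throughput ceiling it imposes.

    demands_ms maps each resource to its service demand: milliseconds of
    busy time one transaction costs that resource. Because utilization
    U = X * D can never exceed 1, throughput X is at most 1 / max(D).
    """
    if not demands_ms:
        raise ValueError("need at least one resource")
    name, demand = max(demands_ms.items(), key=lambda item: item[1])
    if demand <= 0:
        raise ValueError("service demands must be positive")
    max_tps = 1000.0 / demand  # convert per-ms demand to transactions/second
    return name, max_tps


if __name__ == "__main__":
    # Hypothetical per-transaction demands, chosen only for illustration.
    demands = {"processor": 0.20, "memory": 0.05, "storage": 0.50}
    name, ceiling = bottleneck(demands)
    print(f"bottleneck: {name}, ceiling: {ceiling:,.0f} transactions/s")

    # Halving the storage demand raises the ceiling; a faster processor
    # alone would leave it unchanged.
    demands["storage"] = 0.25
    name, ceiling = bottleneck(demands)
    print(f"bottleneck: {name}, ceiling: {ceiling:,.0f} transactions/s")
```

In this made-up example, upgrading the processor does nothing for peak throughput until storage stops being the component with the largest demand.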

Server components: Working together to optimize system performance

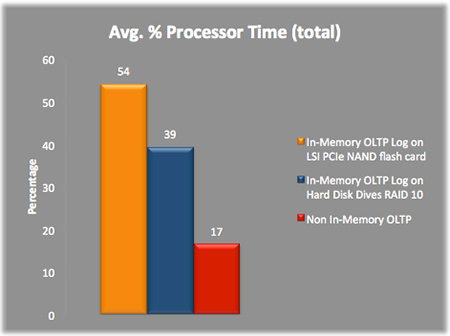

Non-volatile storage has traditionally been the system bottleneck, but this is changing with the growing adoption of fast PCIe® NAND flash, and the change is evident with in-memory database (IMDB) systems. I recently tested the customer preview of SQL Server 2014 In-Memory OLTP (online transaction processing), code-named Hekaton, with its durable tables feature, on Windows Server® 2012 R2.

With SQL Server 2014 In-Memory OLTP, memory-optimized tables and their indexes reside in memory rather than in disk-based storage, which significantly increases the performance of short, highly concurrent transactions. Even with this fast in-memory processing, maintaining durability comes with a performance tradeoff. (See my post “In-memory shopping tip: Look for durability as much as speed.”) Keep in mind that SQL Server logging still has to be performed on non-volatile storage, so the faster that storage responds, the better.

Average processor utilization during workload testing of log file on various non-volatile data storage technologies.

With SQL Server logging, if the non-volatile storage holding the log file is not fast enough, the processor will be underutilized: each transaction has to wait for its log write to complete before it can commit, and processor cycles go unused while it waits. One way to shift more work to the processor is to place the log on a Seagate Nytro™ WarpDrive® card for faster log writes. WarpDrive is one of several Seagate non-volatile NAND-flash storage products that can help boost the performance and efficiency of your server components.
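The link between log latency and processor utilization can be sketched with a simple closed-system model. It assumes a fixed number of sessions, each of which spends some processor time on a transaction and then waits for its log write before starting the next one. It deliberately ignores group commit, lock waits and processor queueing, and all the numbers (sessions, cores, latencies) are hypothetical, not results from my SQL Server 2014 tests.

```python
from typing import Tuple


def commit_model(workers: int, cpu_ms: float, log_ms: float,
                 cores: int) -> Tuple[float, float]:
    """Rough closed-system model of a commit-heavy OLTP workload.

    Each of `workers` sessions repeatedly spends `cpu_ms` on the processor,
    then waits `log_ms` for its log write to become durable before starting
    the next transaction. Ignores group commit, lock waits and CPU queueing,
    so treat the result as an optimistic sketch.
    Returns (transactions per second, processor utilization from 0 to 1).
    """
    if workers < 1 or cores < 1 or cpu_ms <= 0 or log_ms < 0:
        raise ValueError("invalid parameters")
    # Sessions waiting on the log limit throughput...
    log_bound_tps = workers * 1000.0 / (cpu_ms + log_ms)
    # ...and so does the processor running flat out on every core.
    cpu_bound_tps = cores * 1000.0 / cpu_ms
    tps = min(log_bound_tps, cpu_bound_tps)
    utilization = tps * cpu_ms / (cores * 1000.0)
    return tps, utilization


if __name__ == "__main__":
    # Hypothetical: 32 sessions, 16 cores, 0.25 ms of CPU per transaction.
    for label, log_ms in (("slower log device", 2.0), ("faster log device", 0.25)):
        tps, util = commit_model(32, 0.25, log_ms, 16)
        print(f"{label}: {tps:,.0f} tps, processor {util:.0%} busy")
```

With these assumed figures, the slower log device leaves the processor only about 22% busy, while cutting log latency to 0.25 ms lets the same sessions keep it fully busy. That matches the pattern described above: faster log writes shift work back onto the processor.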

System performance optimization is an art that involves testing against different workload types. Changing one component affects the others, so striking the right balance among the server components is key to getting the results you want. Deploying the fastest processor available might seem like the best, most obvious way to goose system performance, but it’s even more important to balance processor, memory and storage performance for the specific workload.
