Everyone knows the future of self-driving cars depends on, and is helping propel, big advances in artificial intelligence (AI). But autonomous vehicles need a lot more than just AI. Autonomous vehicles use cameras, sonar, radar, LIDAR, and GPS to sense and navigate their environment. In the process, they generate massive amounts of data.

That data must be available to the AI onboard each vehicle, and also in edge data centers where further analysis is performed. That’s why, although AI may be the wunderkind of autonomous vehicles, its siblings (data management and storage at the edge, and next-generation communications technology) are just as essential, even if they are less likely to grab headlines.

What do autonomous vehicles need? Sensors, AI, edge storage, communication …

What tools do autonomous vehicles need? First, it depends on how “autonomous” we’re talking about. Self-driving car technology is an evolutionary revolution, and vehicles on the road today operate at varying levels of autonomy.

Of course, many cars on the road today have no autonomy at all. Some cars have function-level controls that assist the driver, such as stability control. Others combine multiple function-level controls to perform more complex tasks. For example, lane centering combined with adaptive cruise control, which automatically adjusts the car’s speed, can help avoid accidents on fast-moving, congested highways. In limited self-driving cars, the vehicle can control all safety functions in some circumstances, but a driver must be available to take over at any time. More than 70 city-based test programs have drivers ready to take control of the vehicle. Fully autonomous vehicles are not yet generally operating on today’s streets; when they do, they’ll only require humans to input a destination and navigation preferences!

Several kinds of technology are needed for the automotive sector to reach the limited and fully autonomous levels.

Global positioning systems (GPS) tell a vehicle where it is. GPS receivers are now available as multi-function systems on a chip, which include application-specific compute modules to carry out the intensive calculations needed to derive accurate location information. The inputs to these calculations are signals from at least four GPS satellites, which fly in medium Earth orbit rather than low Earth orbit. Some GPS chips, like the Linx Technologies F4 Series GPS Receiver Module, can track up to 48 different satellite signals. Edge storage plays a key role in keeping a record of where the vehicle has been.
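Why at least four satellites? The receiver has four unknowns to solve for: its three position coordinates plus the offset of its own inexpensive clock from GPS time, which inflates every measured range (the “pseudorange”) by the same amount. The sketch below illustrates that calculation with a textbook Gauss-Newton least-squares solver. It is a simplified illustration under stated assumptions, not how any particular GPS chip works: positions are in an Earth-centered Cartesian frame in meters, and it ignores atmospheric delay, satellite clock corrections, and Earth’s rotation. The function names `solve_linear` and `solve_position` are hypothetical.

```python
import math


def solve_linear(a, b):
    """Solve the small dense system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def solve_position(sats, pseudoranges, max_iterations=20, tol_m=1e-6):
    """Estimate [x, y, z, clock_bias], all in meters, with Gauss-Newton least squares.

    sats: satellite (x, y, z) positions in an Earth-centered frame, in meters.
    pseudoranges: measured ranges in meters, each inflated by the same receiver clock bias.
    """
    if len(sats) < 4:
        raise ValueError("need at least four satellites for four unknowns")
    est = [0.0, 0.0, 0.0, 0.0]  # initial guess: Earth's center, zero clock bias
    for _ in range(max_iterations):
        jtj = [[0.0] * 4 for _ in range(4)]  # accumulates J^T J
        jtr = [0.0] * 4                      # accumulates J^T r
        for (sx, sy, sz), rho in zip(sats, pseudoranges):
            dx, dy, dz = est[0] - sx, est[1] - sy, est[2] - sz
            dist = math.sqrt(dx * dx + dy * dy + dz * dz)
            # Partial derivatives of the predicted pseudorange (dist + bias).
            row = [dx / dist, dy / dist, dz / dist, 1.0]
            resid = rho - (dist + est[3])
            for i in range(4):
                jtr[i] += row[i] * resid
                for j in range(4):
                    jtj[i][j] += row[i] * row[j]
        step = solve_linear(jtj, jtr)
        est = [e + s for e, s in zip(est, step)]
        if max(abs(s) for s in step) < tol_m:
            break
    return est
```

With exactly four satellites the system is just determined; tracking more satellites makes it overdetermined, and the least-squares fit then averages out measurement noise.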

In addition to knowing where it is, an autonomous vehicle needs to know what is around it. Multiple cameras provide a full, 360-degree view of the surrounding area. Each camera generates two-dimensional images represented as arrays of pixels, and each pixel requires multiple values to encode color and light intensity.
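To get a feel for how quickly pixels add up, the back-of-the-envelope sketch below computes the raw, uncompressed data rate of a camera array. The configuration (eight cameras, 1920×1080 resolution, 3 bytes per pixel for 8-bit red, green, and blue, 30 frames per second) is a hypothetical example, not a description of any specific vehicle; real systems use different sensors and typically compress video.

```python
def raw_camera_bytes_per_second(width, height, bytes_per_pixel, fps, cameras):
    """Uncompressed video data rate, in bytes per second, for a set of identical cameras."""
    return width * height * bytes_per_pixel * fps * cameras


# Hypothetical configuration: eight 1080p RGB cameras at 30 frames per second.
rate = raw_camera_bytes_per_second(1920, 1080, 3, 30, 8)
per_hour_tb = rate * 3600 / 1e12  # decimal terabytes per hour
print(f"{rate / 1e9:.2f} GB/s, {per_hour_tb:.2f} TB per hour")  # 1.49 GB/s, 5.37 TB per hour
```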

MIT’s ShadowCam sees around corners; TrackNet simultaneously detects and tracks objects

Advances in AI, particularly deep learning, have enabled sophisticated image analysis, including the ability to track objects across multiple images and analyze human poses. MIT researchers recently announced a “ShadowCam” system that uses computer-vision techniques and focuses on changes in light intensity to detect and classify shadows moving on the ground, including changes that are very difficult for the human eye to detect. The technology aims to help autonomous vehicles “see around corners” to identify approaching objects that may cause a collision.
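ShadowCam’s actual pipeline is considerably more sophisticated than what follows. As a much simpler illustration of the underlying idea (watching for small changes in light intensity), the hypothetical helpers below compare two consecutive grayscale frames and flag pixels whose brightness changed by more than a chosen threshold. This sketch assumes a stationary camera; a camera on a moving car would first need to compensate for its own motion.

```python
def changed_pixels(prev, curr, threshold):
    """Boolean mask marking pixels whose intensity changed by more than `threshold`.

    prev, curr: equally sized 2D lists of grayscale intensities (e.g. 0-255)
    from a camera assumed to be stationary between the two frames.
    """
    if len(prev) != len(curr) or any(len(p) != len(c) for p, c in zip(prev, curr)):
        raise ValueError("frames must have the same dimensions")
    return [[abs(c - p) > threshold for p, c in zip(prow, crow)]
            for prow, crow in zip(prev, curr)]


def change_fraction(mask):
    """Fraction of pixels flagged as changed (True counts as 1)."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total


# Example: a uniformly lit 4x4 patch where a faint shadow dims the lower-right corner by 6 levels.
prev = [[100] * 4 for _ in range(4)]
curr = [row[:] for row in prev]
for r in (2, 3):
    for c in (2, 3):
        curr[r][c] = 94
mask = changed_pixels(prev, curr, threshold=3)
print(change_fraction(mask))  # 0.25
```

A change of 6 intensity levels out of 255 is easy to miss by eye, which is why a small, explicit threshold is used here; choosing it well in practice depends on sensor noise.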

Dirty cameras, low ambient light, and poor visibility can limit the quality of camera data, so LIDAR (Light Detection and Ranging) is also used to collect data on the nearby environment. LIDAR emits pulsed laser light and times the reflections to measure the distance to surrounding objects, building three-dimensional point clouds that are used to analyze and reason about objects in the environment.
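The two steps behind a LIDAR point cloud can be sketched in a few lines. Distance comes from time of flight: the pulse travels to the object and back, so the range is the speed of light times the round-trip time, divided by two. Each return, together with the beam’s azimuth and elevation angles, then becomes one (x, y, z) point. The sensor-frame convention used here (x forward, y to the left, z up) and the sample returns are assumptions for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def range_from_time_of_flight(round_trip_seconds):
    """Distance to the target: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def to_point(range_m, azimuth_deg, elevation_deg):
    """Convert one return (range plus beam angles) to (x, y, z) in the sensor frame."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


# A tiny point cloud built from hypothetical (round_trip_seconds, azimuth, elevation) returns.
returns = [(200e-9, 0.0, 0.0), (200e-9, 90.0, 0.0), (100e-9, 0.0, 30.0)]
cloud = [to_point(range_from_time_of_flight(t), az, el) for t, az, el in returns]
```

A 200-nanosecond round trip corresponds to a target roughly 30 meters away; a real sensor repeats this calculation for every return it collects, which is where the large point-cloud volumes come from.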

These analyses need to be done onboard the vehicle to avoid delayed decision making, and capturing and storing the 3D object maps and image data from multiple always-streaming cameras places additional demands on edge storage.

Self-driving cars must interact with each other and with edge infrastructure

GPS and vision systems provide autonomous vehicles with information about their nearby environment. A single vehicle can collect only a limited amount of information about the longer-range environment, but by sharing information, each vehicle can acquire a better understanding of conditions across a broader area. Vehicle-to-vehicle (V2V) communications systems create ad hoc mesh networks between vehicles in the same area. V2V is used to share information and send signals, such as proximity warnings, to nearby vehicles.
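As a rough illustration of a proximity warning, the sketch below assumes each vehicle broadcasts a minimal, hypothetical message containing its ID and GPS latitude and longitude (real V2V message sets, such as the SAE J2735 Basic Safety Message, carry much more). The receiving vehicle computes the great-circle (haversine) distance to each neighbor and lists those inside a warning radius, nearest first.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


@dataclass
class PositionBroadcast:
    """Hypothetical, simplified V2V message: which vehicle is where."""
    vehicle_id: str
    lat_deg: float
    lon_deg: float


def haversine_m(a, b):
    """Great-circle distance in meters between the positions in two broadcasts."""
    lat1, lat2 = math.radians(a.lat_deg), math.radians(b.lat_deg)
    dlat = lat2 - lat1
    dlon = math.radians(b.lon_deg - a.lon_deg)
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def proximity_warnings(me, neighbors, threshold_m):
    """IDs of other vehicles closer than threshold_m, nearest first."""
    close = [(haversine_m(me, n), n.vehicle_id) for n in neighbors
             if n.vehicle_id != me.vehicle_id]
    return [vid for dist, vid in sorted(close) if dist < threshold_m]


me = PositionBroadcast("me", 42.0, -71.0)
others = [PositionBroadcast("B", 42.01, -71.0),     # about 1.1 km north
          PositionBroadcast("C", 42.0, -71.0002),   # about 17 m west
          PositionBroadcast("A", 42.0001, -71.0)]   # about 11 m north
print(proximity_warnings(me, others, threshold_m=50.0))  # ['A', 'C']
```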

The same approach can be extended to communication with nearby traffic infrastructure, such as traffic lights. This is known as vehicle-to-infrastructure (V2I) communication. V2I standards are in the early stages of development. In the United States, the Federal Highway Administration (FHWA) has issued guidance on V2I to help advance the technology. According to FHWA administrator Gregory Nadeau, the benefits of V2I extend beyond safety: “In addition to improving safety, vehicle-to-infrastructure technology offers tremendous mobility and environmental benefits.”

Longer-range communication will increasingly depend on 5G cellular technology, which can provide up to 300 megabits per second (Mbit/s) of bandwidth with latency as low as 1 millisecond. Huawei recently announced a 5G component designed specifically for autonomous vehicles.
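Even a fast link has limits when measured against vehicle data volumes. Taking the per-vehicle figure of 5TB to 20TB of data per day cited later in this article, the sketch below estimates how long one day’s data would take to upload over a 300 Mbit/s connection. It assumes decimal units and, by default, that the link runs flat out at its nominal rate, which real cellular links rarely sustain; the `efficiency` parameter is a hypothetical knob for derating it.

```python
def transfer_hours(data_terabytes, link_mbit_per_s, efficiency=1.0):
    """Hours to move data_terabytes over a link running at `efficiency` x its nominal rate."""
    bits = data_terabytes * 1e12 * 8  # decimal terabytes to bits
    seconds = bits / (link_mbit_per_s * 1e6 * efficiency)
    return seconds / 3600


for tb in (5, 20):
    print(f"{tb} TB at 300 Mbit/s: {transfer_hours(tb, 300):.1f} hours")
# 5 TB at 300 Mbit/s: 37.0 hours
# 20 TB at 300 Mbit/s: 148.1 hours
```

Under these assumptions, uploading a single day’s data would take longer than a day, which is one reason why capturing and processing data locally, at the edge, matters.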

How to handle edge data management in the thousands of exabytes

Data management and storage is a key challenge to realizing fully autonomous vehicles. Each of these vehicles can generate between 5TB and 20TB of data per day. With more than 272 million cars on the road today in the US alone, in a future when all cars are autonomous, that could hypothetically add up to 5,449,600,000TB (about 5,450 exabytes) captured per day at the upper end of that range, just in the US. A high-performance, flexible, scalable, and secure edge storage infrastructure will be essential to capture this data, and extracting as much value from it as efficiently as possible will require sophisticated data orchestration capabilities.
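The fleet-wide arithmetic is easy to reproduce. The article’s 5,449,600,000TB figure corresponds to about 272.48 million vehicles at 20TB each, so the sketch below uses that fleet size and also shows the 5TB lower bound. It uses decimal units, where 1 exabyte equals 1,000,000 terabytes; the names are illustrative.

```python
TB_PER_EB = 1_000_000  # decimal units: 1 exabyte = 10**6 terabytes


def fleet_daily_tb(vehicles, tb_per_vehicle_per_day):
    """Total terabytes captured per day by a fleet of identical vehicles."""
    return vehicles * tb_per_vehicle_per_day


vehicles = 272_480_000  # fleet size implied by the 5,449,600,000TB figure
for per_vehicle in (5, 20):
    total_tb = fleet_daily_tb(vehicles, per_vehicle)
    print(f"{per_vehicle} TB/vehicle: {total_tb:,} TB = {total_tb / TB_PER_EB:,.1f} EB per day")
# 5 TB/vehicle: 1,362,400,000 TB = 1,362.4 EB per day
# 20 TB/vehicle: 5,449,600,000 TB = 5,449.6 EB per day
```

Even the lower bound lands in the thousands of exabytes per day.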

Real-time decision making by AI requires the latest information. Historical information, such as the vehicle’s location and speed an hour ago, is not usually important for onboard AI. However, vehicle designers and autonomy and safety engineers will want access to that data. AI engineers depend on large volumes of data to train machine learning models that classify objects and motion in scenes, identify and analyze objects in LIDAR data, and optimally combine environment and infrastructure data to make decisions. Safety engineers will be especially interested in data collected by vehicles before accidents or close calls. Once again, data management at the edge will be a critical enabler of this essential part of developing and deploying autonomous vehicles.
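One common way to make sure the moments before an accident or close call are available later is a rolling buffer: the vehicle continuously keeps only the last few seconds of sensor data and, when an event is detected, saves a copy of that window to storage. The class below is a minimal, hypothetical sketch of the idea; sample format, window length, and event labels are all assumptions.

```python
from collections import deque


class PreEventRecorder:
    """Keep a rolling window of recent samples; save a copy when an event is flagged.

    Hypothetical design: samples are (timestamp_s, payload) tuples and the
    retention window is measured in seconds.
    """

    def __init__(self, window_s):
        self.window_s = window_s
        self._buffer = deque()
        self.saved_events = []  # stands in for writes to persistent edge storage

    def record(self, timestamp_s, payload):
        self._buffer.append((timestamp_s, payload))
        # Drop samples that have aged out of the rolling window.
        while self._buffer and self._buffer[0][0] < timestamp_s - self.window_s:
            self._buffer.popleft()

    def flag_event(self, label):
        """Persist the current window, e.g. after hard braking or a near miss."""
        self.saved_events.append((label, list(self._buffer)))


rec = PreEventRecorder(window_s=2.0)
for t in range(10):
    rec.record(float(t), {"speed_mps": 20.0 - t})
rec.flag_event("hard_brake")
label, window = rec.saved_events[0]
print(label, [ts for ts, _ in window])  # hard_brake [7.0, 8.0, 9.0]
```

Only flagged windows are kept, so routine driving does not consume long-term capacity while the data safety engineers care most about is preserved.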

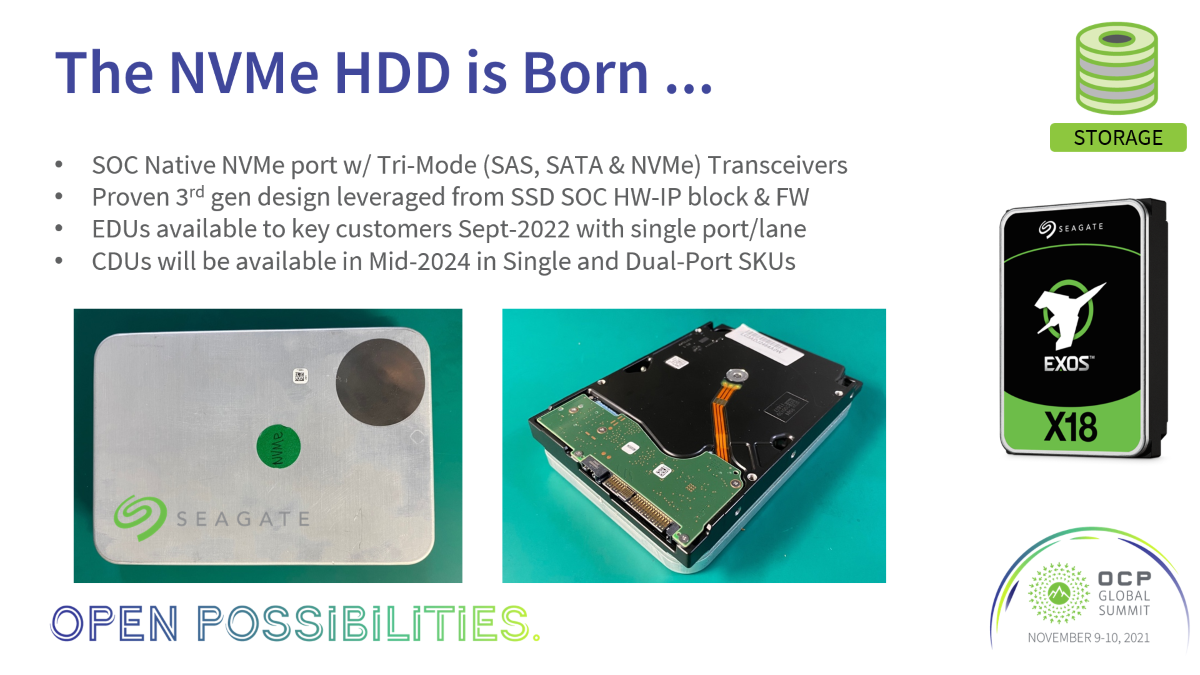

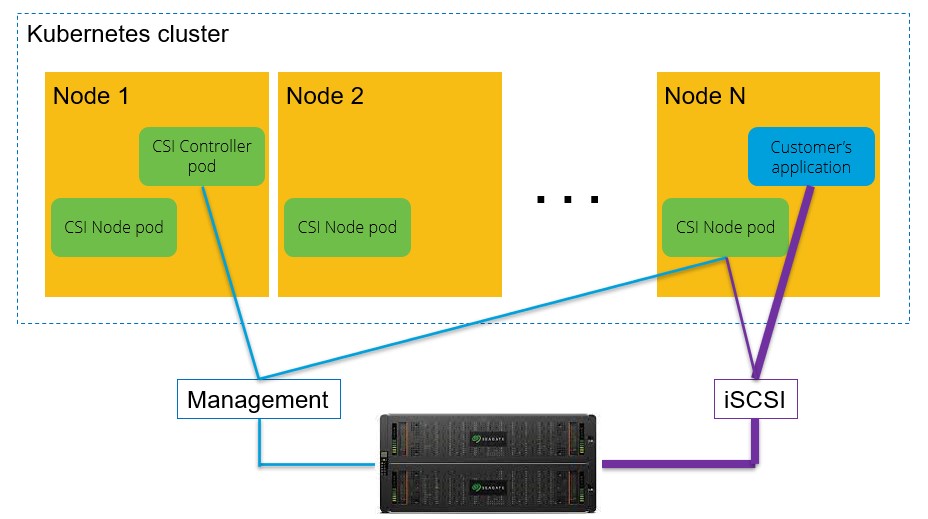

As the data generated and collected by autonomous vehicles moves from the vehicle to edge data centers, and ultimately on to on-premises and cloud data centers, optimized, tiered storage architectures will help put these vast volumes of data to active and efficient use. Recently acquired data that is being analyzed for immediate understanding and used to build machine learning models requires high throughput and low latency, and may be managed on fast-access storage systems based on SSDs and high-capacity HAMR (heat-assisted magnetic recording) drives equipped with performance-enhancing multi-actuator technology.

After most of the analysis is done, data can remain available but be stored more efficiently on high-capacity, lower-cost traditional nearline storage. Nearline storage works well when some data may be needed in the near future but most of it will not be accessed regularly. Older data that is unlikely to be accessed but must be kept for compliance or other business reasons can be moved to the archival tier.
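The tiering described above can be expressed as a simple placement policy. The sketch below is a hypothetical example: fresh data goes to the fast-access tier, data that is past active analysis but not yet old (or that is still being read) goes to nearline, and old, untouched data goes to the archive. The 7-day and 365-day thresholds are placeholder assumptions; a real policy would be tuned to workload, cost, and retention requirements.

```python
from enum import Enum


class Tier(Enum):
    PERFORMANCE = "fast-access (SSD, multi-actuator HAMR drives)"
    NEARLINE = "high-capacity nearline"
    ARCHIVE = "archive"


def choose_tier(age_days, reads_last_30_days, hot_age_days=7, archive_age_days=365):
    """Pick a storage tier from data age and recent access (hypothetical thresholds)."""
    if age_days <= hot_age_days:
        return Tier.PERFORMANCE  # still under active analysis and model training
    if age_days <= archive_age_days or reads_last_30_days > 0:
        return Tier.NEARLINE     # may be needed again, but not accessed constantly
    return Tier.ARCHIVE          # unlikely to be read, kept for compliance or business reasons


for age, reads in [(2, 0), (30, 0), (400, 5), (400, 0)]:
    print(age, reads, choose_tier(age, reads).name)
```

Checking recent reads before archiving keeps old but still-used data, such as a dataset being revisited for a safety investigation, on the more accessible tier.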

Autonomous vehicles are pushing the boundaries of artificial intelligence, communications, and storage. All three technologies are essential to achieve the goal of fully autonomous vehicles. To learn more about the role of data at the edge, check out some of our related articles, including “Only Data at the Edge Will Make Driverless Cars Safe,” “5G Will Enable AI at the Edge — If the IT Infrastructure Keeps Up,” and “Data at the Edge: How to Build the New IT Architecture.”